
NCache Cluster and Its Types in Distributed Caching

In this article, I will discuss the NCache Cluster and Its Types in Distributed Caching. Please read our previous article, where we discussed the Difference Between In-Memory Caching and Distributed Caching in ASP.NET Core Applications. NCache is an in-memory distributed caching solution designed to boost the performance and scalability of .NET applications by storing application data in memory.

Clusters, Cache Server, Nodes, Data, and Partitions:

Knowing what the terms Clusters, Cache Server, Nodes, Data, and Partitions mean within the context of NCache makes it easier to understand how the system works:

Clusters: A cluster in NCache refers to a group of Cache Servers configured to work together as a single logical unit. Each Cache Server is composed of one or more nodes, and each node stores a specific partition of the cache data. Clustering enhances the performance, scalability, and availability of the cache. If one node fails, others can take over its workload, and when replication is configured, they do so without losing data or service continuity.

Cache Server: In the context of NCache, a Cache Server refers to a server instance that hosts and manages a part of the distributed cache. Each cache server is responsible for maintaining the cache’s availability, ensuring data integrity, and providing fast access to the cached data.

Nodes: These are the individual instances within the cache server environment. Each cache instance is referred to as a Node. If we run multiple instances of NCache in a Cache Server, we can say the Cache Server is composed of Multiple Nodes. Each node hosts a portion of the distributed cache. Nodes communicate with each other to synchronize data, handle load balancing, and provide failover capabilities.

Data: In the context of NCache, data refers to the application data objects stored in the cache. These objects can be anything from simple key-value pairs to complex objects. Storing data in the cache reduces the need for applications to retrieve data from slower, disk-based databases, thereby improving performance.

Partitions: Partitioning in NCache is a method of distributing data across different nodes in the cluster. Each partition typically holds a unique subset of the total cache data, ensuring that the data is evenly distributed across the cluster. If a node goes down, only the partitions on that node are affected, and their data can be recovered from replicas if replication is configured.

What is Cluster in NCache?

A Cluster in NCache refers to a group of Cache Servers that work together to form a single logical cache unit. Clustering is a fundamental feature that provides Scalability, Load Balancing, and High Availability in a Distributed Caching System. It allows NCache to distribute data and cache workload across multiple servers, enhancing the performance and reliability of applications relying on the cache.

These Cache Servers are generally not located on the same physical machine; instead, they are distributed across multiple machines.

- Scalability: By distributing the cache across multiple servers, the system can handle more data and more requests than a single server could. This makes it possible to scale the application by simply adding more servers to the cluster.

- Fault Tolerance: Distributing the cache across different servers enhances the system’s reliability. If one server fails, the others in the cluster can continue to operate, thereby maintaining the cache’s availability.

- Load Balancing: A cluster can distribute the load across several servers, preventing any single server from becoming a bottleneck. This helps in maintaining optimal performance across the system.

- Geographical Distribution: Sometimes, cache servers are strategically placed in different locations to reduce latency for users distributed geographically. This setup ensures faster access times for users who are spread out.

Types of NCache Clusters in Distributed Caching

NCache offers several types of clustering configurations, each designed for specific scenarios and needs:

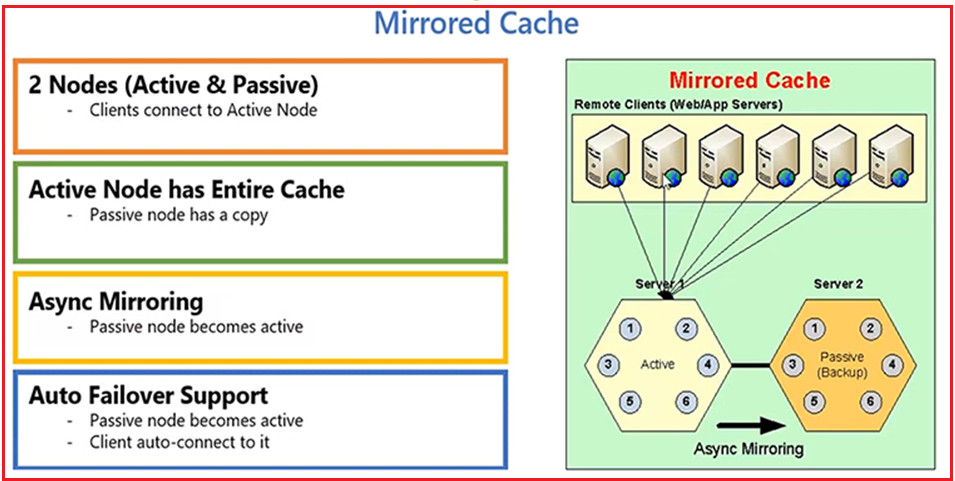

- Mirrored Cache: This configuration consists of two nodes, one of which is the active node and the other a passive standby that mirrors the active. If the active node fails, the passive node takes over, minimizing downtime and data loss.

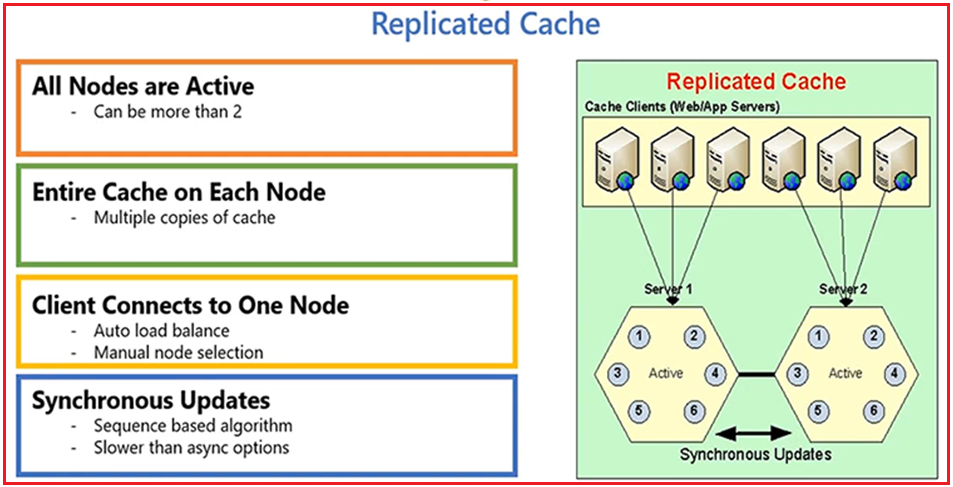

- Replicated Cache: In this setup, each node in the cluster holds a copy of the entire cache. This ensures high availability and quick recovery in case of a node failure, but it can be resource-intensive.

- Partitioned Cache: Data is partitioned across the cluster nodes, with each node storing a fraction of the cache data. This increases scalability and load distribution.

- Partition-Replica Cache: Combining aspects of both partitioned and replicated caches, this configuration partitions data across several nodes and also maintains copies of these partitions on other nodes. This setup balances the load and ensures data availability.
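The trade-off between these four configurations can be made concrete with some rough arithmetic. The sketch below is a simplified model, not an NCache API: the function name `memory_per_node`, the 12 GB data size, and the assumption of one replica per partition are all illustrative choices.

```python
def memory_per_node(topology: str, data_gb: float, nodes: int, replicas: int = 1) -> float:
    """Approximate cache data held by each node, in GB (hypothetical helper)."""
    if topology == "mirrored":
        # Two nodes: the active and the passive node each hold the full data set.
        return data_gb
    if topology == "replicated":
        # Every node holds a full copy of the cache.
        return data_gb
    if topology == "partitioned":
        # Data is split evenly across nodes, with no backup copies.
        return data_gb / nodes
    if topology == "partition-replica":
        # Each node holds its own partition plus `replicas` backup partitions.
        return data_gb * (1 + replicas) / nodes
    raise ValueError(f"unknown topology: {topology}")


for name in ("mirrored", "replicated", "partitioned", "partition-replica"):
    node_count = 2 if name == "mirrored" else 4
    per_node = memory_per_node(name, 12.0, node_count)
    print(f"{name:18s} {node_count} nodes -> {per_node:.1f} GB per node")
```

Under these assumptions, a replicated cache costs the full data size on every node, while a partition-replica cache with one replica costs about twice what a plain partitioned cache does.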

How Does the Mirrored Cache Work in Distributed Caching?

A Mirrored Cache involves two nodes: a primary node and a secondary (or backup) node. These nodes work together to form a high-availability pair. Let's understand how the Mirrored Cache works.

- Operation on Primary Node: A write operation (such as adding, updating, or deleting data) is executed on the primary node.

- Asynchronous Mirroring: Once the operation on the primary node is successfully completed, the change is sent to the secondary node. This mirroring is asynchronous, meaning the primary node does not wait for the secondary node to confirm the operation before it continues processing other requests. Asynchronous mirroring is used to enhance performance.

- Data Consistency: The secondary node receives the mirrored data and updates its own cache to reflect the changes made on the primary node. Although this happens very quickly, there is a brief period during which the data on the primary and secondary nodes might be slightly out of sync. So, while asynchronous mirroring provides performance benefits, there is a trade-off regarding data safety in case of a sudden primary node failure; any data that was not yet mirrored to the secondary node could be lost.

- Failover Scenario: If the primary node fails, the secondary node has nearly all the same data (except possibly the last changes that might not have been mirrored yet if the failure was abrupt) and can take over as the primary node to ensure continuity and data availability.

- Purpose: The main purpose of a Mirrored Cache is to provide high availability. If the primary node fails, the secondary node can take over with a near-complete copy of the cached data, minimizing downtime and limiting data loss to the changes that had not yet been mirrored.
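The steps above can be sketched as a small simulation. This is a toy model of asynchronous mirroring under simplifying assumptions (a single in-process queue, no network, no delete operations); the class and method names are hypothetical and are not part of NCache.

```python
from collections import deque


class MirroredCache:
    """Toy model of an active/passive pair with asynchronous mirroring."""

    def __init__(self):
        self.active = {}        # data on the primary (active) node
        self.passive = {}       # data on the secondary (passive) node
        self.pending = deque()  # writes not yet applied to the passive node

    def put(self, key, value):
        # The write completes on the active node first...
        self.active[key] = value
        # ...and is only queued for the passive node (no wait for an acknowledgment).
        self.pending.append((key, value))

    def mirror(self, max_ops=None):
        # Background step: drain queued writes onto the passive node.
        count = len(self.pending) if max_ops is None else min(max_ops, len(self.pending))
        for _ in range(count):
            key, value = self.pending.popleft()
            self.passive[key] = value

    def fail_over(self):
        # Abrupt failure of the active node: queued writes are lost and
        # the passive node becomes the new active node.
        lost = [key for key, _ in self.pending]
        self.pending.clear()
        self.active, self.passive = self.passive, {}
        return lost


cache = MirroredCache()
cache.put("order:1", "paid")
cache.put("order:2", "paid")
cache.mirror()                   # both writes reach the passive node
cache.put("order:3", "pending")  # still queued when the failure happens
lost_keys = cache.fail_over()
print(lost_keys)                 # ['order:3']
print(sorted(cache.active))      # ['order:1', 'order:2']
```

The write that was still in the queue at the moment of failure is the only one lost, which is exactly the window described under Data Consistency.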

For a better understanding, please have a look at the following diagram:

When to Use Mirrored Cache:

- High Availability is a Priority: Mirrored Cache is ideal when your primary concern is minimizing downtime and ensuring that a backup is always ready to take over seamlessly in the event of a failure.

- Simplicity is Required: This setup is simple to manage because it only involves two nodes, which makes it easy to implement and maintain.

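- Data Loss Must Be Kept Small: Each write is mirrored to the passive node shortly after it completes on the active node, so an abrupt failure loses at most the operations that were still waiting to be mirrored. If losing even those few operations is unacceptable, a configuration with synchronous replication is the safer choice.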

Typical Scenarios:

- Critical financial transaction systems where every transaction is vital.

- Small-scale deployments where setting up a complex cluster isn’t feasible or necessary.

How Does the Replicated Cache Work in Distributed Caching?

Replicated Caches are designed to ensure that each node within the cluster maintains an exact copy of the cached data, improving data availability and read performance across the system.

Whenever data is written (added, updated, or deleted) to one node, the change is replicated to all other nodes in the cluster. This ensures that each node always has an up-to-date copy of the entire cache. Let’s understand how a Replicated Cache works.

- Write Operation on Any Node: Unlike a Mirrored Cache, where operations are performed at the primary node, in a Replicated Cache, write operations can typically be performed on any node in the cluster. The node that receives the write operation acts as the coordinator to ensure replication for all other nodes.

- Data Propagation: After a write operation is executed on the coordinator node, the change is propagated to all other nodes in the cluster. This propagation is handled synchronously. Each node must acknowledge the update before the operation is marked as complete.

- Failover Scenario: Because each node holds a complete copy of the cache data, the system is highly resilient to individual node failures. Any node can fail without causing data loss, as other nodes continue to serve the full dataset.

- Purpose: The main advantages of a Replicated Cache are high availability and fast read access. Since each node has a full copy of the data, read requests can be handled by any node, thus distributing the load and reducing the read latency.
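As a rough illustration of the flow above, the following sketch models synchronous propagation in memory. It is an assumption-laden toy (no network, no partial failures during a write), and `ReplicatedCache` and its methods are hypothetical names rather than NCache types.

```python
class ReplicatedCache:
    """Toy model: every node holds the full cache and writes go to all nodes."""

    def __init__(self, node_names):
        self.nodes = {name: {} for name in node_names}

    def put(self, coordinator, key, value):
        if coordinator not in self.nodes:
            raise KeyError(f"node {coordinator} is not in the cluster")
        # Synchronous propagation: the write returns only after every
        # live node has applied it. The return value counts acknowledgments.
        acks = 0
        for store in self.nodes.values():
            store[key] = value
            acks += 1
        return acks

    def get(self, node, key):
        # Any node can serve a read because each one has the full data set.
        return self.nodes[node].get(key)

    def fail(self, node):
        # Remove a failed node; the remaining nodes still hold everything.
        del self.nodes[node]


cluster = ReplicatedCache(["n1", "n2", "n3"])
acks = cluster.put("n2", "product:42", {"price": 10})
cluster.fail("n2")
print(acks, cluster.get("n1", "product:42"), cluster.get("n3", "product:42"))
```

Note the cost hidden in `put`: every write touches every node, so write latency and memory use grow with cluster size even though reads get cheaper.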

For a better understanding, please have a look at the following diagram:

When to Use Replicated Cache:

- Read Scalability is Important: If your application performs a high number of read operations and you need to distribute these across multiple nodes to improve performance, a Replicated Cache is ideal.

- Data Consistency Across All Nodes is Required: This is useful in scenarios where it’s important that every node has the same view of the data.

- Fault Tolerance is Needed: Since data is replicated across all nodes, the failure of one does not impact the availability of the cache.

Typical Scenarios:

- Read-heavy applications, such as content delivery networks or information retrieval systems, that require fast access to the same data from multiple locations.

How Does the Partitioned Cache Work in Distributed Caching?

A Partitioned Cache is a common configuration in distributed caching systems designed to enhance scalability and load balancing by dividing the total cache data among multiple nodes in the cluster. Each node holds only a portion of the data, which allows the cache to scale horizontally as more nodes are added. This setup is particularly effective in environments where the cache size grows significantly, necessitating a distribution of data to manage memory efficiently and maintain fast access times. Here’s how the Partitioned Cache works in detail:

Data Distribution

- Partitioning Logic: In a Partitioned Cache, data is divided into partitions using a consistent hashing mechanism or similar algorithm. Each piece of data, typically identified by a key, is assigned to a specific partition based on this hashing function.

- Node Assignments: Each partition is assigned to a specific node in the cluster. This means every node is responsible for storing and managing a subset of the total cache data.

- Dynamic Rebalancing: When nodes are added or removed from the cluster, the partitions might be rebalanced across the new set of nodes to evenly distribute the load and optimize resource usage. This rebalancing involves migrating data from one node to another, which can be managed automatically by the caching system.
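One way to picture the three points above is a two-level mapping: keys hash to a fixed set of buckets, and buckets are assigned to nodes. The sketch below assumes that design; the bucket count of 12, the CRC32 hash, and the donor-selection rule in `add_node` are illustrative choices, not a description of NCache's actual algorithm.

```python
import zlib

BUCKETS = 12  # assumed bucket count, kept small for readability


def bucket_for(key: str) -> int:
    # A stable hash, so every client maps a given key to the same bucket.
    return zlib.crc32(key.encode("utf-8")) % BUCKETS


def build_map(nodes):
    # Initial node assignment: buckets are handed out round-robin.
    return {bucket: nodes[bucket % len(nodes)] for bucket in range(BUCKETS)}


def add_node(dist_map, new_node):
    # Rebalance by moving buckets from the most loaded nodes to the new node
    # until it owns its fair share. Only the moved buckets migrate data.
    new_map = dict(dist_map)
    old_nodes = sorted(set(dist_map.values()))
    target = BUCKETS // (len(old_nodes) + 1)
    moved = []
    while len(moved) < target:
        counts = {node: 0 for node in old_nodes}
        for owner in new_map.values():
            if owner in counts:
                counts[owner] += 1
        donor = max(old_nodes, key=lambda node: counts[node])
        bucket = next(b for b in sorted(new_map) if new_map[b] == donor)
        new_map[bucket] = new_node
        moved.append(bucket)
    return new_map, moved


three_nodes = build_map(["n1", "n2", "n3"])
four_nodes, moved = add_node(three_nodes, "n4")
owner = four_nodes[bucket_for("customer:1001")]
print(f"moved buckets: {moved}, customer:1001 now lives on {owner}")
```

Because keys map to buckets rather than directly to nodes, adding a node changes the owner of only the migrated buckets; keys in the other buckets stay where they are.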

Handling Read and Write Operations

- Read Operations: When a read request is made for a specific key, the system first determines which partition the key belongs to using the hashing function. The request is then routed to the node that holds that partition. If the data is found, it is returned to the requester; if not, a cache miss occurs.

- Write Operations: Writes follow a similar pattern as reads. When data is written to the cache, it is first hashed to determine its partition. The write operation is then executed on the node responsible for that partition. This approach ensures that all data related to a specific key is always handled by the same node, which can help maintain consistency.
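The routing described above can be sketched in a few lines. This is a simplified model that hashes keys straight to a node index (a real system would typically go through a bucket map so that nodes can be added without remapping most keys); `PartitionedCache` and `owner_of` are hypothetical names.

```python
import zlib


class PartitionedCache:
    """Toy model: each key is stored on exactly one node, chosen by hashing."""

    def __init__(self, node_names):
        self.node_names = list(node_names)
        self.stores = {name: {} for name in self.node_names}

    def owner_of(self, key):
        # The same hash decides the target node for both reads and writes.
        index = zlib.crc32(key.encode("utf-8")) % len(self.node_names)
        return self.node_names[index]

    def put(self, key, value):
        node = self.owner_of(key)
        self.stores[node][key] = value
        return node

    def get(self, key):
        node = self.owner_of(key)
        return self.stores[node].get(key)  # None models a cache miss


cache = PartitionedCache(["n1", "n2", "n3"])
written_to = cache.put("session:abc", {"user": "alice"})
print(written_to, cache.get("session:abc"), cache.get("session:missing"))
```

Since `put` and `get` share `owner_of`, a key is always read from the node it was written to, and no other node ever holds a copy of it.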

Scalability

- Horizontal Scaling: One of the primary advantages of a Partitioned Cache is its ability to scale horizontally. As more nodes are added to the cluster, the data and load can be distributed more widely, allowing the system to handle more requests simultaneously without significantly impacting performance.

- Load Distribution: Since each node only handles a subset of the total cache, the load is naturally balanced across the cluster. This prevents any single node from becoming a bottleneck, enhancing the overall performance of the system.

Fault Tolerance

- Single Points of Failure: If a node fails, all the data on that node could be lost. This is a critical consideration and often necessitates additional configuration.

- Replication for Resilience: To mitigate the risks of data loss, a Partitioned Cache can be combined with replication strategies. For example, each partition might be replicated across multiple nodes (a setup sometimes called Partition-Replica). This ensures that if one node fails, copies of the data are available on other nodes, allowing the system to continue functioning without data loss.
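The difference replication makes can be checked with a small calculation. In the sketch below, the key placements, the node names, and the rule of backing up each key on the next node are made up for illustration; `keys_lost` is a hypothetical helper.

```python
def keys_lost(primary_of, backup_of, failed_node):
    """Return the keys whose every copy lived on the failed node."""
    lost = []
    for key, primary in primary_of.items():
        backup = backup_of.get(key)  # None means no replica is configured
        copies = {primary} if backup is None else {primary, backup}
        if copies <= {failed_node}:
            lost.append(key)
    return sorted(lost)


# Where each key's partition lives (illustrative placement).
primary_of = {"a": "n1", "b": "n2", "c": "n3", "d": "n1"}

# Case 1: plain partitioned cache, no backups at all.
no_backups = {}

# Case 2: each key is also backed up on the next node in the list.
ring = ["n1", "n2", "n3"]
next_node = {node: ring[(i + 1) % len(ring)] for i, node in enumerate(ring)}
with_backups = {key: next_node[primary] for key, primary in primary_of.items()}

print(keys_lost(primary_of, no_backups, "n1"))    # ['a', 'd']
print(keys_lost(primary_of, with_backups, "n1"))  # []
```

Without backups, everything stored on the failed node is gone. With one backup on a different node, a single node failure loses nothing.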

Use Cases

- Large-Scale Applications: Partitioned Caches are ideal for large-scale applications where the amount of data is too large to be stored entirely on a single node or even a few nodes.

- Performance-Critical Systems: Systems that require high throughput and fast access times benefit from Partitioned Caches because the distributed nature of the system reduces latency and increases processing speed.

For a better understanding, please have a look at the following diagram:

When to Use Partitioned Cache:

- High Write Performance and Scalability are Needed: Partitioned Cache distributes different parts of the data across many nodes, which helps manage larger datasets efficiently and improves write performance by reducing contention on any single node.

- Linear Scalability is a Requirement: Suitable for environments expecting a linear performance increase as more nodes are added to the cache cluster.

Typical Scenarios:

- E-commerce platforms with large, diverse datasets that require frequent updates and additions.

- Applications with large session data that need to be distributed across multiple servers.

How Does the Partition-Replica Cache Work in Distributed Caching?

The Partition-Replica Cache configuration in NCache combines the advantages of both partitioned and replicated caching strategies to enhance data availability and fault tolerance while maintaining the scalability benefits of a partitioned cache. Here’s a detailed explanation of how the Partition-Replica Cache works within the NCache framework:

Partitioning of Data

- Data Distribution: In a Partition-Replica Cache, the total cache data is divided into several partitions. Each piece of data is assigned to a specific partition based on a consistent hashing mechanism or a similar algorithm. This ensures that data is evenly distributed across the nodes.

- Primary Nodes: Each partition is primarily handled by one node in the cluster, known as the primary node for that partition. The primary node manages all read and write operations for its partition.

Replication of Partitions

- Replica Nodes: Alongside each primary partition, one or more replica partitions are created and assigned to different nodes within the cluster. These replicas serve as backups for the primary partitions.

- Synchronization: Whenever data in a primary partition is modified (through additions, updates, or deletions), these changes are automatically synchronized with the corresponding replica partitions. Depending on the configuration, this replication can be synchronous (where the primary node waits for the replica nodes to acknowledge the updates before proceeding) or asynchronous (where the primary node does not wait for acknowledgments).

Handling Failures

- Failover Mechanism: If a primary node fails, one of the replica nodes containing the backup of the affected partitions can quickly take over as the new primary node. This ensures that the data remains available and accessible even in the event of node failures.

- Data Integrity and Consistency: During the failover process, the system ensures that the most recent and consistent data state is maintained. This might involve reconciling any in-flight updates that were being processed at the time of the failure.
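The partitioning, replication, and failover behavior described so far can be combined into one small model. This sketch assumes one replica per partition, stored on the next node, and synchronous replication (an asynchronous variant would queue the replica update instead of applying it inline). All class and attribute names are hypothetical and do not correspond to NCache types.

```python
import zlib


class Partition:
    def __init__(self, primary, backup):
        self.primary = primary   # node currently serving this partition
        self.backup = backup     # node holding the replica (None if there is none)
        self.data = {}           # contents on the primary
        self.replica_data = {}   # contents on the replica


class PartitionReplicaCache:
    def __init__(self, nodes):
        count = len(nodes)
        # Partition i is served by node i and backed up on the next node.
        self.partitions = [
            Partition(nodes[i], nodes[(i + 1) % count]) for i in range(count)
        ]

    def _partition(self, key):
        index = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        return self.partitions[index]

    def put(self, key, value):
        partition = self._partition(key)
        partition.data[key] = value
        if partition.backup is not None:
            # Synchronous replication: the replica is updated before returning.
            partition.replica_data[key] = value

    def get(self, key):
        return self._partition(key).data.get(key)

    def fail(self, node):
        for partition in self.partitions:
            if partition.primary == node:
                # Promote the replica: its host becomes the new primary.
                partition.primary, partition.data = partition.backup, partition.replica_data
                partition.backup, partition.replica_data = None, {}
            elif partition.backup == node:
                # Only the backup copy was lost; the primary keeps serving.
                partition.backup, partition.replica_data = None, {}


cache = PartitionReplicaCache(["n1", "n2", "n3"])
keys = [f"user:{i}" for i in range(20)]
for key in keys:
    cache.put(key, key.upper())

cache.fail("n2")
survived = all(cache.get(key) == key.upper() for key in keys)
serving = {partition.primary for partition in cache.partitions}
print(survived, sorted(serving))  # True ['n1', 'n3']
```

After the failure, two partitions are left without a replica. A real cluster would re-create those replicas on the surviving nodes, which this sketch leaves out.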

Read and Write Operations

- Read Operations: Read requests are handled by the primary node of the relevant partition. In NCache, replicas are passive backups that clients do not access directly, so read load is spread by distributing partitions across nodes rather than by reading from replicas.

- Write Operations: Writes are always directed to the primary nodes. After processing the writes, the changes are replicated to the corresponding replicas to ensure that all copies of the data are synchronized.

Scalability and Load Balancing

- Horizontal Scaling: As with a simple partitioned cache, adding more nodes to a Partition-Replica Cache allows the system to redistribute partitions and replicas, enhancing the cache’s capacity and performance.

- Load Distribution: The system can distribute both reads and writes across multiple nodes, balancing the load and reducing the risk of bottlenecks. This is particularly useful in high-throughput environments where performance and response times are critical.

Use Cases

- High Availability Systems: Partition-Replica Cache is ideal for applications where data availability and fault tolerance are critical. It is particularly suited to scenarios where losing access to the cache, even temporarily, could lead to significant disruptions.

- Large, Distributed Applications: Applications that handle large volumes of data across distributed environments benefit from the scalability and resilience provided by this caching strategy.

For a better understanding, please have a look at the following diagram:

When to Use Partition-Replica Cache:

- Both Read and Write Scalability Are Important: This configuration combines the benefits of partitioning (scalability and load balancing) with replication (availability and fault tolerance).

- High Availability and Fault Tolerance Are Critical: By ensuring that each partition has one or more replicas, this setup provides a robust failover mechanism.

- Balanced Performance is Necessary: This approach is efficient for scenarios where the application demands good performance for both read and write operations without sacrificing data availability.

Typical Scenarios:

- High-traffic websites that cannot afford any downtime and require immediate failover capabilities.

- Distributed applications that need to manage large volumes of data across different geographical locations while ensuring that each location has quick access to data.

Advantages of NCache Clustering in Distributed Caching:

The following are the advantages of clustering in NCache:

- Load Balancing: Load balancing in an NCache cluster helps distribute client requests and data across all the servers in the cluster. This prevents any single server from becoming a bottleneck, thereby enhancing the cache’s overall throughput and efficiency.

- Scalability: NCache clusters are highly scalable. You can add more servers to the cluster without downtime, and NCache automatically starts to balance the data across the new server configuration. This is crucial for maintaining performance as application demands increase.

- Data Synchronization: Synchronization mechanisms in NCache ensure that all changes to the cache are consistently propagated across all nodes in the cluster. This includes updates, deletions, and additions, as well as maintaining data integrity and consistency across the cluster.

- High Availability: High availability is achieved through data replication and automatic failover mechanisms in a cluster. If a node fails, the cluster can continue to operate either by shifting load to the remaining nodes or activating standby nodes, depending on the configuration.

- Dynamic Cluster Membership: NCache clusters support dynamic membership, meaning servers can be added or removed from the cluster on the fly. This is useful for maintenance operations and scaling the cluster in response to varying loads without affecting the cache availability.

In the next article, I will discuss How to Download and Install NCache in Windows Operating Systems. In this article, I explained the NCache Cluster and Its Types in Distributed Caching. I hope you enjoyed this article.