
Caching in ASP.NET Core Web API

In this article, I will provide a brief introduction to Caching in ASP.NET Core Web API Applications. Please read our previous article discussing Logging in ASP.NET Core Web API with Examples.

What is Caching?

Caching is the process of storing frequently accessed data in temporary storage (known as the cache) so that subsequent requests for that data can be served faster. Instead of recalculating or re-fetching data from the database or an external API every time, the application retrieves the data from the cache if it is available. This reduces unnecessary computation and network calls, improves the performance and responsiveness of applications, and reduces the load on database servers.

What is Caching in ASP.NET Core?

In ASP.NET Core, caching involves storing frequently requested data, such as database query results, API responses, or processed data, in memory or distributed cache stores. Subsequent requests for the same data can be served more efficiently without repeatedly querying the database or re-executing expensive operations. Thus, caching improves overall application performance and enhances user experience.

ASP.NET Core provides built-in caching mechanisms that make it easy to integrate caching into Web API projects. It supports various caching strategies, such as:

- In-Memory Caching: Stores data on the same server where the application is running.

- Distributed Caching: Enables caching across multiple servers using external cache stores like Redis or SQL Server.

- Response Caching: Caches entire HTTP responses, particularly useful for web APIs that return data that doesn’t change frequently.

Why is Caching Important in ASP.NET Core Web API?

Caching is essential for improving the performance and scalability of ASP.NET Core Web APIs. It reduces the time required to serve client requests, decreases the load on backend services, and helps manage server resources more efficiently.

Without caching, every request may trigger time-consuming operations, such as querying the database or calling external services. This can slow down response times and increase costs. Caching enables APIs to deliver faster responses, handle larger traffic volumes, and maintain a more consistent user experience. The following are the advantages we will get with Caching in ASP.NET Core Web API:

- Performance and Speed: By reducing round-trips to the database or external services, our API can respond to client requests more quickly.

- Scalability: When fewer resources are consumed, the server can handle more concurrent requests with the same hardware.

- Cost Reduction: If the database or third-party services charge per request or by resource usage, as is common in cloud-based environments, caching lowers costs by reducing the number of calls made to them.

- Enhanced User Experience: Faster response times lead to a better user experience, which is key for user retention and satisfaction.

In a Web API context, where response time is critical, effective caching strategies can significantly improve application performance. Consider an example where our application contains data such as a list of products, countries, states, cities, or configuration settings. This data does not change frequently, yet many users may request it at the same time, which makes it a good candidate for caching.

Without Caching:

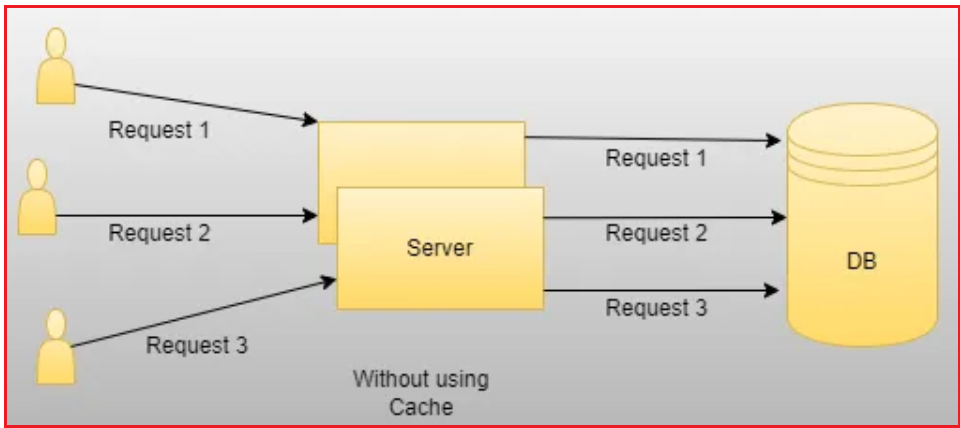

Without caching, each incoming request triggers the full processing pipeline. Every time a client requests data, the application must fetch it from the primary data source (e.g., a database or external API), perform any necessary computations, and then return the result. These repeated I/O operations increase the load on the database server and consume more resources, which can slow the application down under high traffic. For a better understanding, please look at the following diagram:

Without Caching

- The client sends a request to the Web API.

- Web API receives the request and queries the database or an external service.

- The database processes the query and returns the data.

- Web API returns data to the client.

- Every request involves a call to the database or external service, even if the requested data is the same.

With Caching:

With Caching, when a request is made, the application first checks if the data is available in the cache. If the data is found (a cache hit), it is returned immediately, bypassing the need for a full data fetch. If the data is not in the cache (a cache miss), it is retrieved from the primary data source and stored in the cache for future requests. By serving cached data, the system reduces the number of calls to the backend systems. This leads to faster response times and more efficient resource usage. For a better understanding, please look at the following diagram:

With Caching

- The client sends a request to the Web API.

- Web API checks if the requested data is already in the cache.

- If the data exists in the cache (cache hit), return it immediately from the cache.

- If the data does not exist in the cache (cache miss), make a call to the database or external service, retrieve the data, and store it in the cache.

- Web API returns the data to the client.

- The data is quickly retrieved from the cache for subsequent requests, bypassing expensive operations.

With caching, the Web API fetches data from the database or external service only on the first request or after the cached entry has expired or been invalidated.
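The hit/miss flow above can be sketched in plain C# without any ASP.NET Core types. This is only an illustration of the idea, not how the framework implements it: `ProductStore` and `CachedProductReader` are hypothetical names, the "database" is an in-process stand-in that counts how often it is called, and the dictionary-based cache is not thread-safe.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a slow primary data source (database or external API).
public class ProductStore
{
    public int Calls { get; private set; }

    public string LoadProductName(int id)
    {
        Calls++; // count round-trips to the "database"
        return $"Product-{id}";
    }
}

public class CachedProductReader
{
    private readonly ProductStore _store;
    private readonly TimeSpan _timeToLive;

    // Each cache entry holds the value and the time at which it expires.
    private readonly Dictionary<int, (string Value, DateTime ExpiresAt)> _cache = new();

    public CachedProductReader(ProductStore store, TimeSpan timeToLive)
    {
        _store = store;
        _timeToLive = timeToLive;
    }

    public string GetProductName(int id)
    {
        // Cache hit: the entry exists and has not expired yet.
        if (_cache.TryGetValue(id, out var entry) && entry.ExpiresAt > DateTime.UtcNow)
        {
            return entry.Value;
        }

        // Cache miss: fetch from the source and store the result for later requests.
        var value = _store.LoadProductName(id);
        _cache[id] = (value, DateTime.UtcNow.Add(_timeToLive));
        return value;
    }
}

public static class Program
{
    public static void Main()
    {
        var store = new ProductStore();
        var reader = new CachedProductReader(store, TimeSpan.FromMinutes(5));

        reader.GetProductName(1); // miss: goes to the store
        reader.GetProductName(1); // hit: served from the cache
        reader.GetProductName(2); // miss: different key

        Console.WriteLine(store.Calls); // prints 2
    }
}
```

Three requests result in only two calls to the data source, because the second request for product 1 is served from the cache.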

What is a Cache Hit and a Cache Miss?

Cache Hit: When a requested item is found in the cache, it’s referred to as a cache hit. The application can quickly retrieve and return the data without performing additional processing. No external or database call is required, resulting in a very fast retrieval.

Cache Miss: When a requested item is not found in the cache, it’s called a cache miss. In this case, the application must fetch the data from the original source (e.g., database or external API) and store it in the cache for future use.

What are the Different Types of Caching Available in ASP.NET Core?

ASP.NET Core provides several caching mechanisms to optimize application performance:

In-Memory Caching:

This type of caching stores data in the main memory of the web server where the application is running. It is fast and easy to implement, making it ideal for single-server scenarios where we do not need to share the cache across multiple servers.

- Stores data in the web server’s memory where the application is running.

- It is simple to set up using the IMemoryCache interface.

- It offers fast read/write performance but is limited to a single server instance.

- Ideal for scenarios where data does not need to be shared across multiple servers.

- A separate article in this series shows how to Implement In-Memory Caching in ASP.NET Core Web API with one Real-time application.
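As a minimal sketch of the `IMemoryCache` interface mentioned above, the following controller caches a list of countries for ten minutes. The controller name, the cache key, the expiration time, and the `LoadCountriesAsync` helper are assumptions made for illustration; a real application would replace the helper with a database query.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Caching.Memory;

// Program.cs must register the cache service first:
// builder.Services.AddMemoryCache();

[ApiController]
[Route("api/[controller]")]
public class CountriesController : ControllerBase
{
    private const string CacheKey = "countries"; // hypothetical key name
    private readonly IMemoryCache _cache;

    public CountriesController(IMemoryCache cache) => _cache = cache;

    [HttpGet]
    public async Task<IActionResult> Get()
    {
        // On a cache hit, GetOrCreateAsync returns the stored value.
        // On a cache miss, it runs the factory, stores the result, and returns it.
        var countries = await _cache.GetOrCreateAsync(CacheKey, async entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(10);
            return await LoadCountriesAsync();
        });

        return Ok(countries);
    }

    // Hypothetical placeholder for a real database query.
    private static Task<List<string>> LoadCountriesAsync() =>
        Task.FromResult(new List<string> { "India", "Canada", "Japan" });
}
```

Because the cache lives inside the application process, each server instance in a multi-server deployment would build and expire its own copy of this entry.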

Distributed Caching:

This type of caching stores data in an External Cache Server, such as Redis, NCache, or SQL Server, making it suitable for applications running on multiple servers or in a load-balanced environment. Distributed caching ensures consistent caching across all application instances.

- Stores data in an external cache server (e.g., Redis, SQL Server, and NCache) accessible by multiple application instances.

- Suitable for applications running on multiple servers (load-balanced environments).

- Implemented using the IDistributedCache interface.

- All application instances read from and write to the same cache, so they see the same cached data.

- A separate article in this series shows how to Implement Distributed Caching using Redis in ASP.NET Core Web API with one Real-time application.
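The sketch below shows one way the `IDistributedCache` interface might be used with Redis. It assumes the Microsoft.Extensions.Caching.StackExchangeRedis package is installed and that a Redis instance is reachable at `localhost:6379`; the instance name, cache key, expiration time, and `LoadProductsAsync` helper are hypothetical. Since a distributed cache stores strings or byte arrays, the list is serialized to JSON before it is stored.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Caching.Distributed;

// Program.cs registration (assumed values, adjust to your environment):
// builder.Services.AddStackExchangeRedisCache(options =>
// {
//     options.Configuration = "localhost:6379"; // assumed local Redis instance
//     options.InstanceName = "SampleApi:";      // hypothetical key prefix
// });

[ApiController]
[Route("api/[controller]")]
public class ProductsController : ControllerBase
{
    private const string CacheKey = "products"; // hypothetical key name
    private readonly IDistributedCache _cache;

    public ProductsController(IDistributedCache cache) => _cache = cache;

    [HttpGet]
    public async Task<IActionResult> Get()
    {
        // Cache hit: the JSON string is found in the external cache.
        var cachedJson = await _cache.GetStringAsync(CacheKey);
        if (cachedJson is not null)
        {
            return Ok(JsonSerializer.Deserialize<List<string>>(cachedJson));
        }

        // Cache miss: load from the source, then store the serialized result.
        var products = await LoadProductsAsync();
        var options = new DistributedCacheEntryOptions
        {
            AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5)
        };
        await _cache.SetStringAsync(CacheKey, JsonSerializer.Serialize(products), options);

        return Ok(products);
    }

    // Hypothetical placeholder for a real database query.
    private static Task<List<string>> LoadProductsAsync() =>
        Task.FromResult(new List<string> { "Laptop", "Keyboard", "Monitor" });
}
```

The controller depends only on `IDistributedCache`, so the backing store can be changed in Program.cs without touching this code. For local development without Redis, `builder.Services.AddDistributedMemoryCache()` registers an in-memory implementation of the same interface.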

Response Caching:

Response caching stores the responses of HTTP requests to improve response times for similar requests. By caching the entire response, ASP.NET Core can serve repeated requests without executing the same processing logic each time.

- Caches the entire HTTP response for specific endpoints.

- Allows clients or proxies to serve cached content for subsequent requests.

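- Typically configured using the [ResponseCache] attribute on controllers or actions, which sets the HTTP cache headers, optionally combined with the Response Caching Middleware, which caches responses on the server.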

- It is used when responses are identical for multiple requests and do not contain sensitive or user-specific data.

- A separate article in this series shows how to Implement Response Caching in ASP.NET Core Web API with one Real-time application.
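A minimal sketch of response caching on a single endpoint might look like the following. The controller name, the 60-second duration, and the sample data are assumptions for illustration only.

```csharp
using Microsoft.AspNetCore.Mvc;

// Program.cs, needed only if responses should also be cached on the server:
// builder.Services.AddResponseCaching();
// app.UseResponseCaching();

[ApiController]
[Route("api/[controller]")]
public class StatesController : ControllerBase
{
    // The attribute adds a "Cache-Control: public,max-age=60" header, which
    // allows clients and proxies to reuse the response for 60 seconds.
    [HttpGet]
    [ResponseCache(Duration = 60, Location = ResponseCacheLocation.Any)]
    public IActionResult Get() => Ok(new[] { "Odisha", "Ontario", "Osaka" });
}
```

On its own, the attribute only sets response headers. The middleware is what stores responses on the server and replays them for matching requests.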

Note: Though Response Caching can be beneficial, in the context of ASP.NET Core Web APIs that often serve dynamic data, we will more frequently use In-Memory or Distributed data caching for frequently accessed data.

Differences Between In-Memory Caching and Distributed Caching

The choice between in-memory and distributed caching should be determined by your application’s specific architecture and scalability needs. Please consider the following points when deciding between In-Memory and Distributed Caching in ASP.NET Core Web API Applications.

In-Memory Caching

- Storage Location: Stored in the application’s local memory.

- Scope: Limited to the single server where the application instance is running.

- Performance: Very fast since it resides in the application’s memory.

- Data Sharing: Data is not shared across multiple instances.

- Usage: Best suited for small-scale applications or scenarios where data sharing across servers is not required.

- Setup Complexity: Simple to implement.

- Limitations: This is not ideal for applications running on multiple servers or load-balanced environments since the cache is not shared across instances.

Distributed Caching

- Storage Location: Stored in an external cache server.

- Scope: Shared across multiple servers or application instances using external distributed cache solutions like Redis or SQL Server.

- Performance: It is slightly slower than in-memory caching due to network overhead but still significantly faster than querying the primary data source.

- Data Sharing: Data is shared across all instances of the app.

- Usage: Best for large-scale, distributed applications where consistency and data sharing across instances are critical.

- Setup Complexity: More complex; requires additional infrastructure.

- Advantages: Highly scalable and ideal for applications running in a load-balanced or cloud environment.

Distributed Caching Techniques Supported by ASP.NET Core Web API:

ASP.NET Core Web API supports multiple distributed caching solutions. Some of the popular distributed caching solutions are as follows:

Redis Cache:

Redis is an open-source, in-memory data store commonly used as a distributed cache. It supports a variety of data structures (strings, hashes, lists, sets, sorted sets, etc.), making it highly flexible. Redis is known for its high performance, scalability, and advanced features such as persistence, replication, and clustering. It is ideal for applications requiring rapid read/write operations, session storage, and real-time analytics.

SQL Server Cache:

This caching mechanism uses Microsoft SQL Server as the backing store. The Microsoft.Extensions.Caching.SqlServer package provides an IDistributedCache implementation that allows developers to store cached data in a SQL Server database. It is used by organizations already invested in the Microsoft ecosystem that prefer using SQL Server for caching rather than introducing a new caching technology.
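Registering the SQL Server cache might look like the sketch below. The connection string name `CacheDb` and the table name `AppCache` are hypothetical, and the cache table is assumed to exist already in the database (it can be created, for example, with the `dotnet sql-cache create` tool).

```csharp
// Program.cs (requires the Microsoft.Extensions.Caching.SqlServer package)
var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();

// Registers an IDistributedCache implementation backed by a SQL Server table.
builder.Services.AddDistributedSqlServerCache(options =>
{
    options.ConnectionString = builder.Configuration.GetConnectionString("CacheDb"); // hypothetical name
    options.SchemaName = "dbo";
    options.TableName = "AppCache"; // hypothetical table name
});

var app = builder.Build();
app.MapControllers();
app.Run();
```

Code that consumes the cache still depends only on `IDistributedCache`, so it does not need to know that SQL Server is the backing store.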

NCache:

NCache is a distributed caching solution designed for .NET applications. It provides both in-memory caching and a distributed architecture, enabling multiple application instances to share a single cache. NCache includes features like caching clusters, high availability, and various caching topologies (e.g., replicated, partitioned, client cache). It suits enterprises with .NET-heavy environments that need a mature, full-featured distributed caching solution with robust support and clustering capabilities.

Memcached:

Memcached is an open-source, high-performance, in-memory caching system designed to speed up web applications by reducing database load. It’s relatively simple and lightweight, focusing on key-value storage without the more advanced data structures offered by Redis. It is used by applications requiring a simple, fast, and scalable key-value store without the need for complex caching features.

Key Differences Between Redis, SQL Server, NCache, and Memcached:

- Redis provides rich data structures, high throughput, and clustering support, making it suitable for complex caching scenarios and high-volume applications.

- SQL Server Cache offers a straightforward caching option for applications already using SQL Server, but it may not be as performant or feature-rich as dedicated caching systems.

- NCache provides a .NET-optimized distributed cache with enterprise-grade features but often involves licensing costs.

- Memcached is simpler and more lightweight than Redis or NCache, making it a good choice for straightforward caching needs.

Caching in ASP.NET Core Web API is essential for improving application performance and responsiveness. We can significantly reduce the number of backend requests by using caching techniques such as in-memory caching, distributed caching, or response caching, thereby decreasing server load and enhancing user experience. Choosing the appropriate caching strategy and type depends on the application’s requirements, scalability needs, and architecture.

In the next article, I will discuss How to Implement In-Memory Caching in ASP.NET Core Web API Applications with Examples. In this article, I explained Caching in ASP.NET Core Web API Applications. I hope you enjoy this article, Caching in ASP.NET Core Web API.