Difference Between In-Memory Caching and Distributed Caching in ASP.NET Core

In this article, I will discuss the Difference Between In-Memory Caching and Distributed Caching in ASP.NET Core Applications. Please read our previous article, which discusses How to Implement Redis Cache in ASP.NET Core Web API Application with Examples.

In-memory caching and distributed caching are two common caching strategies used in ASP.NET Core to enhance application performance and scalability by storing frequently accessed data in a cache rather than repeatedly fetching it from a slower data source like a database. Here’s a breakdown of the differences between these two types of caching:

In-Memory Caching in ASP.NET Core Web API

In-memory caching refers to storing cached data in the memory of the web server where the application is running. This type of caching is directly accessible by the application without any external calls, making it extremely fast. For a better understanding, please have a look at the following diagram:

Setup and Configuration

- Choose a Cache Provider: ASP.NET Core comes with a built-in in-memory cache provider, which is straightforward to use and doesn’t require external infrastructure like distributed caching solutions.

- Configure the Cache: Setup involves minimal configuration since it runs within the application’s process. You need to add it to the services collection in your Program.cs configuration.

- Core Interface: In-memory caching is exposed through the IMemoryCache interface (namespace Microsoft.Extensions.Caching.Memory), which ships with ASP.NET Core.

Initialization in Program.cs

- Service Registration: In the Program.cs file, you register the in-memory caching service with the dependency injection (DI) container by calling builder.Services.AddMemoryCache(). This method registers IMemoryCache so it can be injected wherever it is needed.

- Configure Options: When registering the cache, you can optionally configure cache-wide settings such as its size limit (SizeLimit) and how often it scans for expired entries (ExpirationScanFrequency). Expiration policies themselves are set per entry, when the entry is stored.
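The registration described above can be sketched as follows. This is a minimal Program.cs, assuming a .NET 6 or later web project with implicit usings enabled; the option values are illustrative, not recommendations.

```csharp
// Program.cs (minimal hosting model)
var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();

// Registers IMemoryCache as a singleton in the DI container.
builder.Services.AddMemoryCache(options =>
{
    // Optional: how often the cache scans for expired entries.
    options.ExpirationScanFrequency = TimeSpan.FromMinutes(1);

    // Optional: cap the cache at 1024 units (you decide what a unit means).
    // Left commented out because once a limit is set, every entry must
    // declare its own Size, otherwise storing it throws an exception.
    // options.SizeLimit = 1024;
});

var app = builder.Build();
app.MapControllers();
app.Run();
```

Calling AddMemoryCache() without a lambda is enough if the default settings suit your application.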

Usage in the Application

- Accessing the Cache: You can access the in-memory cache in your controllers or services through constructor injection. ASP.NET Core’s DI container will provide an instance of IMemoryCache where you can set and retrieve cached items.

- Storing Data: To store data, you use the interface method CreateEntry or, more commonly, the extension methods Set and GetOrCreate. You provide a key under which the data is stored and, optionally, set cache entry options like expiration times.

- Retrieving Data: Data can be retrieved with the key using Get or TryGetValue. If the key exists, the cached data is returned; otherwise, Get returns the default value of the requested type (null for reference types) and TryGetValue returns false. The GetOrCreate method is useful when you want to retrieve an item and create it if it doesn’t exist in the cache.
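To make the usage steps above concrete, here is a small sketch. The Product record, the ProductCatalog class, and the simulated database call are hypothetical names I made up for illustration; only IMemoryCache and its TryGetValue, Set, and GetOrCreate methods come from the framework.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical model, used only for this example.
public record Product(int Id, string Name);

public class ProductCatalog
{
    private readonly IMemoryCache _cache;

    // Counts simulated database hits so the effect of caching is visible.
    public int DatabaseCalls { get; private set; }

    // IMemoryCache is supplied by the DI container (constructor injection).
    public ProductCatalog(IMemoryCache cache) => _cache = cache;

    public Product GetProduct(int id)
    {
        string key = $"product:{id}";

        // TryGetValue reports a miss explicitly instead of returning null.
        if (_cache.TryGetValue(key, out Product? cached) && cached is not null)
        {
            return cached;
        }

        Product product = LoadFromDatabase(id);

        // Set stores the value; here it expires five minutes after being added.
        _cache.Set(key, product, TimeSpan.FromMinutes(5));
        return product;
    }

    // Same behavior in one call: the factory runs only on a cache miss.
    public Product GetProductCompact(int id) =>
        _cache.GetOrCreate($"product:{id}", entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5);
            return LoadFromDatabase(id);
        })!;

    // Stand-in for a real database query.
    private Product LoadFromDatabase(int id)
    {
        DatabaseCalls++;
        return new Product(id, $"Product {id}");
    }
}
```

In a real application, ProductCatalog would be registered as a service and LoadFromDatabase would call your repository or DbContext.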

Cache Entry Options

- Absolute Expiration: Sets the time at which a cache entry will expire regardless of use.

- Sliding Expiration: This option extends the expiration time if the data is accessed within a given time frame. It is useful for data that should expire if not used for a specified period.

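- Priority: Indicates how important it is to keep the entry when the cache has to free space. Lower-priority entries are removed first, and an entry marked CacheItemPriority.NeverRemove is exempt from this kind of cleanup.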
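The three options above can be combined on a single entry. The following is a minimal sketch; the CacheEntryPolicies class and the chosen durations are my own assumptions for illustration. Pairing a sliding expiration with an absolute one is a common way to make sure a frequently read entry still expires eventually.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical helper class, used only for this example.
public static class CacheEntryPolicies
{
    // Options for an entry that expires after 20 seconds without access,
    // but never lives longer than 5 minutes in total.
    public static MemoryCacheEntryOptions ShortLived() =>
        new MemoryCacheEntryOptions
        {
            AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5),
            SlidingExpiration = TimeSpan.FromSeconds(20),
            // Low-priority entries are removed first when space is needed.
            Priority = CacheItemPriority.Low,
            // Only matters when the cache was configured with a SizeLimit.
            Size = 1
        };

    public static void Store(IMemoryCache cache, string key, string value) =>
        cache.Set(key, value, ShortLived());
}
```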

Eviction and Cleanup

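- Memory Pressure: In-memory caching consumes the memory of the server itself, but the ASP.NET Core runtime does not limit the cache size based on memory pressure. It is up to the developer to set a size limit (SizeLimit) and give each entry a Size. When the limit is exceeded or the cache is compacted, expired entries are removed first, followed by entries with the lowest priority.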

- Manual Eviction: Developers can manually remove entries from the cache when certain conditions are met, such as database changes that invalidate the cached data.
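Manual eviction can be sketched like this. The PriceStore class and its in-memory dictionary (standing in for a database) are hypothetical; the point is only that the cache entry is removed whenever the underlying data changes.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical service, used only for this example.
public class PriceStore
{
    private readonly IMemoryCache _cache;

    // Stand-in for a real database table.
    private readonly Dictionary<int, decimal> _database = new() { [1] = 9.99m };

    public PriceStore(IMemoryCache cache) => _cache = cache;

    public decimal GetPrice(int productId) =>
        _cache.GetOrCreate(Key(productId), entry =>
        {
            entry.SlidingExpiration = TimeSpan.FromMinutes(2);
            return _database[productId];
        });

    public void UpdatePrice(int productId, decimal newPrice)
    {
        _database[productId] = newPrice;

        // Manual eviction: drop the stale entry so the next read reloads it.
        _cache.Remove(Key(productId));
    }

    private static string Key(int productId) => $"price:{productId}";
}
```

Without the Remove call, GetPrice would keep returning the old price until the entry expired on its own.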

Advantages of In-Memory Caching:

- Speed: Since the data is stored directly in the application’s memory, access times are very quick.

- Performance Improvement: Reduces the need to retrieve data from slower databases, especially for frequently accessed data.

- Ease of Use: Integrates easily within the ASP.NET Core framework and requires no additional infrastructure.

Disadvantages of In-Memory Caching:

- Scalability: Since the data is stored in the memory of the server where the application is running, it does not scale out automatically in a web farm or cloud environment, unlike distributed caching.

- Data Volatility: Data in the in-memory cache can be lost if the web application restarts or crashes, which doesn’t happen with distributed caching solutions.

Use cases of In-Memory Caching:

In-memory caching is generally used for smaller or single-server applications where cache invalidation is not an issue and where ease of setup is a priority.

- Applications deployed on a single server or instances where each server can safely have its own isolated cache.

- Situations where latency is a critical factor and the fastest possible access to cached data is necessary.

- Environments where the workload and data size are predictable, which allows efficient memory management without the risk of overloading the server’s memory.

Distributed Caching in ASP.NET Core Web API

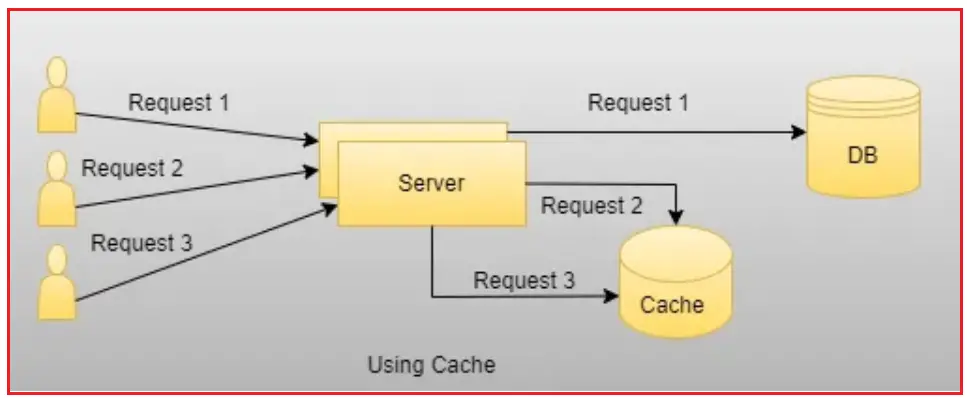

Distributed caching in ASP.NET Core Web API is a powerful feature that helps improve the performance and scalability of web applications by storing data in a cache that is shared across multiple servers or instances. This is particularly useful in environments where the application is deployed in a load-balanced cluster or across multiple geographical locations. For a better understanding, please have a look at the following diagram:

Setup and Configuration

- Choose a Distributed Cache Provider: ASP.NET Core supports several distributed cache providers, including Redis, SQL Server, and NCache. Redis is a popular choice due to its performance and features.

- Configure the Cache: This involves setting up the cache service in the application’s configuration file (e.g., appsettings.json) or through code. This includes specifying the cache provider, connection strings, and other necessary settings.

Initialization in Program.cs

- Service Registration: In the Program.cs file, you register the distributed cache service in the dependency injection container. For example, if you are using Redis, you would install the Microsoft.Extensions.Caching.StackExchangeRedis NuGet package and call builder.Services.AddStackExchangeRedisCache() to add and configure the Redis cache provider.

- Middleware Configuration: Optionally, you can configure middleware in the request pipeline that interacts with the cache, such as caching common responses.
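As a rough sketch of the registration step, assuming Redis as the provider: the connection string name "Redis" and the instance name "SampleApp:" below are placeholders I chose for illustration, and the Microsoft.Extensions.Caching.StackExchangeRedis package must be installed.

```csharp
// Program.cs (minimal hosting model)
var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();

// Registers a Redis-backed implementation of IDistributedCache.
builder.Services.AddStackExchangeRedisCache(options =>
{
    // Reads "ConnectionStrings:Redis" from appsettings.json,
    // for example "localhost:6379" (the name "Redis" is an assumption).
    options.Configuration = builder.Configuration.GetConnectionString("Redis");

    // Optional prefix added to every key, useful when several
    // applications share the same Redis server.
    options.InstanceName = "SampleApp:";
});

var app = builder.Build();
app.MapControllers();
app.Run();
```

Because the rest of the application depends only on IDistributedCache, switching to another provider later should mostly be a matter of changing this registration.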

Usage in the Application

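- Storing Data: You can store data in the cache using an instance of IDistributedCache. This interface provides methods like SetAsync and Set to save data, where you specify a key and the data item. Values are stored as byte arrays, so objects must be serialized first (for example, to JSON); the SetString and SetStringAsync extension methods handle plain strings.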

- Retrieving Data: Data can be retrieved using methods like GetAsync and Get. If the data is available in the cache, it is returned quickly without needing to re-fetch from the primary data source (like a database).

- Expiration and Eviction: The cache can be configured to evict data automatically based on time-to-live settings or space constraints. This helps manage the cache size and ensures that outdated data is removed.
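The storing, retrieving, and expiration steps above might look like this in code. The Customer record and the CustomerCache class are hypothetical names, and JSON is just one possible serialization format; the IDistributedCache extension methods SetStringAsync and GetStringAsync are part of the framework.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

// Hypothetical model, used only for this example.
public record Customer(int Id, string Name);

public class CustomerCache
{
    private readonly IDistributedCache _cache;

    // IDistributedCache is supplied by the DI container.
    public CustomerCache(IDistributedCache cache) => _cache = cache;

    public async Task SaveAsync(Customer customer)
    {
        var options = new DistributedCacheEntryOptions
        {
            // Time-to-live settings: at most 10 minutes in total,
            // and 2 minutes without being accessed.
            AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(10),
            SlidingExpiration = TimeSpan.FromMinutes(2)
        };

        // The cache stores bytes, so the object is serialized to JSON first.
        string json = JsonSerializer.Serialize(customer);
        await _cache.SetStringAsync(Key(customer.Id), json, options);
    }

    public async Task<Customer?> FindAsync(int id)
    {
        // GetStringAsync returns null when the key is not in the cache.
        string? json = await _cache.GetStringAsync(Key(id));
        return json is null ? null : JsonSerializer.Deserialize<Customer>(json);
    }

    private static string Key(int id) => $"customer:{id}";
}
```

The same class works against Redis, SQL Server, or the in-memory MemoryDistributedCache, since it only depends on the interface.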

Considerations for Cache Invalidation

- Data Invalidation: It’s important to invalidate cache entries when the underlying data changes to avoid stale data issues. This can be done manually by removing or updating the cache entry when data is updated in the database.

- Consistency: Ensuring consistency across the cache and the database can be challenging, especially in high-throughput environments. Strategies like write-through or write-behind caching can be employed to address these challenges.
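The two approaches mentioned above, removing the entry on update and writing through to the cache, can be sketched side by side. The StockService class and its dictionary (standing in for the database) are hypothetical, and a production version would also need to handle failures between the database write and the cache call.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

// Hypothetical service, used only for this example.
public class StockService
{
    private readonly IDistributedCache _cache;

    // Stand-in for the real database.
    private readonly Dictionary<string, int> _database = new();

    public StockService(IDistributedCache cache) => _cache = cache;

    public async Task<int> GetQuantityAsync(string sku)
    {
        string? cached = await _cache.GetStringAsync(Key(sku));
        if (cached is not null)
        {
            return int.Parse(cached, CultureInfo.InvariantCulture);
        }

        // Cache miss: read from the "database" (0 if unknown) and cache it.
        _database.TryGetValue(sku, out int quantity);
        await _cache.SetStringAsync(Key(sku), quantity.ToString(CultureInfo.InvariantCulture));
        return quantity;
    }

    // Invalidation: update the database, then remove the stale cache entry.
    public async Task UpdateAndInvalidateAsync(string sku, int quantity)
    {
        _database[sku] = quantity;
        await _cache.RemoveAsync(Key(sku));
    }

    // Write-through: update the database and the cache together.
    public async Task UpdateWriteThroughAsync(string sku, int quantity)
    {
        _database[sku] = quantity;
        await _cache.SetStringAsync(Key(sku), quantity.ToString(CultureInfo.InvariantCulture));
    }

    private static string Key(string sku) => $"stock:{sku}";
}
```

Invalidation keeps the write path simple at the cost of one extra miss on the next read, while write-through keeps the cache warm but adds a cache write to every update.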

Advantages of Distributed Caching:

- Scalability: Because the cache is maintained outside the application servers, it can easily scale by adding more cache servers.

- Availability: The cache is maintained independently of the application servers, so it persists even if individual application servers are restarted.

- Improved Performance: Serving data from the cache cuts the number of round-trips to the database, which can significantly reduce response times.

Disadvantages of Distributed Caching:

- Complexity: Implementing and managing a distributed cache adds complexity to the application architecture.

- Cost: Depending on the solution, there might be additional costs associated with hosting and managing the cache infrastructure.

Use Cases of Distributed Caching

Distributed Caching is preferred for large, distributed applications that require high availability and scalability, such as cloud-based applications and microservices that are load-balanced across multiple servers.

- Microservices architectures or applications that are deployed across multiple servers or locations and require a consistent view of the cached data.

- Applications that require cache persistence across server restarts or deployments.

- Applications that need to scale dynamically, where maintaining individual caches per instance would be inefficient or impractical.

Choosing the Right Caching Strategy:

- Evaluate Scalability Needs: If your application requires scaling out to multiple servers to handle load, distributed caching might be more appropriate.

- Assess Resilience Requirements: For applications where uptime and resilience are critical, distributed caching offers the advantage of surviving application restarts and maintaining a consistent state across deployments.

- Consider Latency and Performance: In scenarios where ultra-low latency is crucial, and the application can run effectively on a single server or a stable set of servers, in-memory caching might be the better choice.

- Check for Data Consistency Requirements: If your application instances must have consistent data views, distributed caching is essential.

In this article, I explained the Difference Between In-Memory Caching and Distributed Caching in ASP.NET Core, and I hope you enjoyed it. In the next article, I will discuss NCache Clustering and Its Types in Distributed Caching.