
Serilog, Elasticsearch, and Kibana in ASP.NET Core Web API

In this article, I will discuss Serilog, Elasticsearch, and Kibana in an ASP.NET Core Web API Application with Examples. Please read our previous article on the Differences Between Serilog and NLog in an ASP.NET Core Web API Application with Examples.

In modern ASP.NET Core Web API applications, logging is more than just writing messages to a file; it’s about understanding what’s happening inside your system in real time. Serilog, Elasticsearch, and Kibana together form a powerful observability stack that helps developers efficiently collect, search, and visualize application logs.

Serilog generates structured logs in JSON format, Elasticsearch stores and indexes them for lightning-fast search, and Kibana presents them in beautiful, interactive dashboards. This combination makes monitoring, debugging, and analyzing application behavior easier, faster, and more insightful, even for complex distributed systems.

What are Log Analyzing Tools?

Log Analyzing Tools are specialized software systems designed to help you collect, search, visualize, and analyze logs generated by your applications.

When your ASP.NET Core Web API or background services run, they continuously generate thousands (sometimes millions) of log entries, covering events like user logins, API requests, warnings, errors, and performance metrics.

Reading these raw log files manually is almost impossible; that’s where log analyzing tools come in. They act like a Central Control Room for developers and DevOps teams, allowing you to:

- Aggregate logs from multiple servers, APIs, and microservices.

- Search for specific events (e.g., “all 404 errors in the last hour”).

- Filter logs by severity level, timestamp, user, or request ID.

- Create real-time dashboards and alerts to monitor system health and detect issues quickly.

Real-World Analogy: Just as guards in a bank’s security control room monitor dozens of CCTV cameras from a single screen, log analysis tools let developers monitor all applications and systems from a single dashboard.

A Popular Logging and Log Analysis Stack:

- Serilog – Collects and formats logs in structured JSON format.

- Elasticsearch – Stores and indexes those logs for fast searching.

- Kibana – Visualizes the stored logs in real-time dashboards.

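Together, they form a powerful, modern observability stack, which this article refers to as the ESK Stack (Elasticsearch, Serilog, and Kibana). It is a variation of the well-known ELK Stack in which Serilog ships logs directly to Elasticsearch in place of Logstash.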

What Is Serilog?

Serilog is a popular, open-source structured logging framework built specifically for .NET applications, including ASP.NET Core Web API. Unlike traditional logging systems that write plain text lines to a file, Serilog captures each event as structured data (a message template plus named properties), which sinks such as Elasticsearch store as JSON. This means your logs aren’t just messages; they’re well-defined key-value pairs (like database fields).

This structure enables advanced log management systems (such as Elasticsearch and Kibana) to intelligently analyze, store, and visualize logs.
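To make the difference concrete, here is a minimal, language-neutral sketch in Python of what a structured log event carries: the message template, the rendered text, and the named properties as separate fields. It is not Serilog’s API; in an ASP.NET Core controller, the comparable call would be something like `_logger.LogInformation("Order {OrderId} created for customer {CustomerId}", orderId, customerId)`. The `log_event` helper and the sample values are hypothetical.

```python
import json
import re
from datetime import datetime, timezone

# Matches simple named placeholders such as {OrderId}. Real Serilog templates also
# support format specifiers and @/$ capturing operators, which this sketch ignores.
PLACEHOLDER = re.compile(r"\{(\w+)\}")


def log_event(level: str, template: str, **properties) -> dict:
    """Hypothetical helper: build one structured event as a dictionary."""
    # Render the human-readable message; unknown placeholders are left untouched.
    message = PLACEHOLDER.sub(
        lambda m: str(properties.get(m.group(1), m.group(0))), template
    )
    return {
        "@timestamp": datetime.now(timezone.utc).isoformat(),
        "level": level,
        "messageTemplate": template,  # same for every event of this kind, easy to group on
        "message": message,           # what a plain-text logger would write
        "properties": properties,     # machine-readable key-value pairs
    }


if __name__ == "__main__":
    event = log_event(
        "Information",
        "Order {OrderId} created for customer {CustomerId}",
        OrderId=101,
        CustomerId=5,
    )
    print(json.dumps(event, indent=2))
```

Because OrderId and CustomerId are kept as separate fields rather than baked into a sentence, a log store can filter on them directly, for example every event where OrderId is 101.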

Key Features:

- Structured Logging: Stores logs as JSON objects instead of plain text, making them machine-readable and easily searchable.

- Multiple Sinks: Supports many destinations (“sinks”) like console, text files, databases, cloud services, and Elasticsearch.

- Flexible Configuration: Logging levels, formats, and sinks can be managed directly from appsettings.json without touching code.

- Asynchronous Logging: When wrapped in the Async sink (Serilog.Sinks.Async), log writes happen on a background thread, which minimizes the impact on request processing.

- Deep Integration: Works seamlessly with Elasticsearch (for storage) and Kibana (for visualization), completing the end-to-end logging cycle.

Analogy:

Imagine Serilog as a Smart Personal Assistant in your application. It listens carefully to everything that happens (API requests, warnings, errors) and records it in a clean, well-organized way, noting who did what, when, and where. Then it hands this organized notebook over to Elasticsearch for storage, and Kibana reads it from there for visualization.

What Is Elasticsearch?

Elasticsearch is an open-source, distributed search and analytics engine built on top of Apache Lucene. It’s designed to store and search large volumes of structured or unstructured data, typically returning results within milliseconds. You can think of it as a Google-like search engine for your application logs, capable of searching across millions (or billions) of log entries in near real time.

Key Features:

- Stores logs as JSON documents and automatically indexes them for ultra-fast search.

- Supports powerful queries for filtering, full-text search, and data aggregation.

- Commonly used for log analytics, metrics tracking, and real-time monitoring in production systems.

Analogy:

Think of Elasticsearch as a library where every book (log) is perfectly categorized and indexed. When you search for a keyword, the library’s catalog points you to the exact book and page instantly, with no manual browsing required. That’s what Elasticsearch does for your application logs.
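The “catalog” in this analogy is, at its core, an inverted index: a map from each term to the documents that contain it, so a search looks terms up instead of scanning every log line. The Python sketch below is a deliberately simplified illustration of that idea, not how Lucene is actually implemented (Lucene adds analyzers, compressed postings, relevance scoring, and much more). The sample log lines are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Crude stand-in for an Elasticsearch analyzer: lowercase, split on non-word chars.
    return re.findall(r"\w+", text.lower())


def build_index(docs: dict[int, str]) -> dict[str, set[int]]:
    """Map every term to the set of document IDs that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index: dict[str, set[int]], query: str) -> set[int]:
    """Return IDs of documents containing every query term (AND semantics)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


if __name__ == "__main__":
    logs = {
        1: "Order 101 created for customer 5",
        2: "Order 102 failed: database timeout",
        3: "Customer 5 logged in",
    }
    idx = build_index(logs)
    print(search(idx, "order failed"))  # → {2}
```

Building the index costs some work up front, but each lookup then touches only the documents listed for the query terms, which is why searching indexed logs stays fast as volume grows.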

What Is Kibana?

Kibana is the Visualization and Dashboard Tool for Elasticsearch. It connects directly to your Elasticsearch cluster and allows you to explore, analyze, and visualize your log data through interactive charts, graphs, and tables.

Common Uses:

- Searching through logs interactively using filters and queries.

- Creating real-time dashboards showing API health, latency, and performance.

- Visualizing data trends using pie charts, bar graphs, and line charts.

- Setting up alerts for errors, downtimes, or performance bottlenecks.

Analogy:

If Elasticsearch is like a Database Engine, then Kibana is the Dashboard and Reporting Tool that makes the stored data visual, human-friendly, and actionable.

Why Do We Need Serilog, Elasticsearch, and Kibana?

Modern applications generate an enormous amount of data every second, including user requests, database operations, exceptions, API calls, and more. Without a structured logging and visualization system, tracking what’s happening inside your app becomes nearly impossible. Here’s how each component in the ESK Stack contributes to a complete, intelligent logging ecosystem:

Serilog → The Logger (Data Producer)

- Captures all events, errors, and performance details from our ASP.NET Core Web API.

- Converts raw logs into structured JSON format and sends them to Elasticsearch.

Elasticsearch → The Search Engine (Data Store)

- Stores all structured logs efficiently.

- Indexes them for instant search and correlation (e.g., find all 500 errors for the Order API in the last 30 minutes).

Kibana → The Visualization Tool (Data Viewer)

- Displays Elasticsearch logs in a graphical and interactive format.

- Helps you monitor trends, discover issues early, and make data-driven decisions.

In Short:

- Serilog = “The Writer” – records logs in a structured format and sends them to storage.

- Elasticsearch = “The Brain” – stores and indexes logs for lightning-fast searches.

- Kibana = “The Eyes” – visualizes logs and metrics for real-time insight.

Together, they create a robust end-to-end monitoring and analytics system that helps you:

- Store and analyze all application logs from a single location.

- Search and filter issues instantly.

- Visualize performance and error trends in real time.

- Detect bottlenecks or failures before they affect end users.

The result is a 360° view of your system’s health, enabling faster debugging, proactive monitoring, and smarter maintenance decisions.

Download, Install, Configure, and Verify Elasticsearch

Let us proceed and see how to download, install, configure, and run Elasticsearch locally on a Windows machine.

Download Elasticsearch

Please download and install Elasticsearch from the official website. First, visit the following URL.

https://www.elastic.co/downloads/elasticsearch

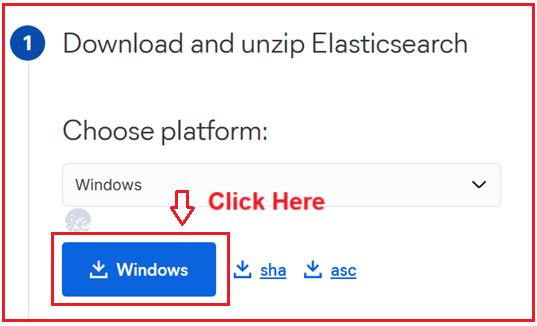

Once you visit the above URL, the Elasticsearch download page opens. From this page, download the package for your operating system. As I am using Windows, I selected Windows and downloaded the Elasticsearch ZIP file, as shown in the image.

Extract the ZIP file.

Once the download completes, extract the ZIP file contents to a location of your choice. To do so:

- Right-click on the downloaded ZIP file.

- Choose Extract All… or use a tool like 7-Zip / WinRAR.

- Extract it to a preferred folder, for example: D:\Elasticsearch

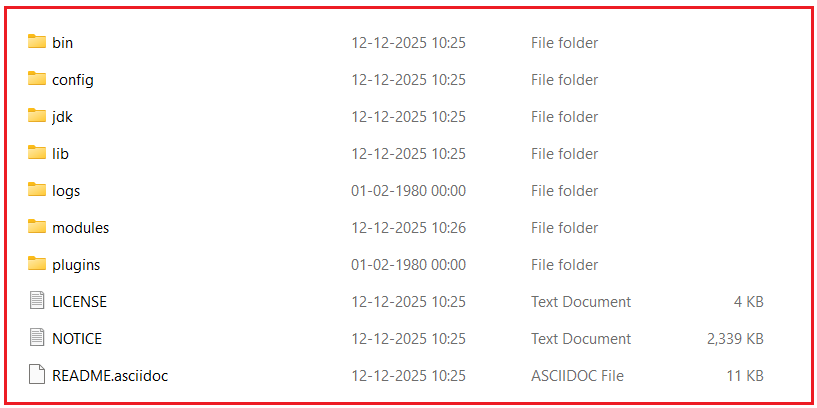

After extraction, you’ll get a folder such as D:\Elasticsearch\elasticsearch-9.2.2. Inside it, you will see several directories, including bin, config, lib, and logs, as shown in the image.

Folder Structure Overview

Before starting, let’s quickly understand the important folders inside Elasticsearch:

- bin: Contains startup scripts (elasticsearch.bat, elasticsearch-service.bat).

- config: Contains configuration files (elasticsearch.yml, jvm.options, log4j2.properties).

- data: Stores all indexed data (created automatically after the first run).

- logs: Stores Elasticsearch log files.

- lib: Contains internal libraries and dependencies.

- modules: Contains the built-in modules that ship with Elasticsearch.

- plugins: Where additional plugins are installed.

This helps you understand where to look when configuring or troubleshooting Elasticsearch.

Configure Elasticsearch

Open the config directory (e.g., D:\Elasticsearch\elasticsearch-9.2.2\config\) and locate the elasticsearch.yml file. You can leave it as-is to use the default settings (Elasticsearch listens on localhost, port 9200), or modify specific parameters. For example, to change the IP address or port:

- network.host: 192.168.0.1

- http.port: 9200

Save your changes and close the file.

Run Elasticsearch

- Step 1: Open Command Prompt: Press Start, type “cmd”, and press Enter.

- Step 2: Navigate to the Elasticsearch bin directory. Please execute the following two commands.

- D:

- cd Elasticsearch\elasticsearch-9.2.2\bin

- Step 3: Start Elasticsearch: Run the command: elasticsearch.bat

For a better understanding, please look at the following image:

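Note: The first startup may take 1–2 minutes. On this first run, Elasticsearch automatically configures security: it generates TLS certificates and a password for the elastic user (printed in this window) and writes the related settings to config\elasticsearch.yml. When startup completes, Elasticsearch listens on localhost:9200 by default. The auto-generated settings enable TLS on the HTTP layer, while this tutorial uses plain http://localhost:9200 URLs throughout; for local development, set enabled to false under xpack.security.http.ssl in config\elasticsearch.yml and restart Elasticsearch.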

Note: Don’t close this window; leave Elasticsearch running.

Reset Password for Elastic User in Elasticsearch on Windows

Open a Second Command Prompt (Administrator)

Open another Command Prompt window side by side. Navigate again:

- D:

- cd Elasticsearch\elasticsearch-9.2.2\bin

Run the Password Reset Tool

Now that Elasticsearch is running, execute this command:

- elasticsearch-reset-password.bat -u elastic -i

Explanation:

- -u elastic → specifies the user you want to reset (elastic)

- -i → interactive mode (so you can type your own password)

It will then ask:

- Please confirm that you would like to continue [y/N]

- Enter y.

Next, you’ll see:

- Please enter the new password for the [elastic] user.

- Type your new password (for example): MyStrongP@ssword123

Note: The password is not displayed as you type.

It will then ask again:

- Please confirm the new password for the [elastic] user:

- Re-enter the same password. After that, you should see:

- Password for user [elastic] successfully changed.

The above steps are shown below:

Verify the Password

- Open your browser and go to: http://localhost:9200

- It will prompt for credentials:

  - Username: elastic

  - Password: MyStrongP@ssword123

Once logged in, you will see a JSON response like this:

This JSON response gives information about our Elasticsearch node, including the version, cluster name, and more.
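The browser prompt above uses HTTP Basic authentication. If you prefer to check the node from a script, the following Python sketch (standard library only) sends the same request with an `Authorization: Basic` header and parses the JSON response. The URL and credentials are this article’s example values, and the helper names are hypothetical; `cluster_name` and `version.number` are fields of the root endpoint’s response.

```python
import base64
import json
import urllib.request


def build_request(url: str, username: str, password: str) -> urllib.request.Request:
    """Create a GET request that carries HTTP Basic credentials."""
    # Basic auth = base64("username:password") in the Authorization header.
    credentials = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {credentials}"})


def get_node_info(url: str, username: str, password: str) -> dict:
    """Call the Elasticsearch root endpoint and return the parsed JSON body."""
    with urllib.request.urlopen(build_request(url, username, password), timeout=10) as response:
        return json.load(response)


# Example (needs the running node from the previous steps):
# info = get_node_info("http://localhost:9200", "elastic", "MyStrongP@ssword123")
# print(info["cluster_name"], info["version"]["number"])
```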

Create a Kibana Service Account Token:

Open Command Prompt

Navigate to your Elasticsearch bin folder:

- D:

- cd Elasticsearch\elasticsearch-9.2.2\bin

Create the Service Account Token

Run the following command:

- elasticsearch-service-tokens.bat create elastic/kibana kibana-token

Expected output:

SERVICE_TOKEN elastic/kibana/kibana-token = AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjp5RTFqNUVKNFRtS2Y4bmRhSGJEQUJR

Copy the long string value (AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjp5RTFqNUVKNFRtS2Y4bmRhSGJEQUJR). That is your token.
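Before pasting the token into Kibana’s configuration, you can optionally confirm that Elasticsearch accepts it. Service account tokens are sent as a bearer token (`Authorization: Bearer <token>`), and the `GET /_security/_authenticate` API returns details about the identity the request authenticated as. The Python sketch below is a hedged example of that check; the helper names are hypothetical, and the token is this article’s example value, so substitute your own.

```python
import json
import urllib.request


def build_token_request(base_url: str, token: str) -> urllib.request.Request:
    """GET /_security/_authenticate using a service account token as a bearer token."""
    url = base_url.rstrip("/") + "/_security/_authenticate"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def authenticate(base_url: str, token: str) -> dict:
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(build_token_request(base_url, token), timeout=10) as response:
        return json.load(response)


# Example (needs the running node; replace the token with the one you generated):
# info = authenticate("http://localhost:9200",
#                     "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjp5RTFqNUVKNFRtS2Y4bmRhSGJEQUJR")
# print(info.get("username"))  # expected to name the elastic/kibana service account
```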

Download, Install, Configure, and Run Kibana Locally on Windows OS

Kibana is a data visualization and dashboarding tool for Elasticsearch. Let’s go through the complete process step by step, from download to verification.

Step 1: Download Kibana

Visit the Official Kibana Download Page: Open your web browser and go to: https://www.elastic.co/downloads/kibana

Choose the Correct Version

- Make sure the Kibana version matches your Elasticsearch version. (Example: If you installed Elasticsearch 9.2.2, download Kibana 9.2.2.)

- In the Windows section, click the ZIP file to download it, as shown in the image below.

Step 2: Extract the Package

Once the download completes:

- Locate the downloaded ZIP file.

- Right-click → Extract All → choose a destination folder, e.g.: D:\Kibana

After extraction, you will see the Kibana directory structure (like bin, config, data, etc.) as shown in the image below.

Step 3: Configure Kibana

Now we will make a few essential configurations before starting Kibana.

Open the Configuration File

- Navigate to: D:\Kibana\kibana-9.2.2\config

- Open the file named kibana.yml using a text editor (e.g., Notepad, Notepad++, or Visual Studio Code).

Set Elasticsearch Host

Find the line that defines Elasticsearch hosts and update it to point to your local Elasticsearch instance.

Remove the # (to uncomment it) and modify as follows:

- elasticsearch.hosts: ["http://localhost:9200"]

This tells Kibana to connect to Elasticsearch running on port 9200.

Below it, uncomment the elasticsearch.serviceAccountToken setting and set it to the service token we generated earlier:

- elasticsearch.serviceAccountToken: "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjp5RTFqNUVKNFRtS2Y4bmRhSGJEQUJR"

Other Optional Settings

You may also adjust a few optional settings to suit your needs. Please uncomment the following:

- server.port: 5601

- server.host: "localhost"

These define where Kibana runs (default: http://localhost:5601).

Generate Keys Using Kibana CLI:

Open Command Prompt

- Press Win + R, type cmd, and press Enter.

- Navigate to the bin folder by executing the following two commands one by one:

  - D:

  - cd Kibana\kibana-9.2.2\bin

Then, run:

- kibana-encryption-keys.bat generate

It generates three encryption keys similar to the following (your values will be different):

- xpack.encryptedSavedObjects.encryptionKey: 1381a9adc72d53132bd02a561e45df32

- xpack.reporting.encryptionKey: 126950f4a1448d9355d943f773530d63

- xpack.security.encryptionKey: 4545c54c6a79c694d44c3a15d2a40ce9

Add these to kibana.yml

Paste the generated values at the bottom of your kibana.yml file. These should be kept secret and not changed later; otherwise, saved sessions, reports, and encrypted objects will break.

# ================== Custom Encryption Keys ==================
xpack.encryptedSavedObjects.encryptionKey: 1381a9adc72d53132bd02a561e45df32
xpack.reporting.encryptionKey: 126950f4a1448d9355d943f773530d63
xpack.security.encryptionKey: 4545c54c6a79c694d44c3a15d2a40ce9
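The official kibana-encryption-keys tool remains the recommended way to create these values. Purely to illustrate what they are (random strings that Kibana expects to be at least 32 characters long), here is a Python sketch that produces 32 hexadecimal characters per key, matching the format of the output above, and renders them as kibana.yml lines. The helper names are hypothetical.

```python
import secrets

# The three settings produced by kibana-encryption-keys in the output above.
SETTINGS = (
    "xpack.encryptedSavedObjects.encryptionKey",
    "xpack.reporting.encryptionKey",
    "xpack.security.encryptionKey",
)


def generate_keys() -> dict[str, str]:
    # token_hex(16) returns 16 cryptographically random bytes as 32 hex characters.
    return {name: secrets.token_hex(16) for name in SETTINGS}


def to_kibana_yml(keys: dict[str, str]) -> str:
    """Render the keys as lines to append to kibana.yml."""
    lines = ["# ================== Custom Encryption Keys =================="]
    lines += [f"{name}: {value}" for name, value in keys.items()]
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_kibana_yml(generate_keys()))
```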

Step 4: Run Kibana

Now let’s start Kibana manually.

Open Command Prompt

- Press Win + R, type cmd, and press Enter.

- Navigate to the bin folder by executing the following two commands one by one:

  - D:

  - cd Kibana\kibana-9.2.2\bin

Start Kibana

- Run: kibana.bat

You’ll see logs appearing in the console. Wait until you see a message like:

- Server running at http://localhost:5601

This means Kibana has started successfully.

Step 5: Access Kibana

Now, open your browser and visit: http://localhost:5601

It will ask you to enter your username and password. Provide the following credentials:

- Username: elastic

- Password: MyStrongP@ssword123

Then, click on the Login button as shown in the image below:

Once you click on the Login button, you will see the Kibana Welcome Screen. Click on “Explore on my own” to start using Kibana and explore your Elasticsearch data visually!

Once you click on the Explore on my own button, it will open the Welcome Page as shown in the image below:

Configuring Serilog to use Elasticsearch:

First, we need to install the Elasticsearch package for Serilog. Please note that we have already installed other required packages for Serilog.

- Install-Package Serilog.Sinks.Elasticsearch

Modify appsettings.json to include Elasticsearch Sink:

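Please modify your appsettings.json file with the following Serilog settings. This configuration wraps four sinks in the Async sink: Console, a daily rolling File, SQL Server (MSSqlServer), and the Elasticsearch instance running on http://localhost:9200. It also sets the minimum logging levels and adds the global Application and Server properties to every log event.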

{

"ConnectionStrings": {

"DefaultConnection": "Server=LAPTOP-6P5NK25R\\SQLSERVER2022DEV;Database=OrderManagementDB_Evening;Trusted_Connection=True;TrustServerCertificate=True;"

},

"Serilog": {

// Global Minimum Log Level Configuration

"MinimumLevel": {

// "Information" is the default logging level for the entire app.

// Debug and Verbose (Trace) logs will be ignored unless overridden.

"Default": "Information",

// Override logging behavior for specific namespaces.

"Override": {

// Microsoft framework logs: Debug and above (verbose; change to Warning to reduce noise).

"Microsoft": "Debug",

// System namespace logs: Debug and above (change to Error to log only System-level errors).

"System": "Debug"

}

},

// Sink Configuration - Where the Logs Will Be Written

"WriteTo": [

{

// Async Sink - wraps all child sinks for high performance.

// Prevents blocking the request thread due to heavy logging.

"Name": "Async",

"Args": {

"configure": [

// CONSOLE SINK (ASYNC + DEBUG LEVEL)

{

"Name": "Console",

"Args": {

// Only Debug and higher logs will appear in the console.

"restrictedToMinimumLevel": "Debug",

// Defines how the console log line will be formatted.

"outputTemplate": "{Timestamp:yyyy-MM-dd HH:mm:ss} [{Level:u3}] [{Application}/{Server}] [CorrelationId: {CorrelationId}] {Message:lj}{NewLine}{Exception}"

}

},

// File Sink (Async + Daily Rolling + Retention Policy)

{

"Name": "File",

"Args": {

// Debug and higher logs are written to the file (subject to the minimum levels above).

"restrictedToMinimumLevel": "Debug",

// Log file location and naming pattern.

// Example: Logs/OrderManagementAPI-20251210.txt

"path": "Logs/OrderManagementAPI-.txt",

// Create a new file daily.

"rollingInterval": "Day",

// Keep only last 30 log files (older ones auto-deleted).

"retainedFileCountLimit": 30,

// Maximum size of each log file = 10 MB.

"fileSizeLimitBytes": 10485760,

// When file exceeds size limit → create a new file automatically.

"rollOnFileSizeLimit": true,

// Log message format in the text file.

"outputTemplate": "{Timestamp:yyyy-MM-dd HH:mm:ss} [{Level:u3}] [{Application}/{Server}] [CorrelationId: {CorrelationId}] [{SourceContext}] {Message:lj} {NewLine}{Exception}"

}

},

// SQL Server Sink - Store Logs Inside Database Table

{

"Name": "MSSqlServer",

"Args": {

// Connection string used by MSSqlServer sink.

"connectionString": "Server=LAPTOP-6P5NK25R\\SQLSERVER2022DEV;Database=OrderManagementDB_Evening;Trusted_Connection=True;TrustServerCertificate=True;",

// Only "Warning" and above logs go to the database.

"restrictedToMinimumLevel": "Warning",

// Sink-specific configuration section.

"sinkOptionsSection": {

// Table name where logs will be inserted.

"tableName": "Logs",

// Serilog will auto-create the Logs table if missing.

"autoCreateSqlTable": true

}

}

},

// Elasticsearch sink

{

"Name": "Elasticsearch",

"Args": {

"nodeUris": "http://elastic:MyStrongP%40ssword123@localhost:9200", // URL where Elasticsearch is running. @ is replaced with %40

"autoRegisterTemplate": true, // Automatically registers an index template in Elasticsearch

"indexFormat": "aspnetcore-logs-{0:yyyy.MM.dd}" // Defines the naming pattern for the Elasticsearch index. (daily indexes in this example).

}

}

]

}

}

],

// Global Enricher Properties - added to Every Log Event

"Properties": {

// Application Name (helps identify microservice in distributed systems)

"Application": "App-OrderManagementAPI",

// Machine/Server Name (useful in load-balanced or cloud environments)

"Server": "Server-125.08.13.1"

}

},

"AllowedHosts": "*"

}
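Two of the Elasticsearch sink settings are easy to get wrong: the credentials in nodeUris must be percent-encoded (an unescaped @ in the password would be read as the end of the user-info part of the URL), and indexFormat is a .NET format string, so {0:yyyy.MM.dd} yields one index per day. The Python sketch below only illustrates what those values resolve to; the helper names are hypothetical, and the fnmatch check mimics the aspnetcore-logs-* wildcard used for the Kibana data view later in this article.

```python
from datetime import date
from fnmatch import fnmatchcase
from urllib.parse import quote


def node_uri(user: str, password: str, host: str = "localhost", port: int = 9200) -> str:
    """Build an http URI with percent-encoded credentials (e.g. '@' becomes '%40')."""
    return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


def daily_index(day: date, prefix: str = "aspnetcore-logs-") -> str:
    """Equivalent of the .NET pattern 'aspnetcore-logs-{0:yyyy.MM.dd}' for one date."""
    return prefix + day.strftime("%Y.%m.%d")


def matches_data_view(index: str, pattern: str = "aspnetcore-logs-*") -> bool:
    """Approximate Kibana's wildcard index pattern with shell-style matching."""
    return fnmatchcase(index, pattern)


if __name__ == "__main__":
    print(node_uri("elastic", "MyStrongP@ssword123"))
    print(daily_index(date(2025, 12, 10)))
```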

Open Kibana

Navigate to: http://localhost:5601

Once it loads, log in using:

- Username: elastic

- Password: <your-password>

You will land on the Kibana Home Page.

Step-1: Create a Data View

This tells Kibana which Elasticsearch index your logs are stored in.

Steps:

- From the left sidebar, go to Management → Stack Management.

- Click Data Views (in older versions, it may say Index Patterns).

- Click Create Data View to open the Create Data View screen.

- Configure the following:

  - Name: give it a friendly name, such as ASP.NET Core Logs

  - Index pattern: aspnetcore-logs-*

  - Timestamp field: keep it as @timestamp.

- Click the Save data view to Kibana button (bottom right).

Done! Kibana now knows where your log data lives.

Step-2: View Logs in “Discover”

- After saving, go to the left sidebar → Analytics → Discover.

- In the top-left dropdown, select your new data view:

  - ASP.NET Core Logs

- You should now see your structured log entries. With the sink’s default settings, level and messageTemplate are top-level fields, while your custom properties are nested under fields, so the entries include fields like:

  - @timestamp

  - level

  - messageTemplate

  - fields.Application

  - fields.Server

  - fields.SourceContext

  - fields.CorrelationId

  - fields.OrderId, fields.CustomerId, fields.ElapsedMs, etc.

- Adjust the time filter (top-right) to “Last 15 minutes” or “Last 24 hours,” depending on when you ran your API calls.

Step-3: Filter, Search, and Inspect

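You can now query logs using KQL (Kibana Query Language). In KQL, a field is matched with field : value (not =), conditions are combined with AND or OR, and numbers can be compared with >, >=, <, and <=. Field names are case-sensitive. With the sink’s default settings, custom properties such as SourceContext and CorrelationId are stored under fields, so they are queried as fields.SourceContext, fields.CorrelationId, and so on.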

All Information Logs

level : "Information"

Shows all normal process-flow messages, such as API requests, successful inserts, or controller startup.

All Warning Logs

level : "Warning"

Shows issues that didn’t stop execution but need review (e.g., invalid data, slow responses).

All Error Logs

level : "Error"

Shows all failures or exceptions in your application.

Logs from Specific Controllers

All Logs from Orders Controller

fields.SourceContext : "OrderManagementAPI.Controllers.OrdersController"

Displays every log generated by the OrdersController, across all levels.

Only Warning Logs from Orders Controller

level : "Warning" AND fields.SourceContext : "OrderManagementAPI.Controllers.OrdersController"

Helps you find non-critical but notable issues coming from the Orders module.

Only Errors from Orders Controller

level : "Error" AND fields.SourceContext : "OrderManagementAPI.Controllers.OrdersController"

Shows exceptions or failures related to orders, e.g., failed inserts or invalid order IDs.

Logs by Correlation ID (Track a Single Request)

All Logs for a Particular Request

fields.CorrelationId : "87b2d9aa-c1d8-42e3-b245-f5d48b908b1c"

Traces everything that happened during one API request, across controllers and services.

Only Errors for That Request

level : "Error" AND fields.CorrelationId : "87b2d9aa-c1d8-42e3-b245-f5d48b908b1c"

Pinpoints exactly where that request failed.

Only Info Logs for That Request

level : "Information" AND fields.CorrelationId : "87b2d9aa-c1d8-42e3-b245-f5d48b908b1c"

Shows normal activity for that same request (e.g., validation passed, record inserted).
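The same per-request lookup can also be run outside Kibana against Elasticsearch’s _search API. The Python sketch below builds a request body with standard query DSL clauses (a bool query with filter clauses, match_phrase, and a sort on @timestamp) and posts it to the aspnetcore-logs-* indices. It assumes the sink’s default document layout, where level is a top-level field and CorrelationId is nested under fields; the helper names are hypothetical, so adjust field names if your documents look different.

```python
import base64
import json
import urllib.request
from typing import Optional


def correlation_query(correlation_id: str, level: Optional[str] = None) -> dict:
    """Query DSL body: every event for one request, oldest first, optionally one level."""
    filters = [{"match_phrase": {"fields.CorrelationId": correlation_id}}]
    if level is not None:
        filters.append({"match": {"level": level}})
    return {
        "query": {"bool": {"filter": filters}},
        "sort": [{"@timestamp": {"order": "asc"}}],
        "size": 100,  # return up to 100 events
    }


def search_logs(base_url: str, user: str, password: str, body: dict) -> dict:
    """POST the body to /aspnetcore-logs-*/_search with Basic auth."""
    auth = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        base_url.rstrip("/") + "/aspnetcore-logs-*/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Basic {auth}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


# Example (needs the running node):
# result = search_logs("http://localhost:9200", "elastic", "MyStrongP@ssword123",
#                      correlation_query("87b2d9aa-c1d8-42e3-b245-f5d48b908b1c", level="Error"))
# for hit in result["hits"]["hits"]:
#     print(hit["_source"]["@timestamp"], hit["_source"].get("message"))
```

Using filter clauses rather than must keeps the query in filter context, which skips relevance scoring; for log lookups, ordering by time is usually more useful than a score.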

Logs by Execution Time (Performance Analysis)

All Slow Requests (> 1 second)

fields.ElapsedMs > 1000

Finds API calls that took more than 1 second, which is useful for spotting performance bottlenecks.

Very Fast Requests (< 100 ms)

fields.ElapsedMs < 100

Confirms that lightweight API endpoints are responding quickly.
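For numeric properties such as ElapsedMs, a KQL comparison corresponds to a range query in the query DSL. A minimal Python sketch of that request body follows, again assuming the property is stored as fields.ElapsedMs (the sink’s default layout) and mapped as a number; the helper name is hypothetical.

```python
import json
from typing import Optional


def elapsed_range_query(gt_ms: Optional[int] = None, lt_ms: Optional[int] = None) -> dict:
    """Build a range query on fields.ElapsedMs; either bound may be omitted."""
    bounds = {}
    if gt_ms is not None:
        bounds["gt"] = gt_ms  # strictly greater than, like KQL '>'
    if lt_ms is not None:
        bounds["lt"] = lt_ms  # strictly less than, like KQL '<'
    if not bounds:
        raise ValueError("at least one bound is required")
    return {
        "query": {"range": {"fields.ElapsedMs": bounds}},
        # Slowest requests first.
        "sort": [{"fields.ElapsedMs": {"order": "desc"}}],
    }


if __name__ == "__main__":
    print(json.dumps(elapsed_range_query(gt_ms=1000), indent=2))  # slow requests
    print(json.dumps(elapsed_range_query(lt_ms=100), indent=2))   # fast requests
```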

Logs by Business Data

All Logs Related to a Customer

fields.CustomerId : 5

Shows every log involving Customer ID 5: orders, lookups, or validation issues.

All Logs Related to a Specific Order

fields.OrderId : 101

Traces everything related to Order ID 101: creation, updates, and retrieval.

Integrating Serilog, Elasticsearch, and Kibana into an ASP.NET Core Web API enables developers to move beyond plain-text logging into a smarter, data-driven monitoring ecosystem. With this setup, every log becomes a searchable, visual insight that improves troubleshooting, performance tracking, and proactive system health management.

In this article, I explained how to integrate Serilog, Elasticsearch, and Kibana into an ASP.NET Core Web API application with examples. In the next article, I will discuss how to implement caching in an ASP.NET Core Web API application. I hope you enjoy this article, “How to Integrate Elasticsearch in an ASP.NET Core Web API Application.”
