Max Pooling and ReLU Activations in CNN

In this article, I am going to discuss Max Pooling and ReLU Activations in CNN. Please read our previous article where we discussed Overfitting and Regularization in CNN.

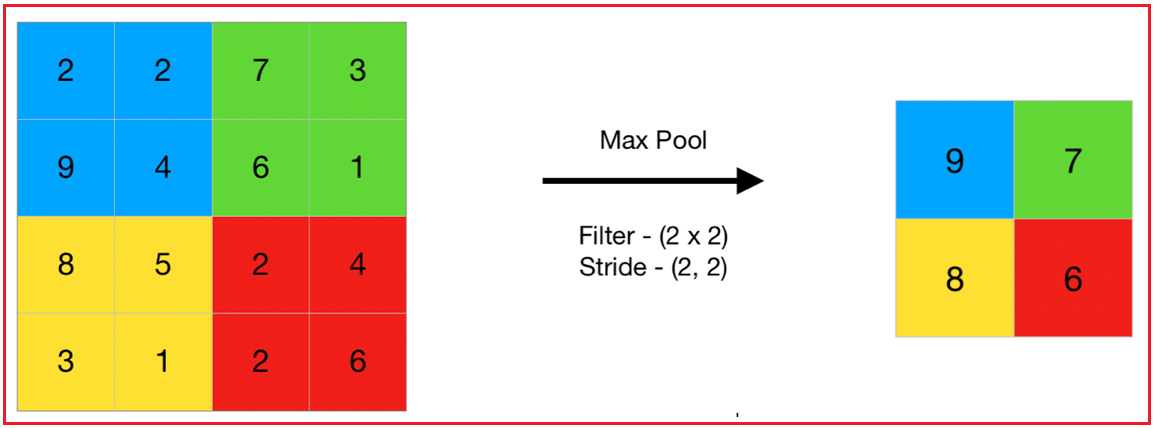

Max pooling is a down-sampling operation applied to the feature maps produced by a ConvNet's convolutional layers. It reduces the spatial size of the feature maps, which lowers the computational cost of later layers and helps control overfitting.

Max pooling slides a fixed-size window over each feature map and outputs the maximum value within each window. The window size and the stride (the step between consecutive windows) are both adjustable hyperparameters. A 2×2 window with a stride of 2, for example, halves the height and width of a feature map.
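The sliding-window procedure can be sketched in a few lines of plain Python. This is a minimal illustration, not a framework implementation: the function name `max_pool2d`, its parameters `size` and `stride`, and the sample 4×4 feature map are all made up for this example, and nested lists are used instead of a tensor library to keep the sketch dependency-free.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Slide a size x size window over a 2-D list and keep each window's maximum."""
    rows, cols = len(feature_map), len(feature_map[0])
    # Number of window positions that fit along each dimension (no padding).
    out_rows = (rows - size) // stride + 1
    out_cols = (cols - size) // stride + 1

    pooled = []
    for i in range(out_rows):
        pooled_row = []
        for j in range(out_cols):
            top, left = i * stride, j * stride
            # Collect every value covered by the current window.
            window = [feature_map[r][c]
                      for r in range(top, top + size)
                      for c in range(left, left + size)]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


# Hypothetical 4x4 feature map, chosen only for illustration.
feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 4, 3, 8],
]

pooled = max_pool2d(feature_map, size=2, stride=2)
print(pooled)  # [[6, 4], [7, 9]]
```

With a 2×2 window and a stride of 2, the 4×4 input shrinks to 2×2. Using a stride of 1 instead would make the windows overlap and give a 3×3 output.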

Max pooling keeps the strongest activation in each window and discards the weaker ones, so the most prominent features survive while their exact position within the window is lost. This also makes the feature maps more robust to small translations and deformations in the input data.
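A small one-dimensional sketch can make the robustness to small translations concrete. The helper `max_pool1d` and the sample activation lists below are hypothetical and exist only for this illustration. Note that the invariance is partial: it holds only while the shifted activation stays inside the same pooling window.

```python
def max_pool1d(values, size=2, stride=2):
    """1-D max pooling: maximum of each window of `size` values."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, stride)]


original = [0, 0, 9, 0, 0, 0]
shifted_by_one = [0, 0, 0, 9, 0, 0]   # activation moved one step, same window
shifted_by_two = [0, 0, 0, 0, 9, 0]   # activation moved into the next window

print(max_pool1d(original))        # [0, 9, 0]
print(max_pool1d(shifted_by_one))  # [0, 9, 0]  (unchanged)
print(max_pool1d(shifted_by_two))  # [0, 0, 9]  (changed)
```

The one-step shift leaves the pooled output untouched, while the two-step shift crosses a window boundary and does change it.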

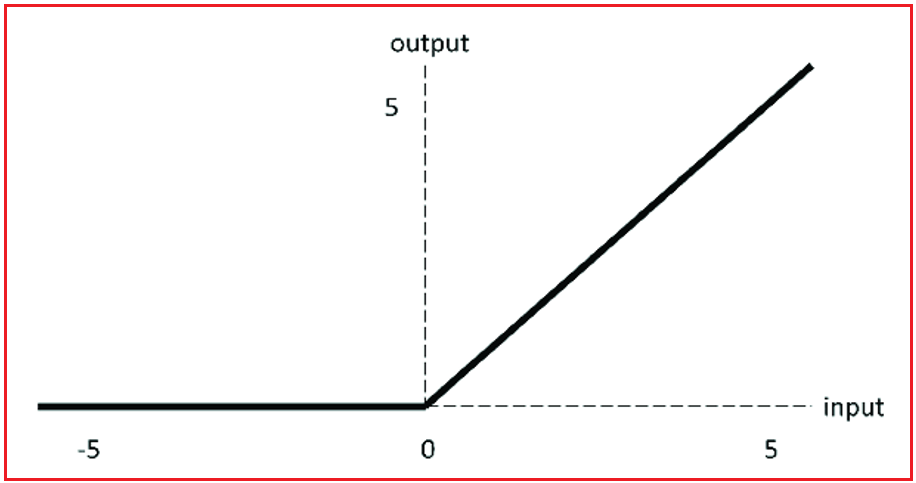

ReLU (Rectified Linear Unit): ReLU is a commonly used activation function in ConvNets. It takes a single value as input and returns the input unchanged if it is positive, or zero otherwise.

The ReLU function is defined as f(x) = max(0, x), where x is the input value. It thresholds the input at zero and is used to add nonlinearity to the ConvNet.
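The definition f(x) = max(0, x) translates directly into code. The following is a minimal sketch; the function name `relu` and the sample inputs are chosen only for illustration.

```python
def relu(x):
    """Rectified Linear Unit: f(x) = max(0, x)."""
    return max(0.0, x)


# Hypothetical inputs covering negative, zero, and positive values.
inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]
outputs = [relu(x) for x in inputs]
print(outputs)  # [0.0, 0.0, 0.0, 1.5, 3.0]
```

Negative inputs are clipped to zero and positive inputs pass through unchanged.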

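ReLU has several advantages over other activation functions. It is cheap to compute, and it does not saturate for positive inputs: its gradient is exactly 1 whenever x > 0, whereas the gradients of saturating functions such as sigmoid and tanh shrink toward zero for large inputs. This makes deep ConvNets easier to train, since gradients can propagate back through active units without being attenuated. For negative inputs the gradient is zero, so an inactive unit passes no gradient.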
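To see what saturation means in practice, the gradient of ReLU can be compared with that of a saturating activation. The sigmoid is used here purely as an example of such a function (the choice is an assumption of this sketch), and the helper names `relu_grad` and `sigmoid_grad` are hypothetical.

```python
import math


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def sigmoid_grad(x):
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


# As the input grows, the sigmoid gradient shrinks while the ReLU gradient stays at 1.
for x in [0.5, 5.0, 10.0]:
    print(f"x={x:5.1f}  relu_grad={relu_grad(x):.1f}  sigmoid_grad={sigmoid_grad(x):.6f}")
```

The sigmoid gradient never exceeds 0.25 (its value at x = 0) and falls off quickly for larger inputs, which is the attenuation that ReLU avoids for active units.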

In ConvNets, ReLU is frequently used together with max pooling: a convolutional layer is typically followed by a ReLU activation and then a max pooling layer. This combination enables a ConvNet to learn robust features that are insensitive to small translations and deformations in the input data.
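The combination can be sketched by applying ReLU to a small feature map and then pooling the result. Everything here is illustrative: `relu_map`, `max_pool_2x2`, and the values in `conv_output` are hypothetical stand-ins for the output of a convolutional layer, and the pooling helper assumes a 2×2 window with a stride of 2 on a map with even height and width.

```python
def relu_map(feature_map):
    """Apply ReLU to every value of a 2-D list."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 (assumes even height and width)."""
    return [[max(feature_map[i][j], feature_map[i][j + 1],
                 feature_map[i + 1][j], feature_map[i + 1][j + 1])
             for j in range(0, len(feature_map[0]) - 1, 2)]
            for i in range(0, len(feature_map) - 1, 2)]


# Hypothetical convolution output containing both negative and positive values.
conv_output = [
    [-1.0,  2.0, -3.0, -4.0],
    [ 0.5, -2.0, -1.0, -0.5],
    [ 3.0,  1.0,  0.0, -2.0],
    [-1.0,  4.0,  2.5,  1.0],
]

activated = relu_map(conv_output)   # negatives become 0.0
pooled = max_pool_2x2(activated)    # strongest activation per 2x2 window
print(pooled)  # [[2.0, 0.0], [4.0, 2.5]]
```

Because ReLU never decreases as its input grows, pooling first and applying ReLU afterwards gives the same result on this example, and it needs fewer ReLU evaluations.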

In the next article, I am going to discuss Pooling vs Flattening in CNN. In this article, I explained Max Pooling and ReLU Activations in CNN. I hope you enjoyed it. Please post your feedback, suggestions, and questions about this article.