
Introduction to Data

In this article, I am going to give a brief introduction to Data. Please read our previous article, where we gave a brief introduction to Data Science. As part of this article, we are going to discuss the following topics.

- What is Data?

- Classification and objectives of Data Classification

- Types of Data

- Data Quality, Changes and Data Quality Issues

- What is Data Architecture

- Components of Data Architecture

- OLTP vs OLAP

- How is Data Stored?

What is Data?

Since the invention of computers, data has played a crucial role in computing, where it refers to information that is either stored or transmitted. So, what is data? Data is information of various kinds, usually held in a structured format. In simple terms, data is a collection of facts such as words, numbers, observations, or descriptions of things.

Classification of Data

Data classification is the process of organizing data into relevant categories so that it is easy to locate and retrieve whenever required. Classification is typically done by tagging data, which makes it easily searchable and trackable. Data can be grouped with other data that shares common characteristics.

“Classification is the process of arranging data into sequences according to their common characteristics or separating them into different related parts.” Prof. Secrist

Raw data is not easy to understand, and analysing it can be a very tedious task. With a well-planned data classification system, the information becomes straightforward to discover and retrieve. This is especially useful for legal discovery, risk management, and compliance purposes. Written data classification procedures and guidelines should specify what categories and criteria the organization will use to organize data and clarify the data stewardship duties of people within the company.

Once a data classification scheme has been designed, the security standards that specify proper handling practices for each category, and the storage criteria that determine the data’s lifecycle requirements, should be addressed.

Objectives of data classification

The primary goals of data classification are as follows:

- Consolidate a large amount of data in such a way that similarities and differences can be quickly identified.

- As a result, figures can be organized into sections based on shared characteristics.

- Comparison can be easily performed.

- Convenient to analyze data or part of data.

- Increases reliability of a specific set of data

- To highlight the most important aspects of the data in a flash.
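As a rough illustration of grouping data by a shared characteristic, the sketch below classifies a few records by one field. The records, the field names, and the `classify` helper are all hypothetical and exist only for this example.

```python
from collections import defaultdict

# Hypothetical records; "department" is the shared characteristic.
records = [
    {"name": "Asha", "department": "Sales"},
    {"name": "Ben", "department": "Finance"},
    {"name": "Chen", "department": "Sales"},
]


def classify(rows, key):
    """Group the names in `rows` into classes by the value of `key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["name"])
    return dict(groups)


by_department = classify(records, "department")
print(by_department)
```

Once the records are grouped, similarities and differences between the classes are easier to compare.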

Types of Data

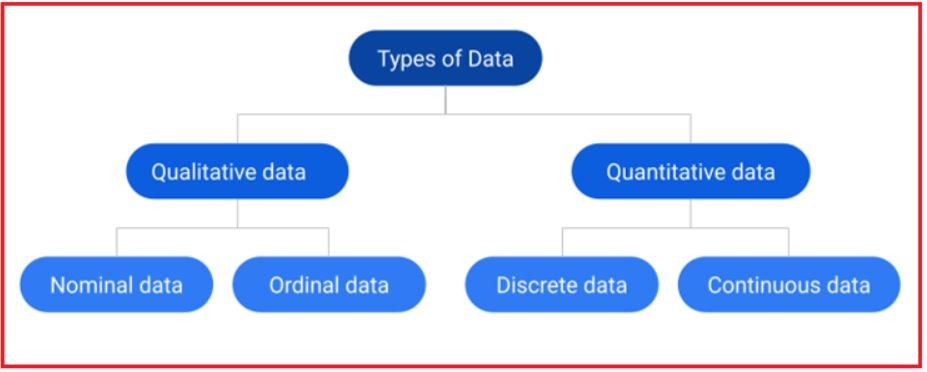

Data types are an important statistical concept that must be understood in order to apply statistical measurements correctly to your data and to draw valid conclusions from it. In data science, data is extracted, segregated, and presented in the form of statistics. During segregation, data is usually categorized by group or characteristic. The different types of data are explained below.

Understanding the various data types is a necessary prerequisite for performing Exploratory Data Analysis (EDA), because certain statistical measurements can only be applied to specific data types. Let’s discuss the above tree briefly:

Qualitative Data

Qualitative data is also known as categorical data. It cannot be counted and does not have a numerical value. It consists of characteristics, attributes, or descriptions that cannot be computed or calculated, expressed as categorical variables such as a person’s home town or gender. Some qualitative data is written with digits but still cannot be measured, for example a date of birth or a pin code. Qualitative data is further divided into:

Nominal Data: Nominal values are used to label variables that have no quantitative value because they represent discrete units. Consider them to be “labels.” It is worth noting that nominal data has no order. As a result, changing the order of its values has no effect on the meaning.

For example:

Question: What is your gender?

a: Male

b: Female

Ordinal Data: Ordinal data is qualitative data whose values are listed in a meaningful order. Each value may be tagged with a number to indicate its position, but these numbers are not used arithmetically: the values can be ranked and analyzed but not measured. Ordinal values denote discrete and ordered units. Ordinal data is thus nearly identical to nominal data, with the exception that the ordering is important.

For example: What is your highest educational qualification?

a: High School

b: Graduate

c: Post Graduate

d: Doctorate

It is important to note that the distinction between High School and Graduate is not the same as the distinction between Graduate and Post Graduate. The main limitation of ordinal data is that the size of the differences between values is not known. Ordinal scales are commonly used to assess non-numerical characteristics such as happiness, customer satisfaction, and so on.
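The following minimal sketch shows how ordinal values can be handled in code, using the qualification levels from the example question. The sample `responses` are made up for illustration. Only order-based operations (sorting, median) are used, because the gaps between levels are not known.

```python
from statistics import median_low

# Ordered levels from the example question; the index encodes rank only.
LEVELS = ["High School", "Graduate", "Post Graduate", "Doctorate"]
RANK = {level: i for i, level in enumerate(LEVELS)}

# Hypothetical survey responses.
responses = ["Graduate", "High School", "Doctorate", "Graduate", "Post Graduate"]

# Sorting relies only on the order of the levels, so it is meaningful here.
sorted_responses = sorted(responses, key=RANK.get)

# The median is also order-based; a mean of the ranks would wrongly
# assume that every step between levels has the same size.
median_level = LEVELS[median_low([RANK[r] for r in responses])]

print(sorted_responses)
print(median_level)
```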

Quantitative Data

Quantitative data is data that can be expressed and calculated numerically. For example, the number of boys in your class at school is a total count that can be calculated numerically. Quantitative data is further divided into:

Discrete Data: If the values are distinct and separate, we refer to the data as discrete. In other words, discrete data can only take on certain values. This kind of data consists of numbers that can be counted but not measured. For example, the number of days in a year or the age of an individual in completed years.

Continuous Data: Continuous data represents measurements, so its values cannot be counted but can be measured. A person’s height, for example, can be described using intervals on the real number line. Continuous data can take any value within a range, and its numeric values can be meaningfully broken into smaller units. The range itself may be bounded or unbounded, but in either case it contains uncountably many possible values.

Interval Data: Interval values represent ordered units that differ by the same amount. As a result, we refer to interval data when we have a variable that contains ordered numeric values and we know the exact differences between the values.

As an example, consider a feature that contains the temperature of a specific location in degrees Celsius. The issue with interval data is that there is no “true zero.” In our example, 0 °C does not mean that there is no temperature. We can add and subtract interval data, but we cannot meaningfully multiply, divide, or calculate ratios. Statistics that depend on ratios therefore cannot be used, because there is no true zero.

Ratio Data: Ratio values are also ordered units that differ by the same amount. Ratio values are similar to interval values, with the exception that they have an absolute zero. Height, weight, and length are all good examples.
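To make the difference between interval and ratio scales concrete, here is a small sketch using temperature. It assumes the readings are in degrees Celsius (an interval scale) and converts them to Kelvin (a ratio scale with an absolute zero). The two readings are made-up values.

```python
def celsius_to_kelvin(celsius):
    """Convert a Celsius reading to Kelvin, which has an absolute zero."""
    return celsius + 273.15


# Hypothetical readings on the Celsius (interval) scale.
morning_c, afternoon_c = 10.0, 20.0

# Differences are meaningful on an interval scale.
difference = afternoon_c - morning_c

# This ratio is arithmetically 2.0, but 20 °C is not "twice as hot" as 10 °C.
naive_ratio = afternoon_c / morning_c

# On the Kelvin (ratio) scale the same comparison is physically meaningful.
kelvin_ratio = celsius_to_kelvin(afternoon_c) / celsius_to_kelvin(morning_c)

print(difference, naive_ratio, round(kelvin_ratio, 4))
```

The Kelvin ratio is only slightly above 1, which shows how misleading the naive Celsius ratio is.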

Data Collection Types

Data can be classified into four types based on how it was collected:

- Observational Data

- Experimental Data

- Simulation Data

- Derived Data

The type of research data you collect may influence how you manage it. For example, data that is difficult or impossible to replace (for example, the recording of an event at a specific time and location) necessitates additional backup procedures to reduce the risk of data loss.

Observational Data

Observational data is obtained by observing a behaviour or activity. It is gathered through methods such as human observation, open-ended surveys, or the use of an instrument or sensor to monitor and record data, such as the use of sensors to monitor noise levels at the Minneapolis/St. Paul International Airport.

Experimental Data

Experimental data is collected through active intervention by the researcher, who changes a variable in order to produce and measure change or to create a difference. Experimental data usually allows the researcher to determine a causal relationship and can often be generalized to a larger population. This type of data is frequently reproducible, but it can be costly to do so.

Simulation Data

Simulation data is generated by using computer test models to simulate the operation of a real-world process or system over time. Examples include models that forecast weather, economic behaviour, chemical reactions, or seismic activity. This method is used to try to predict what might happen if certain conditions were met.

Derived Data

Derived data is created by transforming existing data points, often from different data sources, into new data using a transformation such as an arithmetic formula or aggregation. For example, area and population data from the Twin Cities metro area can be combined to generate population density data. While this type of data can usually be replaced if it is lost, it can be time-consuming (and possibly costly) to do so.
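A minimal sketch of deriving population density from two separate sources is shown below. The county names and figures are invented placeholders, not real Twin Cities data.

```python
# Hypothetical source 1: land area in square kilometres.
areas_km2 = {"County A": 1500.0, "County B": 400.0}

# Hypothetical source 2: population counts.
populations = {"County A": 1_200_000, "County B": 500_000}

# Derived data: people per square kilometre, computed from both sources.
density = {
    name: populations[name] / areas_km2[name]
    for name in areas_km2
}

print(density)
```

Because `density` can be recomputed from the two sources at any time, losing it costs time rather than information.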

Data Quality

Data quality describes how well data is fit for the organization’s purposes. High-quality data helps organizations make strategic decisions. The process of cleaning data and keeping its quality high is called Data Quality Management (DQM).

Characteristics of data quality

To determine the quality of data, the following are the factors that play a huge role.

- Data should be accurate, precise, and error-free.

- Availability of data is a major part of data quality. Data should be available to the right person at the right time.

- Data should not have gaps in it. The information should be complete.

- Data should be reliable. It should not be ambiguous, vague, or contradictory.

- Outdated data are useless. It’s a waste of time to use outdated data. So, the data should be provided on time.

Data Quality issues

Data quality is critical in business. You are aware of this. But do you know what it takes to provide high-quality data? We’ll look at how data quality issues can arise.

In a nutshell, data quality refers to a data set’s ability to serve whatever purpose a company intends to use it for. Sending marketing materials to customers could be one of those requirements. It could be researching the market in order to develop a new product feature. It could be keeping a database of customer data to assist with product support services, or any number of other objectives.

Here are six common ways for data quality errors to creep into your organization’s data operations, even if you generally follow best practises for managing and analysing data:

Manual Data Entry Errors:

Humans are prone to making mistakes, and even a small data set that was entered manually is likely to contain errors. Typos, data entered in the wrong field, missed entries, and other data entry errors are almost unavoidable.

OCR Errors:

Machines, like humans, can make mistakes when entering data. When organisations need to digitise large amounts of data quickly, they frequently rely on Optical Character Recognition, or OCR, technology. OCR technology automatically scans images and extracts text from them. It can be very useful when, for example, you want to enter thousands of paper-printed addresses into a digital database so that you can analyse them. The issue with OCR is that it is almost never perfectly accurate.

If you OCR thousands of lines of text, some characters or words will almost certainly be misinterpreted – zeroes that are interpreted as eights, for example, or proper nouns that are read as common words because the OCR tool fails to distinguish between capital and lowercase letters. Similar issues arise with other types of automated machine entry.

Lack of Complete Information:

When compiling a data set, you frequently run into the issue of not having all of the information for each entry available. A database of addresses, for example, may be missing zip codes for some entries because the zip codes could not be determined using the method used to compile the dataset.
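A simple completeness check along these lines might look like the sketch below. The address records, the `REQUIRED` field list, and the `missing_fields` helper are hypothetical.

```python
# Hypothetical address entries; two of them lack a zip code.
addresses = [
    {"street": "123 Main St", "city": "Springfield", "zip": "12345"},
    {"street": "45 Oak Ave", "city": "Riverton", "zip": ""},
    {"street": "9 Elm Rd", "city": "Lakeside", "zip": None},
]

REQUIRED = ["street", "city", "zip"]


def missing_fields(row, required):
    """Return the required fields that are absent or empty in `row`."""
    return [field for field in required if not row.get(field)]


# Map each incomplete entry to the fields it is missing.
incomplete = {}
for row in addresses:
    gaps = missing_fields(row, REQUIRED)
    if gaps:
        incomplete[row["street"]] = gaps

print(incomplete)
```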

Ambiguous Data:

When creating a database, you may discover that some of your data is ambiguous, leaving you unsure whether, how, and where to enter it. For example, if you are creating a phone number database, some of the numbers you want to enter may be longer than the standard ten digits in a phone number in the United States.

Duplicate Data:

You may discover that two or more data entries are nearly or entirely identical. For instance, suppose your database contains two entries for a John Smith who lives at 123 Main St. Based on this information, it’s difficult to tell whether these entries are simply duplicates (perhaps John Smith’s information was entered twice by mistake) or if two John Smiths live in the same house.
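One hedged way to surface such cases is to normalise each entry and count repeats, as in the sketch below. The entries and the `normalise` helper are illustrative assumptions. Note that the code can only flag candidates for review; it cannot decide whether two matching entries are the same person.

```python
from collections import Counter


def normalise(entry):
    """Lower-case the name and address and collapse repeated spaces."""
    name, address = entry
    return (" ".join(name.lower().split()), " ".join(address.lower().split()))


# Hypothetical database entries.
entries = [
    ("John Smith", "123 Main St"),
    ("john  smith", "123 main st"),
    ("Jane Doe", "9 Elm Rd"),
]

counts = Counter(normalise(entry) for entry in entries)

# Entries seen more than once are possible duplicates needing human review.
possible_duplicates = [key for key, seen in counts.items() if seen > 1]

print(possible_duplicates)
```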

Data Transformation Errors:

Converting data from one format to another can result in errors. As an example, suppose you have a spreadsheet that you want to convert to a comma-separated value, or CSV, file. Because data fields in CSV files are separated by commas, you may encounter problems when performing this conversion if some of the data entries in your spreadsheet contain commas.

Unless your data conversion tools are sufficiently intelligent, they will not be able to distinguish between a comma that is supposed to separate two data fields and one that is an internal part of a data entry. This is a simple example; things become much more complicated when complex data conversions are required, such as converting a mainframe database designed decades ago to NoSQL.
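The comma problem can be reproduced in a few lines. The sketch below compares a naive join and split with Python's standard `csv` module, which quotes fields that contain commas. The sample row is made up.

```python
import csv
import io

# Hypothetical spreadsheet row whose first field contains a comma.
row = ["Smith, John", "123 Main St", "Springfield"]

# Naive conversion: the internal comma is mistaken for a field separator.
naive_line = ",".join(row)
naive_fields = naive_line.split(",")

# The csv module quotes the field, so the row survives a round trip.
buffer = io.StringIO()
csv.writer(buffer).writerow(row)
quoted_line = buffer.getvalue()
round_trip = next(csv.reader(io.StringIO(quoted_line)))

print(len(naive_fields), len(round_trip))
print(quoted_line.strip())
```

The naive approach yields four fields instead of three, while the quoted version reads back exactly as it was written.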

Correcting data quality errors

These are the kinds of data quality errors that are extremely difficult to avoid. In fact, the best way to approach data quality issues is to accept them as unavoidable.

Having data quality issues does not mean that your data management process is flawed. The types of data issues described above are impossible to avoid entirely, even with the best-run data operation.

What is Data Architecture?

A data architecture is a set of rules, policies, standards, and models that govern and define the type of data collected, as well as how it is used, stored, managed, and integrated within an organisation and its database systems. It offers a formal approach to creating and managing data flows and how they are processed across an organization’s IT systems and applications.

Data architecture is a broad term that encompasses all processes and methodologies that address data at rest, data in motion, data sets, and how these relate to data dependent processes and applications. It includes the primary data entities, data types, and sources required by an organisation for data sourcing and management.

Enterprise data architecture is made up of three distinct layers or processes:

- Conceptual/business model: Includes all data entities and provides a conceptual or semantic data model.

- Logical/system model: Provides a logical data model and defines how data entities are linked.

- Physical/technology model: Provides a data mechanism for a specific process and functionality, or describes how the actual data architecture is implemented on the underlying technology infrastructure.

Benefits of Data Architecture

Following are some of the benefits of having modern data architecture.

- Organizations have scattered data that needs to be accurate to get business insights. To organize and integrate this data, good data architecture is required. With well-designed architecture, your organization may be on the way to innovation and creativity.

- Different data formats are a challenge for organizations in today’s market. A good data architecture makes it possible to work with these different data formats.

Components of Data Architecture

For better understanding of the components of data architecture, please have a look at the below image.

The common components of data architecture are:

Data Pipeline:

The data pipeline describes how data moves from point A to point B; from collection to refinement; and from storage to analysis. It covers the entire data movement process, from where the data is collected, such as on an edge device, to where and how it is moved, such as via data streams or batch processing, to where the data is moved to, such as a data lake or application.

The data pipeline should seamlessly transport data to its destination, allowing the business to flow smoothly. If the pipeline is clogged, quarterly reports may be missed, KPIs may go unnoticed, user behaviour may not be processed, ad revenue may be lost, and so on. Good pipelines can be an organization’s lifeblood.

In the past, trusted team members were responsible for passing this information from one location to another. Today, reliable software systems move the data around. A good pipeline will transport your data from its origin to its destination in a timely and secure manner.
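The stages described above (collection, refinement, storage, analysis) can be sketched as a chain of small functions. This is a toy illustration under stated assumptions: the function names, the sample readings, and the in-memory list standing in for storage are all hypothetical, and real pipelines usually rely on dedicated tooling.

```python
def collect():
    """Hypothetical source: raw readings as strings, one of them malformed."""
    return ["21.5", "22.0", "n/a", "23.5"]


def refine(raw_values):
    """Keep only the values that parse as numbers."""
    cleaned = []
    for value in raw_values:
        try:
            cleaned.append(float(value))
        except ValueError:
            continue  # drop malformed readings
    return cleaned


def store(values, destination):
    """Append refined values to the destination (a list standing in for storage)."""
    destination.extend(values)
    return destination


def analyse(stored_values):
    """Compute the average of the stored readings."""
    return sum(stored_values) / len(stored_values)


storage = []
average = analyse(store(refine(collect()), storage))
print(storage, round(average, 2))
```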

Cloud Storage

Cloud computing covers a variety of classifications, types, and architecture models. This networked computing model has changed the way we work, and you have probably already used the cloud. But the cloud isn’t just one thing; cloud computing can be divided into three broad categories:

- The term “public cloud” refers to cloud computing that is delivered over the internet and shared by multiple organisations.

- Private cloud computing is cloud computing that is solely dedicated to your organisation.

- Any environment that uses both public and private clouds is referred to as a hybrid cloud.

APIs

An API (application programming interface) is a method of communication between a requester and a host, typically accessed over a network at an address such as a URL or IP address. An API generally serves one of two purposes: it shares some information, or it provides a function.

In short, the term “microservice” refers to the architecture of the software, whereas “API” refers to how the microservice is exposed to a consumer.

AI and ML Models

Artificial intelligence is a term used to describe the process of imbuing an entity with intelligence. AIs are created by humans. Engineers can create an AI that acts as the phone system’s operator instead of hiring teams of people to answer phone calls. To handle all incoming phone calls, an artificial intelligence can be created and used.

Data Streaming

Businesses may have thousands of data sources that are piped to various destinations. The data, which is typically in small chunks, can be processed using stream processing techniques.

Data streaming was previously reserved for only a few industries, such as media streaming and stock exchange financial values. It is now being used in every business. Data streams enable organisations to process data in real-time, allowing them to monitor all aspects of their operations.
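As a minimal sketch of stream processing, the example below consumes values one at a time and updates a running average without ever holding the whole data set. The `event_stream` generator is a hypothetical stand-in for a real data source.

```python
def event_stream():
    """Hypothetical source that yields small chunks of data one at a time."""
    for value in [3, 5, 4, 8]:
        yield value


def running_average(stream):
    """Yield the average of all values seen so far after each new value."""
    total = 0
    for count, value in enumerate(stream, start=1):
        total += value
        yield total / count


averages = list(running_average(event_stream()))
print(averages)
```

Each result is available as soon as its input arrives, which is what makes real-time monitoring possible.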

Kubernetes

Google open-sourced the Kubernetes project in 2014, building on more than a decade of experience running production workloads at scale. Kubernetes allows you to run dynamically scaling, containerized applications while also managing them via an API.

Kubernetes has emerged as the industry standard for running containerized applications in the cloud, with all of the major cloud providers (AWS, Azure, Google Cloud, IBM, and Oracle) now offering managed Kubernetes services.

Cloud Computing:

Cloud computing refers to storing and accessing data and computing services over the internet. The data is kept on remote servers rather than on the hard disk of your personal computer. The data can be anything, such as files, images, documents, audio, video, and more.

It is the on-demand availability of computer services like servers, storage, networking, databases, etc. The main purpose of cloud computing is to give access to data centers to many users. In cloud computing, users can access data from a remote server.

Real Time Analytics

Data analytics is a broad term that encompasses the concept and practise (or, perhaps, science and art) of all data-related activities. The primary goal is for data experts, such as data scientists, engineers, and analysts, to make it simple for the rest of the organisation to access and comprehend the findings drawn from the data.

Data that is left unprocessed has no value. Rather, it is what you do with that data that adds value. Data analytics encompasses all of the steps you take, both manually and automatically, to discover, interpret, visualise, and tell the story of patterns in your data to drive business strategy and outcomes.

Difference between OLTP and OLAP

The two terms look similar but refer to different kinds of systems. Online transaction processing (OLTP) captures, stores, and processes data from transactions in real-time. Online analytical processing (OLAP) uses complex queries to analyze aggregated historical data from OLTP systems.

- The difference between OLTP and OLAP is that OLTP is an online transaction system, whereas OLAP is an online data retrieval and analysis system.

- Online transactional data becomes the data source for OLTP. However, the various OLTP databases serve as the source of data for OLAP.

- The primary operations of OLTP are insert, update, and delete, whereas the primary operation of OLAP is to extract multidimensional data for analysis.

- OLTP transactions are short but frequent, whereas OLAP transactions are longer and less frequent.

- OLAP transactions take longer to process than OLTP transactions.

- OLAP queries are more complex than OLTP queries.

- Tables in an OLTP database are typically normalised (often to 3NF), whereas tables in an OLAP database are not required to be normalised.

- Because OLTP systems execute transactions frequently, a transaction that fails midway can harm the data’s integrity, so an OLTP system must enforce data integrity. In OLAP, data is modified far less frequently, so data integrity is less of a concern.

| Parameters | OLAP | OLTP |
| --- | --- | --- |
| Functionality | OLAP is an online database query management system | OLTP is an online database modifying system |
| Characteristic | It is characterized by a large volume of data | It is characterized by large numbers of short online transactions |
| Method | It uses the data warehouse | It uses traditional DBMS |
| Source | Different OLTP databases become the source of data for OLAP | Its transactions are the sources of data |
| Data Integrity | The OLAP database does not get frequently modified. Hence, data integrity is not an issue | OLTP database must maintain data integrity constraint |
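The contrast between the two workloads can be sketched with Python's built-in `sqlite3` module. This is only an illustration: the `orders` table and its values are hypothetical, and a real OLAP workload would normally run on a separate data warehouse rather than on the transactional database itself.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)

# OLTP-style work: short, frequent write transactions.
# Each `with conn:` block commits on success and rolls back on error.
for region, amount in [("North", 120.0), ("South", 80.0), ("North", 30.0)]:
    with conn:
        conn.execute(
            "INSERT INTO orders (region, amount) VALUES (?, ?)",
            (region, amount),
        )
with conn:
    conn.execute("UPDATE orders SET amount = ? WHERE id = ?", (100.0, 2))

# OLAP-style work: a read-only query that aggregates the accumulated data.
totals = conn.execute(
    "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
).fetchall()

print(totals)
conn.close()
```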

How is Data Stored?

Computer data storage is a complicated subject, but it can be divided into three basic processes. First, data is converted to simple numbers that a computer can easily store. Second, the numbers are stored in the computer by hardware. Third, programs or software organise the numbers, move them to temporary storage, and manipulate them.

Binary Numbers

In a computer, every piece of data is stored as a number. Letters, for example, are converted to numbers, and photographs to a large set of numbers indicating the colour and brightness of each pixel. After that, the numbers are converted to binary numbers. Traditional decimal numbers use ten digits, ranging from 0 to 9, to represent all possible values, whereas binary numbers use only two digits, 0 and 1.
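The conversion from letters to numbers to binary can be seen directly in Python. The sketch below uses the built-in `ord` function to get each character's numeric code and `format` to write it as eight binary digits. The text "Hi" and the `to_binary` helper are just examples.

```python
def to_binary(text):
    """Return each character's numeric code written as 8 binary digits."""
    return [format(ord(character), "08b") for character in text]


codes = [ord(character) for character in "Hi"]
bits = to_binary("Hi")

print(codes)  # [72, 105]
print(bits)   # ['01001000', '01101001']
```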

Primary Storage Devices

The hard disk drive has traditionally been the primary data storage device in most computers. It consists of a spinning disk or disks with magnetic coatings and heads that can read and write magnetic data in the same way that cassette tapes do. In fact, early home computers stored data on cassette tapes. Binary numbers are represented on the disk by a series of tiny areas that are magnetised either north or south.

Temporary Storage

Long-term data storage is accomplished through the use of drives, disks, and USB keys. The computer also has numerous areas for short-term electronic data storage. Small amounts of data are temporarily stored in the keyboard, the printer, and parts of the motherboard and processor. Larger amounts of data are stored temporarily in memory chips and video cards.

In the next article, I am going to give you a brief introduction to Big Data. Here, in this article, I tried to give you an overview of Data, and I hope you enjoyed this Introduction to Data article.
