
Dropout Layer in CNN

In this article, I am going to discuss the Dropout Layer in CNN. Please read our previous article where we discussed Pooling vs Flattening in CNN.

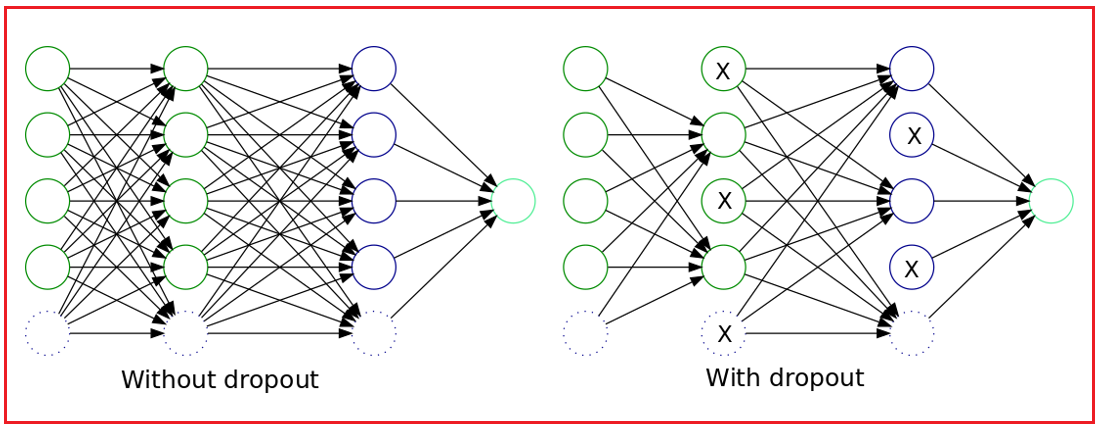

A Dropout layer is a regularization layer that is widely used in CNNs. During training, it acts as a random mask: on each forward pass it sets a randomly chosen fraction of neuron outputs to zero, so those neurons contribute nothing to the subsequent layer, while all other neurons keep working as usual. If we apply a Dropout layer to the input vector, some of the input features are zeroed out; if we apply it to a hidden layer, some hidden neurons are zeroed out. At inference time, dropout is switched off and every neuron is used.
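The masking described above can be sketched in a few lines of plain Python. This is a minimal illustration, not how any particular framework implements it: the function name `dropout`, its parameters, and the sample values are all hypothetical. It also assumes the common "inverted dropout" convention, in which the surviving activations are scaled by `1 / (1 - rate)` during training so that their expected sum stays the same; the article itself does not specify this detail.

```python
import random


def dropout(activations, rate=0.5, training=True, rng=None):
    """Apply inverted dropout to a list of activations (illustrative sketch).

    rate     -- probability of zeroing each activation (hypothetical parameter name)
    training -- when False, the input is returned unchanged
    rng      -- optional random.Random instance, for reproducible masks
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in the range [0, 1)")
    if not training or rate == 0.0:
        # Dropout is only active during training.
        return list(activations)

    rng = rng or random.Random()
    keep_prob = 1.0 - rate
    # Each activation is kept with probability keep_prob and zeroed otherwise.
    # Kept values are scaled by 1 / keep_prob (assumed inverted-dropout convention).
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


hidden = [0.2, 1.5, 0.7, 0.9, 1.1, 0.4]  # made-up hidden-layer outputs
print(dropout(hidden, rate=0.5, rng=random.Random(0)))  # some entries zeroed, the rest doubled
print(dropout(hidden, training=False))                  # unchanged at inference time
```

Calling the function repeatedly in training mode produces a different mask each time, which is what prevents the next layer from depending on any single neuron.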

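Dropout layers are important in the training of CNNs because they reduce overfitting to the training data. Without them, the network can come to depend on a small group of neurons that have adapted to quirks of the training samples, and it may memorize those samples instead of learning features that generalize. Because a different random subset of neurons is dropped at every training step, the network cannot rely on any single neuron and is pushed to learn more robust, redundant features: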

Let us imagine that during training, we show a CNN ten images of a circle one after the other. A network that overfits may simply memorize those ten images, so if we later show it an image of a square, it has no useful notion of straight lines and is likely to give a poor prediction. Incorporating Dropout layers into the network's architecture makes this kind of memorization harder, although dropout cannot replace training data that actually contains the shapes we want the network to recognize.

Strides and Zero Padding

Two ideas that are frequently used in Convolutional Neural Networks (ConvNets) are strides and zero padding.

Stride:

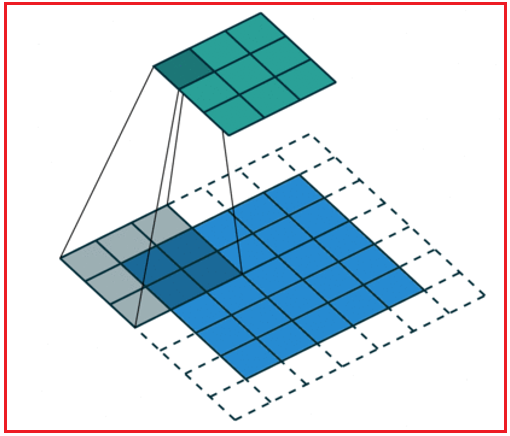

A ConvNet's convolutional layers apply a set of filters to the input data in a sliding-window fashion, computing one output value at each window position. The stride is the number of positions (for example, pixels) that the filter moves between one window position and the next.

The stride controls how far the filters move at each step as they slide across the input data. With a larger stride, the filter takes bigger steps and is applied at fewer positions, which leads to a smaller output size. With a smaller stride, the filter is applied at more positions, which results in a larger output size. Stride is a hyperparameter that can be adjusted to manage the ConvNet's computational load and output size.
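The relationship between stride and output size can be checked with a short calculation. The sketch below assumes no padding and uses the standard formula `(input_size - kernel_size) // stride + 1` for one spatial axis; the function name `conv_output_size` and the example sizes are illustrative choices, not something defined in this article.

```python
def conv_output_size(input_size, kernel_size, stride=1):
    """Number of filter positions along one axis, assuming no padding."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    if kernel_size > input_size:
        raise ValueError("kernel does not fit inside the input")
    # The filter starts at position 0 and moves `stride` steps at a time
    # until it no longer fits inside the input.
    return (input_size - kernel_size) // stride + 1


# A 7-wide input with a 3-wide filter (hypothetical sizes):
for stride in (1, 2, 3):
    print(f"stride={stride} -> output size {conv_output_size(7, 3, stride)}")
# stride=1 -> output size 5
# stride=2 -> output size 3
# stride=3 -> output size 2
```

For a 2D image, the same calculation is applied separately to the height and the width.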

Zero Padding:

With zero padding, a border of zeros is added around the input data before the filters are applied in a ConvNet. This increases the spatial size of the data that the filters see, which is useful for two main reasons.

One reason to adopt zero padding is to preserve the spatial dimensions of the input data. Without zero padding, a filter larger than 1×1 cannot be centered on the pixels at the edges of the input, so the output is smaller than the input and keeps shrinking with every convolutional layer. Placing a border of zeros around the input lets the filter be applied at the edge positions as well, and with a stride of 1 and a suitable border width, the output keeps the same spatial size as the input.

Zero padding also helps you manage the output's size more generally. Even if the stride and filter size alone would produce a different size, it is often possible to obtain an output of a specific size by choosing the width of the zero border around the input data. Like stride, zero padding is a hyperparameter that can be adjusted to manage the ConvNet's computational load and output size.
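To make the effect of zero padding concrete, here is a small sketch in plain Python. It pads a 2D list with zeros and computes the output size along one axis with the standard formula `(input_size + 2 * pad - kernel_size) // stride + 1`. The helper names `zero_pad` and `padded_output_size` and the sample image are hypothetical and only serve as an illustration.

```python
def zero_pad(image, pad):
    """Return a copy of a 2D list with `pad` rows and columns of zeros on every side."""
    width = len(image[0]) + 2 * pad
    top = [[0] * width for _ in range(pad)]
    middle = [[0] * pad + list(row) + [0] * pad for row in image]
    bottom = [[0] * width for _ in range(pad)]
    return top + middle + bottom


def padded_output_size(input_size, kernel_size, stride=1, pad=0):
    """Output size along one axis when `pad` zeros are added on each side."""
    return (input_size + 2 * pad - kernel_size) // stride + 1


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]  # made-up 3x3 input

for row in zero_pad(image, 1):
    print(row)
# [0, 0, 0, 0, 0]
# [0, 1, 2, 3, 0]
# [0, 4, 5, 6, 0]
# [0, 7, 8, 9, 0]
# [0, 0, 0, 0, 0]

print(padded_output_size(5, 3, stride=1, pad=0))  # 3: the output shrinks without padding
print(padded_output_size(5, 3, stride=1, pad=1))  # 5: a 1-wide border keeps the size
```

With a stride of 1 and an odd filter size `k`, a border of `(k - 1) // 2` zeros is what keeps the output the same size as the input.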

In the next article, I am going to discuss Building a Real World Convolutional Neural Network for Image Classification. Here, in this article, I try to explain the Dropout Layer in CNN, along with strides and zero padding. I hope you enjoy this Dropout Layer in CNN article. Please post your feedback, suggestions, and questions about this article.
