
Lifecycle of a Data Science Project

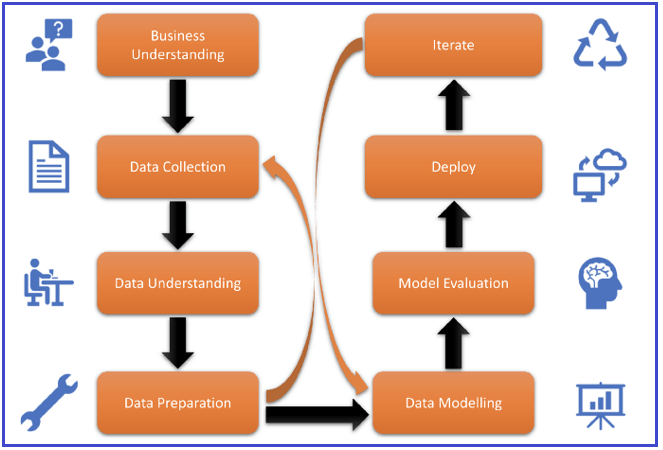

In this article, I am going to discuss the Lifecycle of a Data Science Project. Please read our previous article, where we discussed Why Data Scientists are in Demand. At the end of this article, you will understand the Lifecycle of a Data Science Project.


Data science has come a long way since the term was coined in the 1990s. When tackling a data science problem, experts adhere to a set structure. Carrying out data science projects has almost become an algorithm.

All too often, the temptation is to skip the methodology and get right to solving the problem. However, doing so undermines our best intentions by failing to lay a solid foundation for the entire effort. Following the steps, on the other hand, usually gets us closer to solving the problem we set out to address.

1. Business Understanding

Although access to data and computing power has increased dramatically in the last decade, an organization’s success still depends largely on the quality of the questions it asks of its data. The amount of data collected and the compute power allocated are less important differentiators.

IBM data scientist John B. Rollins refers to this as the Business Understanding step. In the beginning, Google would have asked, “What constitutes relevant search results?” As Google expanded its search engine business, it began to display advertisements. The right question then became, “Which ads are relevant to a user?” Other companies might ask questions such as these:

- Amazon: How much compute (EC2) and storage (S3) capacity could we lease out during lean periods?

- Uber: What percentage of the time do our drivers actually drive? How consistent is their income?

- Oyo Hotels: What is the average occupancy of mediocre hotels?

- Alibaba: What are our warehouse profits per square foot?

Questions like these must be framed before we can embark on a data science journey. After we’ve asked the correct question, we’ll move on to data collection.

2. Data Collection

If the recipe is to ask the right questions, then data is your ingredient. Once you have a clear understanding of the business, data collection becomes a matter of breaking the problem down into smaller components. The data scientist must understand which ingredients are required, how to obtain and collect them, and how to prepare the data to achieve the desired result.

For example, the data required by Amazon would include:

- The number of compute servers that were idle during the lean period

- The number of storage servers that were idle during the lean period

- The amount of money spent on machine maintenance

- The rate at which the number of startups in the United States was growing at the time

Based on this data, Amazon could readily estimate how much capacity it could lease out. In reality, when Amazon answered these questions, Bezos learned that spare capacity of this kind met a critical need. This resulted in the creation of AWS.

How to Find and Collect?

Amazon engineers were tasked with keeping track of server operation logs. The company proceeded only after conducting market research to determine how many people would benefit from pay-per-use servers.

3. Data Understanding and Preparation

We’ve already gathered some information. We learn more about the data in this step and prepare it for further analysis. The data understanding section of the data science methodology provides an answer to the following question: Is the information you gathered representative of the problem to be solved?

Suppose Uber wanted to know whether people would be interested in becoming drivers for them, and suppose they gathered the following information for this purpose:

- Their current earnings

- The percentage cut that cab companies charge them

- The number of rides they complete

- The amount of time they spend sitting idle

- Fuel prices

While items 1–4 help Uber understand more about the drivers, item 5 doesn’t really explain anything about the drivers. Fuel costs have an impact on Uber’s overall model, but they don’t help answer the original question.

To better understand the data, many people look at summary statistics such as the mean and median. People also plot the data and examine its distribution using tools such as histograms, spectrum analysis, and population distribution plots.
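As a rough illustration of this step, the sketch below computes a few summary statistics and a simple text histogram using only the Python standard library. The `weekly_earnings` values and the `text_histogram` helper are made up for this example; they are not real Uber data or part of any library.

```python
import statistics
from collections import Counter

# Hypothetical weekly earnings (in dollars) for a handful of drivers.
weekly_earnings = [420, 455, 390, 610, 455, 480, 1250, 430]

mean_earnings = statistics.mean(weekly_earnings)
median_earnings = statistics.median(weekly_earnings)
stdev_earnings = statistics.stdev(weekly_earnings)


def text_histogram(values, bin_width):
    """Group values into fixed-width bins and return {bin_start: count}."""
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))


histogram = text_histogram(weekly_earnings, bin_width=200)

print(f"mean={mean_earnings:.2f} median={median_earnings:.2f} "
      f"stdev={stdev_earnings:.2f}")
for bin_start, count in histogram.items():
    # One '#' per driver whose earnings fall into this bin.
    print(f"{bin_start:>5} to {bin_start + 199:<5} {'#' * count}")
```

In this toy data the mean is noticeably higher than the median because one driver earns far more than the rest. That kind of gap is exactly what a histogram makes visible at a glance.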

We prepare our data for further analysis once we have a better understanding of it. Preparation typically entails the following steps.

- Handling missing data

- Correcting incorrect values

- Removing duplicates

- Organizing the data so that it can be fed into an algorithm

- Feature engineering
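The preparation steps above can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the `raw_records` data, the field names, the choice to treat a negative ride count as missing, and the choice to fill gaps with the median. A real project would pick these rules based on the data and the business question.

```python
from statistics import median

# Hypothetical raw driver records; None marks a missing value.
raw_records = [
    {"driver_id": 1, "rides": 40, "idle_hours": 10.0, "earnings": 400.0},
    {"driver_id": 2, "rides": 55, "idle_hours": None, "earnings": 520.0},
    {"driver_id": 2, "rides": 55, "idle_hours": None, "earnings": 520.0},  # duplicate
    {"driver_id": 3, "rides": -5, "idle_hours": 20.0, "earnings": 300.0},  # impossible value
    {"driver_id": 4, "rides": 30, "idle_hours": 14.0, "earnings": 330.0},
]


def prepare(records):
    # Remove duplicates, keeping the first record seen for each driver_id.
    seen, unique = set(), []
    for rec in records:
        if rec["driver_id"] not in seen:
            seen.add(rec["driver_id"])
            unique.append(dict(rec))  # copy so the raw data stays untouched

    # Correct incorrect values: a negative ride count is treated as missing.
    for rec in unique:
        if rec["rides"] is not None and rec["rides"] < 0:
            rec["rides"] = None

    # Handle missing data: fill gaps with the median of the known values.
    for field in ("rides", "idle_hours"):
        fill = median(r[field] for r in unique if r[field] is not None)
        for rec in unique:
            if rec[field] is None:
                rec[field] = fill

    # Feature engineering: derive earnings per ride from two raw columns.
    for rec in unique:
        rec["earnings_per_ride"] = rec["earnings"] / rec["rides"]

    # Organize the data as numeric rows that an algorithm can consume.
    return [[r["rides"], r["idle_hours"], r["earnings_per_ride"]] for r in unique]


features = prepare(raw_records)
for row in features:
    print(row)
```

Note that the order matters in this sketch: duplicates are removed before the median is computed, so a repeated record does not skew the fill value.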

Data preparation is analogous to washing vegetables to remove surface chemicals. Together, data collection, data understanding, and data preparation can account for 70% to 90% of total project time.

4. Modelling

Modelling is the stage in the data science methodology where the data scientist gets to taste the sauce and determine whether it’s spot on or needs more seasoning. Data modelling is used to discover patterns or behaviours in data. These patterns can help us in one of two ways:

- Descriptive modelling, such as a recommender system that infers that a person who liked the movie The Matrix will also like the movie Inception.

- Predictive modelling, which involves making predictions about future trends. Linear regression, for example, can help us predict stock exchange values.
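To make the linear regression example concrete, here is a minimal sketch of an ordinary least squares fit of a straight line, written without any libraries. The `days` and `closing_values` numbers are invented and deliberately clean; real market data is far noisier, and a straight line is rarely an adequate model for it.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums (the 1/n factors cancel out).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: day number versus the closing value of an index.
days = [1, 2, 3, 4, 5]
closing_values = [102.0, 104.0, 106.0, 108.0, 110.0]

slope, intercept = fit_line(days, closing_values)
forecast_day_6 = slope * 6 + intercept

print(f"slope={slope:.2f} intercept={intercept:.2f} forecast={forecast_day_6:.2f}")
```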

In the world of machine learning, modelling is divided into three stages: training, validation, and testing. If the mode of learning is unsupervised, these stages change. In any case, once the data has been modelled, we can derive insights from it. This is the point at which we can finally begin evaluating our entire data science system.
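One common way to support the three stages is to split the prepared data into three separate sets before any model is fitted. The sketch below shows one way to do that; the `split_dataset` helper, the 70/15/15 proportions, and the fixed seed are assumptions for illustration rather than a prescribed standard.

```python
import random


def split_dataset(rows, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle rows and split them into training, validation, and test sets."""
    shuffled = list(rows)  # copy so the caller's data keeps its order
    random.Random(seed).shuffle(shuffled)  # seeded for reproducible splits

    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains
    return train, validation, test


rows = list(range(100))  # stand-in for 100 prepared records
train, validation, test = split_dataset(rows)
print(len(train), len(validation), len(test))
```

The training set is used to fit the model, the validation set to compare candidate models or settings, and the test set is held back until the end so that the final performance estimate is not influenced by earlier choices.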

Model evaluation takes place at the end of the modelling process and looks at two things:

- Accuracy: How well the model performs, i.e. how accurately it describes the data.

- Relevance: Does it provide an answer to the original question you set out to answer?
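Accuracy can be quantified in many ways. As a small sketch for a numeric prediction task, the code below computes two widely used measures, the mean absolute error and the coefficient of determination (R squared), on made-up `actual` and `predicted` values. Relevance, by contrast, is a judgement about the business question and cannot be computed this way.

```python
def mean_absolute_error(actual, predicted):
    """Average size of the prediction errors, in the units of the target."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def r_squared(actual, predicted):
    """Share of the variance in `actual` that the predictions explain."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


# Hypothetical held-out values and the model's predictions for them.
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 12.0, 13.0, 17.0]

mae = mean_absolute_error(actual, predicted)
r2 = r_squared(actual, predicted)
print(f"MAE={mae:.2f} R^2={r2:.2f}")
```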

5. Deployment and Iteration

Finally, all data science projects must be put into practice in the real world. The deployment could be via an Android or iOS app, similar to CRED, via a web app, similar to moneycontrol.com, or as enterprise software, similar to IBM Watson.

Data science projects follow an iterative process. You continue to repeat the various steps until you have fine-tuned the methodology to your specific case. As a result, the majority of the preceding steps will occur concurrently. Python and R are the most popular data science programming languages.

Data Acquisition

Data acquisition applications are typically controlled by software programmes written in general-purpose programming languages such as Assembly, BASIC, C, C++, C#, Fortran, Java, LabVIEW, Lisp, Pascal, and others. Stand-alone data acquisition systems are commonly called data loggers.

There are also open-source software packages that include all of the tools required to collect data from various, typically specific, hardware devices. These tools come from the scientific community, where complex experiments necessitate quick, flexible, and adaptable software. Those packages are typically tailor-made, but more general DAQ packages, such as the Maximum Integrated Data Acquisition System, can be easily customised.

In the next article, I am going to discuss the Open Sources of Data. Here, in this article, I tried to explain the Life Cycle of a Data Science Project in detail, and I hope you enjoyed this Life Cycle of a Data Science Project article.