Deep Neural Networks

In this article, I am going to discuss Deep Neural Networks. Please read our previous article where we discussed Gradient Descent in Artificial Neural Networks.

A deep neural network (DNN), or deep net for short, is a neural network with several layers between its input and output, typically at least two hidden layers. Each layer applies a learned transformation to the output of the layer before it, which lets the network model complex, nonlinear relationships in its input.

Deep neural networks are easiest to understand as the result of an evolution. A few things had to be built before deep nets could exist.

Machine learning had to be developed first. ML is a framework for automating the fitting of statistical models, such as a linear regression model, with algorithms that improve prediction accuracy. A model is a single function that makes predictions about something, and those predictions have some degree of accuracy. A model that learns (hence machine learning) takes its incorrect predictions and adjusts its weights to produce a model with fewer errors.
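To make the idea of "adjusting weights to reduce errors" concrete, here is a minimal sketch of a linear regression model trained with gradient descent in plain Python. The data, the learning rate, and the function names are assumptions chosen for illustration only.

```python
# Toy data generated from y = 2x + 1 (made-up values for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def mean_squared_error(w, b):
    """Average squared gap between the predictions w*x + b and the targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(steps=2000, learning_rate=0.05):
    """Repeatedly nudge w and b in the direction that reduces the error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


error_before = mean_squared_error(0.0, 0.0)
w, b = train()
error_after = mean_squared_error(w, b)
print(f"w={w:.3f}, b={b:.3f}, error {error_before:.3f} -> {error_after:.6f}")
```

The model starts with both weights at zero, so its first predictions are all wrong. Every step uses those errors to correct the weights, and the fitted values end up close to the slope 2 and intercept 1 that generated the data.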

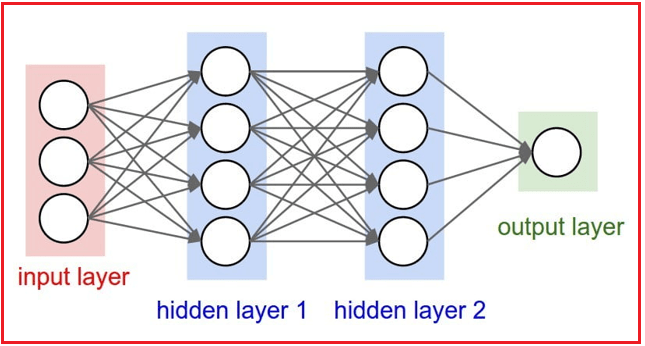

Artificial neural networks (ANNs) grew out of this learning process. An ANN uses a hidden layer to store and assess how important each input is to the output. The hidden layer keeps track of the significance of each input and also captures the significance of combinations of inputs.
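As a rough sketch of what a hidden layer computes, the example below runs one forward pass through a tiny network with a single hidden layer. The weights are hand-picked, hypothetical numbers, and the sigmoid activation is an assumption; a real network would learn its weights during training.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """One layer: each node takes a weighted sum of all inputs, then squashes it."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Hypothetical, hand-picked weights: 2 inputs -> 3 hidden nodes -> 1 output.
hidden_weights = [[0.5, -0.4], [0.9, 0.1], [-0.3, 0.8]]
hidden_biases = [0.0, -0.2, 0.1]
output_weights = [[1.2, -0.7, 0.5]]
output_biases = [0.05]

inputs = [1.0, 2.0]
hidden = dense(inputs, hidden_weights, hidden_biases)
output = dense(hidden, output_weights, output_biases)
print("hidden activations:", hidden)
print("network output:", output)
```

Each row of `hidden_weights` is the set of importances one hidden node assigns to the inputs. Because every hidden node sees all of the inputs, it can respond to combinations of them rather than to each input in isolation.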

Deep neural nets build on this ANN component. The reasoning goes as follows: if the hidden layer works so well at refining a model, because each of its nodes forms associations and grades how important the inputs are to the output, why not stack more of these layers on top of each other and gain even more from them?

A deep net therefore contains several hidden layers. A model is said to be “deep” when it has several such layers.

But is a substantial depth always required?

Depth is a crucial question to examine because great depth has drawbacks. For instance, layers must be evaluated one after another, so a deeper network adds sequential processing, increases latency, and is harder to parallelize. As a result, very deep networks are less suitable for applications that demand quick response times.
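The sequential cost of depth can be sketched with a simple forward pass. In the example below, each layer needs the previous layer's output before it can start, so the number of dependent steps grows with the number of layers. The layer width, the random weights, and the tanh activation are assumptions made for illustration.

```python
import math
import random


def make_layer(n_inputs, n_nodes, rng):
    """Random weights and zero biases for one layer (illustrative initialisation)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_nodes)]
    biases = [0.0] * n_nodes
    return weights, biases


def forward(inputs, layers):
    """Run the layers one after another; return the output and the step count."""
    activations = inputs
    sequential_steps = 0
    for weights, biases in layers:
        # This layer cannot start until the previous layer's output exists.
        activations = [
            math.tanh(sum(w * a for w, a in zip(node_w, activations)) + b)
            for node_w, b in zip(weights, biases)
        ]
        sequential_steps += 1
    return activations, sequential_steps


rng = random.Random(0)
width = 4
shallow = [make_layer(width, width, rng) for _ in range(2)]
deep = [make_layer(width, width, rng) for _ in range(50)]

x = [0.1, -0.2, 0.3, 0.4]
shallow_out, shallow_steps = forward(x, shallow)
deep_out, deep_steps = forward(x, deep)
print(shallow_steps, deep_steps)  # prints "2 50"
```

The nodes inside a single layer are independent of each other and could be computed in parallel, but the layers themselves form a chain. The 50-layer network therefore needs 50 dependent steps no matter how much hardware is available.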

Why do Deep Networks give better accuracy?

Deep nets can improve a model’s accuracy. They enable a model to take a collection of inputs and produce a result. In most ML frameworks, using a deep net takes little more than one line of code per layer, copied and pasted as needed; you type 2 or 2000 to tell the model to use two or 2,000 nodes in a layer.
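To show how much a single number in the layer definition matters, here is a small sketch that counts the trainable parameters of a fully connected network from a list of layer sizes. The `count_parameters` helper and the input and output sizes are hypothetical and are not taken from any particular ML framework.

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network with these layer sizes."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + one bias per node
    return total


n_inputs, n_outputs = 10, 1  # assumed sizes for illustration

# Two hidden layers of 2 nodes each versus two hidden layers of 2,000 nodes each.
small = [n_inputs, 2, 2, n_outputs]
large = [n_inputs, 2000, 2000, n_outputs]

print(count_parameters(small))  # prints 31
print(count_parameters(large))  # prints 4026001
```

Changing 2 to 2000 is a one-token edit, yet the network grows from 31 parameters to more than four million. That is part of why these models are easy to build and hard to inspect.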

The issue with these deep nets is understanding how the models arrive at their decisions. Tools this easy to use greatly diminish the explainability of a model.

A deep net forms generalizations on its own and stores them in its hidden layers, which are often called the “black box”. Investigating the black box is challenging. Even when the values inside it are known, there is no ready framework for comprehending them.

It is generally accepted that high-performance networks need substantial depth, because depth improves a network’s representational capability and helps it learn increasingly abstract features. In fact, the ability of ResNets to support extremely deep networks, with up to 1000 layers, is a major factor in their success. As a result, very deep models are increasingly used to achieve state-of-the-art performance, and the definition of “deep” has shifted over time from “two or more layers” in the early days of deep learning to “tens or hundreds of layers” in modern models.

In the next article, I am going to discuss Building Multi-Layer Perceptron Neural Network Models. Here, in this article, I tried to explain Deep Neural Networks. I hope you enjoyed this Deep Neural Networks article. Please post your feedback, suggestions, and questions about this article.
