Back to: SQL Server Tutorial For Beginners and Professionals

Lost Update Concurrency Problem in SQL Server with Examples

In this article, I will discuss the Lost Update Concurrency Problem in SQL Server with Examples. Please read our previous article discussing the Dirty Read Concurrency Problem in SQL Server with Examples. At the end of this article, you will understand what the Lost Update Concurrency Problem is, when this problem occurs, and how to overcome this problem in SQL Server with Examples.

What is the Default Transaction Isolation Level in SQL Server?

The default Transaction Isolation level in SQL Server is Read committed. That means if we do not specify any Transaction Isolation Level in SQL Server, then it is Read Committed Transaction Isolation Level by default. With the Read Committed Transaction Isolation Level, we will get all sorts of Concurrency Problems (Lost Update, Non-Repeatable Read, and Phantom Read) except the Dirty Read Concurrency Problem.

What is the Lost Update Concurrency Problem in SQL Server?

The Lost Update Concurrency Problem happens in SQL Server when two or more transactions are allowed to read and update the same data. That means multiple transactions try to update the same data concurrently. However, due to insufficient isolation or synchronization mechanisms, one transaction’s update overwrites another transaction’s update, leading to a “lost” update.

In SQL Server, this problem can arise when transactions operate on the same data concurrently without proper locking mechanisms or isolation levels. Consider the following scenario:

- Transaction A reads a row from a table.

- Transaction B also reads the same row.

- Transaction A updates the row and commits the changes.

- Transaction B updates the same row without checking if it has changed since it was read, effectively overwriting the changes made by Transaction A.

- Transaction B commits the changes, leading to the lost update, as the changes made by Transaction A are lost.

This issue can lead to data inconsistency and incorrect results in applications that rely on the data being updated accurately.

Understanding Lost Update Concurrency Problem

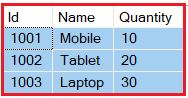

Let’s understand the Lost Update Concurrency Problem in SQL Server with an example. We are going to use the following Products table to understand this concept.

Please use the SQL script below to create and populate the Products table with the required sample data.

-- Create Products table

CREATE TABLE Products

(

Id INT PRIMARY KEY,

Name VARCHAR(100),

Quantity INT

)

Go

-- Insert test data into Products table

INSERT INTO Products values (1001, 'Mobile', 10)

INSERT INTO Products values (1002, 'Tablet', 20)

INSERT INTO Products values (1003, 'Laptop', 30)

Please look at the image below to understand the Lost Update Concurrency Problem in SQL Server. Here, we are creating two transactions that work with the same data.

As you can see in the above image, we have 2 transactions, i.e., Transaction 1 and Transaction 2. Transaction 1 starts first and is processing an order for 1 Mobile. It reads the quantity available for the mobile product as 10 from the database. At the same time, Transaction 2 started processing an order for 2 Mobiles. It also reads the Quantity available as 10 from the database. Transaction 2 makes the sale first and updates the quantity available in the database with a value of 8. Then, Transaction 1 completes the sale and silently overwrites the update to the quantity available as 9, while it should have been updated to 7. This is nothing but the Lost Update Concurrency Problems in SQL Server.

Example to Understand Lost Update Concurrency Problem in SQL Server

Open 2 instances of SQL Server Management Studio. From the first instance, execute the Transaction 1 code, and from the second instance, execute the Transaction 2 code.

Transaction 1 is processing an order for 1 Mobile, while Transaction 2 is processing an order for 2 Mobile. At the end of both transactions, the quantity of the Mobile should be 7 in the database, but we have a value of 9. This is because Transaction 1 silently overwrites the update which is made by Transaction 2. This is called the Lost Update Concurrency Problem in SQL Server.

Transaction1

In the first instance, copy and paste the following code. This is going to be our first transaction.

-- Transaction 1 BEGIN TRANSACTION DECLARE @QunatityAvailable int SELECT @QunatityAvailable = Quantity FROM Products WHERE Id=1001 -- Transaction takes 10 seconds WAITFOR DELAY '00:00:10' SET @QunatityAvailable = @QunatityAvailable - 1 UPDATE Products SET Quantity = @QunatityAvailable WHERE Id=1001 Print @QunatityAvailable COMMIT TRANSACTION

Transaction2

In the second instance, copy and paste the following code. This is going to be our second transaction.

-- Transaction 2 BEGIN TRANSACTION DECLARE @QunatityAvailable int SELECT @QunatityAvailable = Quantity FROM Products WHERE Id=1001 SET @QunatityAvailable = @QunatityAvailable - 2 UPDATE Products SET Quantity = @QunatityAvailable WHERE Id=1001 Print @QunatityAvailable COMMIT TRANSACTION

As you can see, we have not set any transaction isolation level in either transaction, which means it will use the default transaction isolation level, which is Read-committed Transactions. First, run the transaction 1 and immediately run the transaction 2 code. You will see that Transaction 2 is completed first, and then the second transaction is completed. Now, if you verify the Products table, you will see that the quantity for the mobile product is 9 by executing the query below.

SELECT * FROM Products WHERE Id = 1001

You will get the following output once you execute the above query.

How to Overcome the Lost Update Concurrency Problem?

Both Read Uncommitted and Read Committed Transaction Isolation Levels have the Lost Update Concurrency Problem in SQL Server. The other Isolation Levels, such as Repeatable Read, Snapshot, and Serializable, do not have the Lost Update Concurrency Problem. So, let’s run the above Transactions using any of the higher Transaction Isolation Levels, such as Repeatable Read, Snapshot, or Serializable. We will not get the lost update concurrency problem.

Let us see how to overcome the Lost Update Concurrency Problem using the Repeatable Read Transaction Isolation Level in SQL Server. The Repeatable Read Transaction Isolation Level uses additional locking on rows read by the current transaction, preventing those rows from being updated or deleted by other transactions. This solves the Lost Update Concurrency Problem. First, update the quantity to 10 for the mobile product by executing the update query below.

Update Products SET Quantity = 10 WHERE Id = 1001

Modify both transactions as shown below to use the Repeatable Read Isolation Level to solve the Lost Update Concurrency Problem.

Transaction 1

-- Transaction 1 SET TRANSACTION ISOLATION LEVEL REPEATABLE READ BEGIN TRANSACTION DECLARE @QunatityAvailable int SELECT @QunatityAvailable = Quantity FROM Products WHERE Id=1001 -- Transaction takes 10 seconds WAITFOR DELAY '00:00:10' SET @QunatityAvailable = @QunatityAvailable - 1 UPDATE Products SET Quantity = @QunatityAvailable WHERE Id=1001 Print @QunatityAvailable COMMIT TRANSACTION

Transaction 2

-- Transaction 2 SET TRANSACTION ISOLATION LEVEL REPEATABLE READ BEGIN TRANSACTION DECLARE @QunatityAvailable int SELECT @QunatityAvailable = Quantity FROM Products WHERE Id=1001 SET @QunatityAvailable = @QunatityAvailable - 2 UPDATE Products SET Quantity = @QunatityAvailable WHERE Id=1001 Print @QunatityAvailable COMMIT TRANSACTION

Now run Transaction 1 first and then run the second transaction, and you will see that Transaction 1 was completed successfully while Transaction 2 competed with the error. The transaction was deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction.

Once you rerun Transaction 2, the Quantity will be updated correctly, as expected in the database table.

How Does Repeatable Read Transaction Isolation Level Work in SQL Server?

In SQL Server, the Repeatable Read isolation level employs a combination of shared and exclusive locks to ensure that data reads are repeatable across the same transaction. Here’s a breakdown of how each type of lock is used under the Repeatable Read isolation level:

Shared Locks

- Purpose: Used for read operations.

- Behavior: When a transaction reads data, SQL Server places shared locks on the read data. These locks prevent other transactions from modifying the data while the initial transaction is still in progress.

- Duration: Unlike the Read Committed isolation level, where shared locks are released immediately after the read operation, in Repeatable Read, shared locks are retained until the end of the transaction. This ensures that if the data is read multiple times within the same transaction, it remains unchanged, thereby making the reads repeatable.

Exclusive Locks

- Purpose: Used for write operations (INSERT, UPDATE, DELETE).

- Behavior: When a transaction modifies data, the SQL Server acquires exclusive locks on that data. These locks ensure that no other transactions can read or modify the data until the lock is released.

- Duration: Exclusive locks are held until the transaction is completed (committed or rolled back), which is consistent across all isolation levels to ensure data integrity during modifications.

Key Points for Repeatable Read

- Potential for Locking and Blocking: Because shared locks are held for the duration of the transaction, there is a higher potential for locking and blocking compared to lower isolation levels. This can affect concurrency and performance if many transactions access the same data.

- Does Not Prevent Phantom Reads: While Repeatable Read prevents Lost Update, Dirty Reads, and Non-Repeatable Reads concurrency issues, it does not prevent Phantom Reads. Phantom Reads is a situation where a transaction reads a set of rows that satisfy a search condition. Then, reading the same set again, additional rows that satisfy the condition are found because another transaction has inserted them. Preventing phantom reads requires the Serializable isolation level.

The following table shows the relation between the Concurrency Problem and the Transaction Isolation Level.

In the next article, I will discuss the Non-Repeatable Read Concurrency Problem in SQL Server with Examples. In this article, I try to explain the Lost Update Concurrency Problem in SQL Server with examples. I would like to have your feedback. Please post feedback, questions, or comments about this Lost Update Concurrency Problem article.

Registration Open – Microservices with ASP.NET Core Web API

Session Time: 6:30 AM – 8:00 AM IST

Advance your career with our expert-led, hands-on live training program. Get complete course details, the syllabus, registration, and Zoom credentials for demo sessions via the links below.