
Performance Considerations and Best Practices of LINQ

In this article, I will discuss Performance Considerations and Best Practices of LINQ with Examples. Please read our previous article discussing Advanced PLINQ Features with Examples. At the end of this article, you will understand the following pointers:

- Measuring and Optimizing PLINQ Performance

- Understanding and Avoiding Common Problems in PLINQ Programming

- Profiling and Diagnosing PLINQ Applications

- Understanding and Using PLINQ Execution Modes

- Custom Partitioners for Optimal Data Distribution in LINQ

- Best Practices for Writing Efficient and Maintainable PLINQ Code

Measuring and Optimizing PLINQ Performance

Measuring and optimizing the performance of PLINQ (Parallel LINQ) involves a few key strategies and considerations. PLINQ is designed to make it easier to achieve parallelism in .NET applications by allowing LINQ queries to run on multiple processors simultaneously. While this can significantly speed up data processing, especially for CPU-bound tasks, it’s important to measure and optimize PLINQ performance carefully to ensure you’re getting the best out of your parallel queries. Here are some strategies and considerations for doing so:

Measure Performance Baselines:

Before optimizing, establish performance baselines. Use tools like BenchmarkDotNet or the Stopwatch class in .NET to measure the performance of your PLINQ queries compared to their LINQ counterparts. This gives you a clear picture of the performance gains or losses when switching to PLINQ.
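A single Stopwatch reading can be misleading, because the first run also pays for JIT compilation and thread-pool start-up. The minimal sketch below assumes a hypothetical helper named `TimingHarness.MedianMilliseconds`. It runs the workload once untimed as a warm-up, then reports the median of several timed runs. BenchmarkDotNet does this (and much more) properly, so treat this only as a quick stand-in.

```csharp
using System;
using System.Diagnostics;

public static class TimingHarness
{
    // Hypothetical helper: runs `work` once untimed (warm-up), then `runs` timed
    // iterations, and returns the median elapsed time in milliseconds.
    public static double MedianMilliseconds<T>(Func<T> work, int runs = 5)
    {
        if (runs < 1) throw new ArgumentOutOfRangeException(nameof(runs));

        work(); // warm-up: JIT compilation and thread-pool ramp-up are not timed

        var samples = new double[runs];
        for (int i = 0; i < runs; i++)
        {
            var sw = Stopwatch.StartNew();
            work();
            sw.Stop();
            samples[i] = sw.Elapsed.TotalMilliseconds;
        }

        // The median is less sensitive to one-off outliers than the mean
        Array.Sort(samples);
        return runs % 2 == 1
            ? samples[runs / 2]
            : (samples[runs / 2 - 1] + samples[runs / 2]) / 2.0;
    }
}
```

To compare the two versions of a query, pass it once as `() => data.Select(x => (long)x * x).Sum()` and once with `.AsParallel()` inserted before `Select`. Then compare the two medians.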

Understand the Workload:

- CPU-bound vs. I/O-bound: PLINQ is most beneficial for CPU-bound operations. For I/O-bound operations, consider using asynchronous programming models instead.

- Small Workloads: For very small datasets or tasks that are completed very quickly, the overhead of parallelization may not be justified. Test to see if PLINQ is beneficial for your specific scenario.
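The following sketch makes the CPU-bound vs. I/O-bound distinction concrete. It assumes a hypothetical `FetchLengthAsync` method that stands in for a network or disk call, which is simulated here with `Task.Delay`. The calls are awaited concurrently with `Task.WhenAll`, so no thread is blocked while waiting. Wrapping blocking I/O calls in PLINQ, by contrast, would tie up one pool thread per in-flight call.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class IoBoundDemo
{
    // Hypothetical stand-in for an I/O call (HTTP request, file read, ...).
    // Task.Delay waits without occupying a thread, like real asynchronous I/O.
    private static async Task<int> FetchLengthAsync(string name)
    {
        await Task.Delay(100);
        return name.Length;
    }

    // Start all calls, then await them together: the total time is roughly one
    // delay rather than one delay per item. Results keep the input order.
    public static async Task<int[]> FetchAllAsync(IEnumerable<string> names)
    {
        IEnumerable<Task<int>> pending = names.Select(name => FetchLengthAsync(name));
        return await Task.WhenAll(pending);
    }
}
```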

Optimize Data Partitioning:

PLINQ automatically partitions data to distribute work across multiple threads. However, you can influence this behavior:

- WithExecutionMode(ParallelExecutionMode.ForceParallelism) does not change how the data is split. It does, however, force PLINQ to parallelize queries that it would otherwise run sequentially.

- For very large datasets, or when the cost per element varies a lot, consider controlling partitioning yourself, either with Partitioner.Create (System.Collections.Concurrent) or with a custom Partitioner<T>.
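You can influence partitioning without writing a partitioner class. `Partitioner.Create(array, loadBalance: true)` (from `System.Collections.Concurrent`) hands elements to workers in small chunks on demand, instead of giving each worker a fixed range up front. This helps when per-element cost is uneven. In the sketch below, the `Cost` function is a made-up workload whose cost grows with the element's value.

```csharp
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;

public static class PartitioningDemo
{
    // Illustrative workload: larger inputs spin longer, so a static split into
    // equal-sized ranges would leave the worker holding the largest values busiest.
    private static long Cost(int n)
    {
        Thread.SpinWait(n);
        return n;
    }

    public static long SumWithLoadBalancing(int[] data)
    {
        // loadBalance: true => dynamic, on-demand chunks instead of static ranges
        OrderablePartitioner<int> partitioner = Partitioner.Create(data, loadBalance: true);
        return partitioner.AsParallel().Sum(n => Cost(n));
    }
}
```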

Choose the Right Merge Options

The way PLINQ merges results from parallel operations back into a single result set can affect performance:

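- Use WithMergeOptions(ParallelMergeOptions.NotBuffered) when the consumer should receive each result as soon as it is produced, as in streaming scenarios.

- Use WithMergeOptions(ParallelMergeOptions.FullyBuffered) when the consumer needs all results anyway. Buffering the whole output before handing it over can give the best overall throughput.

- The default, AutoBuffered, buffers results in chunks as a compromise between the two.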
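The sketch below consumes a query with a caller-chosen merge option. It uses a made-up `Slow` transform to simulate per-element work. With `ParallelMergeOptions.NotBuffered`, the `foreach` loop can start handling results as soon as any worker produces one. With `FullyBuffered`, the loop receives nothing until the whole query has finished. The set of results is the same either way.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading;

public static class MergeOptionsDemo
{
    // Hypothetical per-element work that takes a few milliseconds.
    private static int Slow(int n)
    {
        Thread.Sleep(5);
        return n * 10;
    }

    public static List<int> Consume(ParallelMergeOptions mergeOptions)
    {
        var received = new List<int>();
        var query = Enumerable.Range(1, 50)
            .AsParallel()
            .WithMergeOptions(mergeOptions)
            .Select(n => Slow(n));

        // The foreach runs on the calling thread; how soon each result arrives
        // here depends on the merge option, not on the query itself.
        foreach (int value in query)
        {
            received.Add(value);
        }
        return received;
    }
}
```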

Manage Side Effects

Be cautious of side effects in PLINQ queries. Operations that modify external state or rely on the order of execution may not behave as expected in a parallel environment. Ensure that your query operations are pure functions when possible.

Monitor and Address Thread Pool Usage

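PLINQ uses the .NET thread pool to run its worker tasks. Heavy use of PLINQ, especially with long-running queries, can starve other parts of your application that rely on the thread pool. Monitor thread pool utilization (for example, with ThreadPool.ThreadCount and ThreadPool.PendingWorkItemCount, available in .NET Core 3.0 and later). If needed, cap long-running queries with WithDegreeOfParallelism.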

Use AsParallel Wisely

Not all LINQ queries benefit from parallelization:

- Apply AsParallel() selectively. Test to ensure that parallelization benefits your specific use case.

- Consider using AsSequential() to switch back to sequential execution for parts of the query that don’t benefit from parallelism.
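The sketch below mixes the two modes. It assumes a hypothetical `ExpensiveScore` method as the costly step. The scoring runs in parallel. Then `AsSequential()` switches back to ordinary LINQ to Objects for the cheap, position-dependent `Select((x, index) => ...)`. `AsOrdered()` is included so that the indexes match the source order.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class AsSequentialDemo
{
    // Hypothetical CPU-heavy step that is worth parallelizing.
    private static int ExpensiveScore(int n)
    {
        int score = 0;
        for (int i = 0; i < 10_000; i++) score = (score + n * i) % 997;
        return score;
    }

    public static List<string> Rank(IEnumerable<int> items)
    {
        return items
            .AsParallel()
            .AsOrdered()                                   // keep source order through the parallel part
            .Select(n => (Item: n, Score: ExpensiveScore(n)))
            .AsSequential()                                // from here on, plain LINQ to Objects
            .Select((x, index) => $"{index}: {x.Item} -> {x.Score}")
            .ToList();
    }
}
```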

Profiling and Diagnostics

Use profiling tools, such as Visual Studio’s Performance Profiler, to identify bottlenecks in PLINQ queries. Look for high CPU usage, excessive thread contention, or other performance issues that might indicate suboptimal parallelization.

Let’s create a simple .NET Console Application to demonstrate measuring and optimizing PLINQ performance. In this example, we’ll apply a simulated CPU-bound calculation to one million numbers, sum the results, and time a sequential LINQ query against its PLINQ equivalent.

using System;
using System.Diagnostics;
using System.Linq;

namespace PLINQDemo
{
    class Program
    {
        static void Main(string[] args)
        {
            // Numbers 1 to 1 million; ComplexFunction returns long, so the sums do not overflow
            int[] numbers = Enumerable.Range(1, 1_000_000).ToArray();

            // Warm up both paths so JIT compilation and thread-pool start-up are not timed
            numbers.Take(1_000).Select(x => ComplexFunction(x)).Sum();
            numbers.Take(1_000).AsParallel().Select(x => ComplexFunction(x)).Sum();

            // Measure performance baseline of sequential LINQ query
            Stopwatch sequentialStopwatch = Stopwatch.StartNew();
            long sequentialSum = numbers.Select(x => ComplexFunction(x)).Sum();
            sequentialStopwatch.Stop();
            Console.WriteLine($"Sequential sum: {sequentialSum}");
            Console.WriteLine($"Sequential execution time: {sequentialStopwatch.ElapsedMilliseconds} ms");

            // Measure performance of parallel LINQ (PLINQ) query
            Stopwatch parallelStopwatch = Stopwatch.StartNew();
            long parallelSum = numbers.AsParallel().Select(x => ComplexFunction(x)).Sum();
            parallelStopwatch.Stop();
            Console.WriteLine($"Parallel sum: {parallelSum}");
            Console.WriteLine($"Parallel execution time: {parallelStopwatch.ElapsedMilliseconds} ms");

            Console.ReadKey();
        }

        // Stand-in for a CPU-bound calculation. It is deliberately cheap, so the
        // parallel speed-up may be small; make it heavier to see PLINQ pay off.
        private static long ComplexFunction(int number)
        {
            return (long)Math.Sqrt(number) + (number / 3);
        }
    }
}

Let’s break down the concepts applied in this example:

- Measuring Performance Baselines: Execution times for both sequential and parallel executions are measured using Stopwatch.

- Understand the Workload: The workload (ComplexFunction) is CPU-bound and does no I/O, which makes it a candidate for parallelization, although each call is very cheap.

- Optimize Data Partitioning: PLINQ partitions the array automatically here. WithExecutionMode(ParallelExecutionMode.ForceParallelism) is a separate setting. It forces parallel execution in cases where PLINQ might otherwise fall back to sequential execution.

- Choose the Right Merge Options: Merge options aren’t explicitly set in this simple example, but adjusting them with WithMergeOptions() could optimize performance for different scenarios.

- Manage Side Effects: The ComplexFunction is side-effect-free, performing a calculation based solely on input without altering the external state.

- Monitor and Address Thread Pool Usage: This example doesn’t specifically address thread pool usage, but in a real-world application, monitoring the thread pool might be necessary to avoid starvation.

- Use AsParallel Wisely: AsParallel() can improve performance for suitable tasks. It’s important to profile both with and without it to confirm its benefits.

- Profiling and Diagnostics: For more complex scenarios, you would use profiling tools to identify bottlenecks and performance issues.

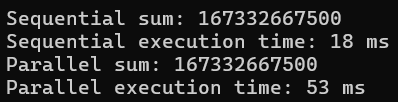

Now, run the application, and you will get the following output. The timing might be varied in your machine:

Understanding and Avoiding Common Problems in PLINQ Programming

When using PLINQ, developers might encounter several common problems. Understanding and avoiding these problems can help you write more efficient and error-free parallel code. Here’s a guide to some of the most common issues and how to address them:

Unnecessary Parallelization

- Problem: Not every query benefits from parallelization. For simple queries or operations on small data sets, the overhead of parallelization can actually result in worse performance than a sequential query.

- Avoidance: Only use PLINQ when the data set is large enough or the query is complex enough to justify the overhead. Benchmarking your code can help determine if parallelization is beneficial.

Assuming Order Preservation

- Problem: PLINQ queries do not guarantee that the order of the input sequence is preserved in the output. This can be problematic for operations where order matters.

- Avoidance: If the order of elements is important, you can use the AsOrdered method to preserve order. However, this may reduce the performance benefits of parallelization.
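The following sketch shows the difference. With `AsOrdered()`, the squares come back in source order. Without it, the same query may return them in any order. The contents are identical in both cases; only the sequence differs.

```csharp
using System.Linq;

public static class OrderingDemo
{
    public static int[] SquaresOrdered(int count) =>
        Enumerable.Range(1, count)
            .AsParallel()
            .AsOrdered()               // results follow source order
            .Select(n => n * n)
            .ToArray();

    public static int[] SquaresUnordered(int count) =>
        Enumerable.Range(1, count)
            .AsParallel()              // no ordering guarantee
            .Select(n => n * n)
            .ToArray();
}
```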

Improper Exception Handling

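- Problem: When a delegate in a PLINQ query throws, PLINQ stops the query and rethrows on the calling thread as an AggregateException. Its InnerExceptions property holds the exception or exceptions raised on the worker threads, and there may be more than one. This can complicate exception handling.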

- Avoidance: Use try-catch blocks to catch AggregateException and then iterate over its InnerExceptions property to handle individual exceptions as needed.

Over-Parallelization

- Problem: Using too many threads can lead to contention for resources and diminishing returns. The .NET thread pool manages the number of threads efficiently, but overly aggressive parallelization can still cause performance issues.

- Avoidance: Let PLINQ manage the degree of parallelism automatically, or use the WithDegreeOfParallelism method to limit the number of concurrent operations if you find over-parallelization is a problem.
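The sketch below caps a query at a given number of concurrent workers with `WithDegreeOfParallelism`. To show that the cap holds, it tracks the highest number of delegates running at the same time using `Interlocked`. These counters are instrumentation for the demo only; production code does not need them.

```csharp
using System.Linq;
using System.Threading;

public static class DegreeOfParallelismDemo
{
    public static int MaxObservedConcurrency(int degree)
    {
        int running = 0, maxRunning = 0;

        Enumerable.Range(1, 200)
            .AsParallel()
            .WithDegreeOfParallelism(degree)   // at most `degree` tasks process the query
            .ForAll(n =>
            {
                int now = Interlocked.Increment(ref running);

                // Record the peak with a compare-and-swap loop
                int seen;
                while (now > (seen = Volatile.Read(ref maxRunning)) &&
                       Interlocked.CompareExchange(ref maxRunning, now, seen) != seen)
                {
                }

                Thread.SpinWait(20_000);       // simulated CPU work
                Interlocked.Decrement(ref running);
            });

        return maxRunning;
    }
}
```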

Ignoring Side Effects

- Problem: PLINQ queries that produce side effects (modifying shared data structures, for instance) can result in unpredictable outcomes due to the concurrent execution of query steps.

- Avoidance: Avoid side effects in your queries. If you must modify data, ensure proper synchronization using locking mechanisms like lock in C# or use thread-safe collections.
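The sketch below contrasts an unsafe shared counter with two safe options; the method names are illustrative. `total += n` from several threads can lose updates. `Interlocked.Add` makes each update atomic, although all workers still contend on one variable. Letting PLINQ's `Sum` do the aggregation avoids shared state altogether.

```csharp
using System.Linq;
using System.Threading;

public static class SharedStateDemo
{
    // Unsafe: += is a read-modify-write, so concurrent updates can be lost.
    public static long RacySum(int count)
    {
        long total = 0;
        Enumerable.Range(1, count).AsParallel().ForAll(n => total += n);
        return total;
    }

    // Safe: each addition is atomic, but every element contends on one variable.
    public static long InterlockedSum(int count)
    {
        long total = 0;
        Enumerable.Range(1, count).AsParallel().ForAll(n => Interlocked.Add(ref total, n));
        return total;
    }

    // Preferred: no shared state; PLINQ combines per-partition results itself.
    public static long QuerySum(int count) =>
        Enumerable.Range(1, count).AsParallel().Sum(n => (long)n);
}
```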

Incorrect Use of Aggregation

- Problem: Incorrectly aggregating results in parallel queries can lead to incorrect results due to the concurrent modification of shared variables.

- Avoidance: Use PLINQ’s aggregation operations like Aggregate, which are designed to handle parallel execution correctly, or ensure that any custom aggregation logic is thread-safe.
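For custom aggregation, one thread-safe approach is the `ParallelEnumerable.Aggregate` overload that takes a seed factory. Each partition gets its own accumulator, so no locking is needed, and the partial results are combined at the end. The sketch below computes a sum of squares and a count in one pass. The tuple shape is just an illustration.

```csharp
using System.Linq;

public static class AggregateDemo
{
    public static (long SumOfSquares, int Count) SumOfSquares(int[] values) =>
        values.AsParallel().Aggregate(
            // seedFactory: a fresh accumulator for each partition
            () => (Sum: 0L, Count: 0),
            // updateAccumulatorFunc: runs inside one partition, on one thread
            (acc, v) => (acc.Sum + (long)v * v, acc.Count + 1),
            // combineAccumulatorsFunc: merges the per-partition results
            (a, b) => (a.Sum + b.Sum, a.Count + b.Count),
            // resultSelector: final projection of the combined accumulator
            acc => (acc.Sum, acc.Count));
}
```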

Misunderstanding Cancellation Support

- Problem: PLINQ supports query cancellation through a CancellationToken passed to WithCancellation. Not using this feature, or using it incorrectly, can leave applications unresponsive during long-running operations.

- Avoidance: Attach a CancellationToken to the query with WithCancellation so that it can be canceled promptly; a canceled query throws OperationCanceledException. If individual delegates run for a long time, also call token.ThrowIfCancellationRequested() inside them so that a single slow element does not delay cancellation.
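The sketch below wires cancellation into a query with `WithCancellation`. It assumes a token that fires after a short timeout via `CancellationTokenSource.CancelAfter`, and uses `Thread.SpinWait` to make each element expensive. When only this external token is canceled, PLINQ stops the query and throws `OperationCanceledException` rather than an `AggregateException`, so that is the exception to catch.

```csharp
using System;
using System.Linq;
using System.Threading;

public static class CancellationDemo
{
    // Returns true if the query was canceled before it finished.
    public static bool RunWithTimeout(TimeSpan timeout)
    {
        using var cts = new CancellationTokenSource();
        cts.CancelAfter(timeout);

        try
        {
            int count = ParallelEnumerable.Range(1, 50_000_000)
                .WithCancellation(cts.Token)
                .Count(n =>
                {
                    Thread.SpinWait(200);       // simulated expensive check per element
                    return n % 7 == 0;
                });
            Console.WriteLine($"Completed: {count} matches");
            return false;
        }
        catch (OperationCanceledException)
        {
            Console.WriteLine("Query canceled.");
            return true;
        }
    }
}
```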

Example: Demonstrating PLINQ Problem

This example will show the common problems: incorrect handling of exceptions and side effects, which can lead to unpredictable results or application crashes.

using System;

using System.Linq;

namespace PLINQDemo

{

class Program

{

static void Main(string[] args)

{

var numbers = Enumerable.Range(1, 20);

try

{

numbers.AsParallel().ForAll(n =>

{

if (n == 10)

{

throw new InvalidOperationException("Just a test exception!");

}

Console.WriteLine($"Processing number: {n}");

});

}

catch (AggregateException ae)

{

Console.WriteLine("An aggregate exception occurred.");

foreach (var e in ae.InnerExceptions)

{

Console.WriteLine(e.Message);

}

}

Console.ReadKey();

}

}

}

Problem Demonstration:

- Side Effects: The Console.WriteLine within the ForAll action causes a side effect (outputting to the console), which might execute in an unpredictable order due to parallel execution.

- Exception Handling: An exception is thrown intentionally when processing the number 10. This exception is caught as part of an AggregateException, demonstrating how exceptions in PLINQ operations might be less straightforward to handle.

Example: Avoiding PLINQ Problems

Now, let’s refactor the code to avoid these pitfalls by properly handling exceptions and side effects.

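using System;
using System.Linq;

namespace PLINQDemo
{
    class Program
    {
        static void Main(string[] args)
        {
            var numbers = Enumerable.Range(1, 20);

            // Do the work in parallel, but keep the results in source order.
            // ForAll ignores AsOrdered, so the results are consumed with foreach instead.
            var results = numbers
                .AsParallel()
                .AsOrdered()
                .Select(n =>
                {
                    // Simulate work that fails for one input
                    if (n == 10)
                    {
                        throw new InvalidOperationException("Just a test exception!");
                    }
                    return n * n; // no side effects inside the parallel delegate
                });

            try
            {
                // Console output happens here, on the calling thread, in source order
                foreach (var square in results)
                {
                    Console.WriteLine($"Processed square: {square}");
                }
            }
            catch (AggregateException ae)
            {
                Console.WriteLine("Handling exceptions gracefully.");
                ae.Handle(e =>
                {
                    if (e is InvalidOperationException)
                    {
                        Console.WriteLine($"Handled an InvalidOperationException: {e.Message}");
                        return true;  // this exception is "handled"
                    }
                    return false;     // anything else is rethrown by Handle
                });
            }

            Console.ReadKey();
        }
    }
}

Improvements Made:

- Order Preservation: ForAll ignores AsOrdered(), so output printed from inside ForAll is never guaranteed to be ordered. The refactored query keeps AsOrdered() but uses Select, and a foreach loop on the calling thread consumes the results in source order.

- Side Effects: The parallel delegate now only computes a value. The Console.WriteLine side effect runs sequentially in the foreach loop.

- Exception Handling: The catch block uses AggregateException.Handle to deal explicitly with InvalidOperationException. Any other exception type is rethrown by Handle in a new AggregateException. Because the query stops at the first failure, only some of the squares for 1 to 9 may be printed before the exception surfaces.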

Profiling and Diagnosing PLINQ Applications

Profiling and Diagnosing PLINQ (Parallel Language Integrated Query) applications is crucial for ensuring optimal performance and scalability of your .NET applications that utilize parallel processing. PLINQ can significantly speed up data processing by utilizing multiple processors concurrently. However, effectively utilizing this requires understanding its behavior in your application and identifying bottlenecks or inefficiencies. Here’s a structured approach to profiling and diagnosing PLINQ applications:

Understanding PLINQ Basics

Before diving into profiling, ensure you have a solid understanding of how PLINQ works, its execution models (e.g., parallel vs. sequential fallback), and common operators. Knowing the fundamentals will help you better interpret profiling data and diagnose issues.

Establishing Baselines

- Performance Metrics: Collect baseline performance metrics without PLINQ to understand the potential benefits and to have a comparison point.

- Correctness Checks: Verify that the application produces the correct results both with and without PLINQ to ensure parallelization hasn’t introduced errors.

Profiling Tools

Several tools can help you profile and diagnose PLINQ applications:

- Visual Studio Diagnostic Tools: Use the Performance Profiler in Visual Studio to analyze CPU usage, memory allocation, and more. It can help identify hot paths and threads that are underutilized or overburdened.

- Concurrency Visualizer: An extension for Visual Studio that provides a detailed view of application threads, parallel tasks, and their interactions. It’s particularly useful for understanding how well PLINQ queries are parallelized.

- dotTrace and dotMemory by JetBrains: These tools offer profiling capabilities for .NET applications, providing insights into CPU usage, memory leaks, and threading issues.

Identifying Common Issues

When profiling and diagnosing PLINQ applications, look out for:

- Poor Query Parallelization: Sometimes, PLINQ might not parallelize a query as expected, leading to suboptimal performance. This can be due to the nature of the data, the operations being performed, or PLINQ’s own heuristics.

- Thread Pool Starvation: Heavy use of PLINQ can exhaust the thread pool, leading to delays in starting new tasks. Monitor thread pool usage and consider adjusting its size if necessary.

- Synchronization Overheads: Excessive use of locks or other synchronization primitives in a parallel query can severely limit its performance.

- Improper Use of PLINQ Features: Misusing certain PLINQ operators or not leveraging optimizations like AsSequential() where appropriate can degrade performance.

Optimization Strategies

Based on your findings, consider the following optimization strategies:

- Query Refactoring: Simplify or break down complex queries into more efficient, smaller operations.

- Custom Partitioners: Use or implement custom partitioners to improve data distribution across tasks.

- Adjust Degree of Parallelism: Use the WithDegreeOfParallelism method to set the maximum number of concurrently executing tasks that PLINQ uses for the query.

- Data Structures: Opt for concurrent collections or data structures that minimize locking and contention.

Let’s walk through a simple example that utilizes PLINQ, showing how to profile and optimize it. We’ll create a program that processes a large list of numbers, performing a computationally intensive operation on each number in parallel, and then we’ll discuss how to diagnose and optimize its performance.

Edit the Program.cs file to include the following code. It simulates an asynchronous data fetch, then uses PLINQ (inside Task.Run, so the calling thread is not blocked) to keep only the prime numbers and double each one:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Threading.Tasks;

namespace PLINQDemo

{

class Program

{

static async Task Main(string[] args)

{

try

{

// Asynchronously fetch data

List<int> numbers = await FetchDataAsync();

// Use PLINQ to process data in parallel and asynchronously

var processedNumbers = await Task.Run(() =>

numbers.AsParallel()

.Where(n => IsPrime(n)) // For example, filter prime numbers

.Select(n => n * 2) // For example, double each number

.ToList());

// Display the results

Console.WriteLine("Processed Numbers:");

processedNumbers.ForEach(Console.WriteLine);

}

catch (AggregateException e)

{

foreach (var inner in e.InnerExceptions)

{

Console.WriteLine(inner.Message);

}

}

Console.ReadKey();

}

static async Task<List<int>> FetchDataAsync()

{

// Simulate an asynchronous data fetch operation

return await Task.Run(() =>

{

var numbers = Enumerable.Range(1, 100000).ToList();

return numbers;

});

}

static bool IsPrime(int number)

{

if (number <= 1) return false;

if (number == 2) return true;

if (number % 2 == 0) return false;

var boundary = (int)Math.Floor(Math.Sqrt(number));

for (int i = 3; i <= boundary; i += 2)

{

if (number % i == 0) return false;

}

return true;

}

}

}

Initial Profiling

Before profiling, run the application to make sure it works correctly, and note the execution time. To profile the application in Visual Studio:

- Open Visual Studio and load your project.

- Go to Debug > Performance Profiler.

- Choose the CPU Usage tool and click Start.

- After the run, analyze the CPU usage to identify hot paths and potential bottlenecks.

Identifying Issues

The profiling results will probably show high CPU usage, which indicates that the application is computationally intensive. The profile can also reveal whether the work is unevenly distributed across cores, or whether there is overhead worth optimizing.

Optimization

Based on the profiling results, consider optimizations. For example, you could experiment with custom partitioners, the execution mode, or the degree of parallelism. The following version uses a simpler workload (squaring each number and keeping the even squares) to show where these settings go. Modify the Program.cs file as follows:

using System;
using System.Diagnostics;
using System.Linq;

namespace PLINQDemo
{
    class Program
    {
        static void Main(string[] args)
        {
            // Generate a large range of integers
            var numbers = Enumerable.Range(1, 1_000_000);

            // Square the numbers (as long, because 1,000,000 squared does not fit in an int)
            // and keep the even squares
            var query = numbers
                .AsParallel()
                .WithExecutionMode(ParallelExecutionMode.ForceParallelism)
                .WithDegreeOfParallelism(Environment.ProcessorCount) // matches the usual default; change it to experiment
                .Select(n => (long)n * n)
                .Where(square => square % 2 == 0);

            // PLINQ queries are deferred: ToList() runs the query, so time that call
            var watch = Stopwatch.StartNew();
            var evenSquares = query.ToList();
            watch.Stop();

            Console.WriteLine($"Processing completed in {watch.ElapsedMilliseconds} ms. Found {evenSquares.Count} even squares.");
            Console.ReadKey();
        }
    }
}

Output (the time will vary on your machine): Processing completed in 188 ms. Found 500000 even squares.

After applying optimizations, re-profile the application and compare the results. You may see different execution patterns and, depending on the workload and your hardware, improved performance.

Understanding and Using PLINQ Execution Modes

Understanding and Using PLINQ execution modes is crucial for developers looking to optimize their applications. Here’s a comprehensive overview:

Understanding PLINQ

PLINQ is built on top of the Task Parallel Library (TPL) and enables LINQ queries to run in parallel, automatically distributing the work across multiple threads. It is most beneficial when working with large collections or performing complex operations that can be parallelized.

Execution Modes in PLINQ

PLINQ operates in two primary execution modes:

- Default Execution Mode (ParallelExecutionMode.Default): PLINQ examines the shape of the query and parallelizes it when it expects a speed-up. For some query shapes, it may run all or part of the query sequentially because parallelizing it is likely to cost more than it saves. An example is some queries that use positional operators, such as indexed Select or ElementAt, after a filtering or reordering operator. Order preservation is a separate setting, controlled by AsOrdered and AsUnordered, not by the execution mode.

- Forced Parallel Execution: You can force PLINQ to parallelize queries by calling the WithExecutionMode method with ParallelExecutionMode.ForceParallelism. PLINQ then parallelizes the whole query, even for query shapes where it would otherwise fall back to sequential execution. This is useful when you are confident that parallel execution will improve performance, but use it judiciously, because it can increase overhead in some cases.

Using Execution Modes

Understanding when and how to use these execution modes can significantly impact the performance of your application. Here are some tips:

- Analyze the Query: Before forcing parallelism, analyze the query to ensure that it is a good candidate for parallelization. Large data processing tasks with independent iterations are ideal.

- Benchmark: Always benchmark the performance of your PLINQ queries in both default and forced parallel execution modes. What works better in one scenario might not work in another.

- Consider Data Size: Smaller data sets may not benefit from parallelization due to the overhead of thread management. Parallelization is generally more effective for larger data sets.

- Preserve Order When Needed: Use AsOrdered to preserve the order of the source sequence if the order is important for subsequent operations or output. Be aware that ordering can reduce parallel efficiency.

- Handle Exceptions: Parallel queries can throw AggregateException when exceptions occur in multiple tasks. Ensure your code is prepared to handle these exceptions appropriately.

Let’s create a .NET Console Application example that demonstrates the use of PLINQ, focusing on understanding the execution modes, specifically the default execution mode and the forced parallel execution mode. This example will compare the performance and ordering implications of each mode.

We will perform a simple operation on a large collection of integers, filtering them based on whether they are prime numbers. We will compare the execution time and the number of primes found using the default execution mode versus the forced parallel execution mode.

using System;

using System.Diagnostics;

using System.Linq;

namespace PLINQDemo

{

class Program

{

//Main Method: Default vs. Forced Parallel Execution

static void Main(string[] args)

{

var numbers = Enumerable.Range(1, 100000);

// Measure execution time with default execution mode

var stopwatch = Stopwatch.StartNew();

var primeNumbersDefault = numbers.AsParallel()

.Where(IsPrime)

.ToList();

stopwatch.Stop();

Console.WriteLine($"Default Execution Time: {stopwatch.ElapsedMilliseconds} ms");

// Measure execution time with forced parallel execution

stopwatch.Restart();

var primeNumbersForced = numbers.AsParallel()

.WithExecutionMode(ParallelExecutionMode.ForceParallelism)

.Where(IsPrime)

.ToList();

stopwatch.Stop();

Console.WriteLine($"Forced Parallel Execution Time: {stopwatch.ElapsedMilliseconds} ms");

// Optional: Compare the ordering and count

Console.WriteLine($"Prime numbers count (Default): {primeNumbersDefault.Count}");

Console.WriteLine($"Prime numbers count (Forced): {primeNumbersForced.Count}");

Console.ReadKey();

}

//Helper Method: Checking Prime Numbers

//This is a CPU-intensive operation, making it a good candidate for parallelization.

public static bool IsPrime(int number)

{

if (number < 2) return false;

for (int i = 2; i <= Math.Sqrt(number); i++)

{

if (number % i == 0) return false;

}

return true;

}

}

}

When you run this application, it will:

- Generate a sequence of integers.

- Use PLINQ to filter out prime numbers first in the default execution mode, then with forced parallelism.

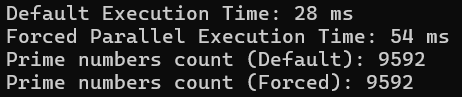

- Measure and display the time taken for each operation, as shown in the below image. The time will vary on your machine.

Observations

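- Performance: Both queries run in parallel, so their times are likely to be similar. A Where(IsPrime) filter over a range is a query shape that PLINQ already parallelizes in default mode. The first query also pays one-time costs such as JIT compilation and thread-pool warm-up, which can make it look slower. To see PLINQ's benefit for CPU-bound work like prime checking, add a sequential run (without AsParallel) as a baseline. The exact improvement will depend on your system’s CPU.

- Ordering: Neither execution mode preserves source order. Ordering is controlled by AsOrdered, not by WithExecutionMode, so without AsOrdered the primes in either list may appear out of order.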

- Execution Time: Forced parallelism may not always be faster, especially for operations with overhead or smaller data sets. The execution time can vary, highlighting the importance of benchmarking with your specific data and operations.

Custom Partitioners for Optimal Data Distribution in LINQ

Custom partitioners for PLINQ can significantly enhance the performance and efficiency of your parallel processing tasks by ensuring an optimal distribution of data across multiple threads or tasks. Custom partitioners allow you to control how data is divided and assigned to parallel operations, which is crucial for achieving high performance in data-intensive applications. This becomes especially important when dealing with unevenly distributed data or when certain operations require more processing power than others.

Understanding Partitioning in LINQ

Partitioning refers to the process of dividing data into smaller subsets (partitions) that can be processed in parallel. By default, PLINQ splits arrays and IList<T> sources into equal-sized ranges, and hands out other IEnumerable<T> sources in chunks on demand. Neither strategy is optimal for every scenario. For example, if some data elements require significantly more processing time than others, equal-sized ranges can leave some threads overburdened while others sit idle.

Why Use Custom Partitioners in LINQ?

Custom partitioners are used when the default partitioning behavior does not meet the specific needs of your application. Reasons to use custom partitioners include:

- Non-uniform Data: When data elements vary significantly in size or complexity, custom partitioning can ensure that each partition has a roughly equal amount of work.

- Control Over Data Locality: For operations that benefit from data locality (such as those involving caches), custom partitioners can ensure that related data elements are processed together.

- Specialized Data Structures: If your data is stored in a specialized data structure, a custom partitioner can be designed to efficiently divide that data structure for parallel processing.

Implementing a Custom Partitioner in LINQ

Implementing a custom partitioner involves creating a class that inherits from the Partitioner<T> class and overriding its methods to define how data is partitioned. Here’s a basic outline of the steps involved:

- Inherit from Partitioner<T>: Create a class that inherits from Partitioner<T>, where T is the type of elements in the collection to be partitioned.

- Override GetPartitions: This method receives the number of partitions requested and returns an IList<IEnumerator<T>>, with one enumerator per partition. To support dynamic partitioning as well, you would also override SupportsDynamicPartitions and GetDynamicPartitions.

- Implement Partitioning Logic: Inside GetPartitions, implement your custom partitioning logic to divide the input data as required.

Example: Custom Range Partitioner

Let’s create a simple .NET Console Application that demonstrates how to use a custom partitioner. In this example, we will process a range of integers. The custom partitioner splits the range into contiguous chunks that each contain the same number of integers, one chunk for each partition that PLINQ requests.

The example squares each integer from 1 to 10,000. Equal-sized chunks balance the work only when every item costs about the same. If processing time grew with the integer's value, the chunk holding the largest integers would take the longest, and the chunk boundaries would need to be chosen by cost rather than by count.

using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

namespace PLINQDemo
{
    class Program
    {
        // Main method: process a range of integers with a custom partitioner
        static void Main(string[] args)
        {
            // Create an instance of the custom partitioner for the range 1..10,000
            var partitioner = new RangePartitioner(1, 10000);

            // Use the custom partitioner in a Parallel LINQ (PLINQ) query.
            // PLINQ calls GetPartitions with its degree of parallelism.
            var results = partitioner.AsParallel()
                .Select(x =>
                {
                    // Simulate work by squaring the integer
                    int result = x * x;
                    Console.WriteLine($"Processed {x} in Task {Task.CurrentId}");
                    return result;
                })
                .ToList();

            Console.WriteLine($"Processing complete ({results.Count} results). Press any key to exit.");
            Console.ReadKey();
        }
    }

    // Splits the inclusive range [from, to] into contiguous chunks of (almost) equal size
    public class RangePartitioner : Partitioner<int>
    {
        private readonly int _from;
        private readonly int _to;

        public RangePartitioner(int from, int to)
        {
            _from = from;
            _to = to;
        }

        public override IList<IEnumerator<int>> GetPartitions(int partitionCount)
        {
            var partitions = new List<IEnumerator<int>>(partitionCount);
            int totalElements = _to - _from + 1;
            int elementsPerPartition = totalElements / partitionCount;
            int remainingElements = totalElements % partitionCount;
            int currentStart = _from;

            for (int i = 0; i < partitionCount; i++)
            {
                // The first `remainingElements` partitions take one extra element
                int elementsInThisPartition = elementsPerPartition + (i < remainingElements ? 1 : 0);
                int currentEnd = currentStart + elementsInThisPartition; // exclusive
                partitions.Add(EnumerateRange(currentStart, currentEnd).GetEnumerator());
                currentStart = currentEnd;
            }

            return partitions;
        }

        private static IEnumerable<int> EnumerateRange(int start, int end)
        {
            for (int i = start; i < end; i++)
            {
                yield return i;
            }
        }
    }
}

When you run the application, it squares each integer in the range. The Console.WriteLine inside the Select method shows which task processed each integer.

The output shows integers being processed by different tasks. Because the custom partitioner gives each task one contiguous range, you will typically see runs of consecutive integers under the same task ID. The lines won't appear in overall sequential order, because the partitions are processed in parallel.
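The `RangePartitioner` above gives each partition the same number of integers. If the cost of processing an integer grows with its value, the last partition ends up with the most work. The sketch below is a cost-aware variant under that assumption (cost proportional to the integer's value); the class name `WeightedRangePartitioner` is hypothetical. It chooses contiguous boundaries so that each partition's sum of values is roughly equal.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical cost-aware partitioner: assumes the cost of item i is proportional to i,
// so it splits [from, to] into contiguous ranges with roughly equal sums of values.
public class WeightedRangePartitioner : Partitioner<int>
{
    private readonly int _from;
    private readonly int _to;

    public WeightedRangePartitioner(int from, int to)
    {
        if (from < 1 || to < from || to == int.MaxValue)
            throw new ArgumentException("Expected 1 <= from <= to < int.MaxValue.");
        _from = from;
        _to = to;
    }

    public override IList<IEnumerator<int>> GetPartitions(int partitionCount)
    {
        if (partitionCount < 1) throw new ArgumentOutOfRangeException(nameof(partitionCount));

        // Total cost = sum of all values in the range (arithmetic series)
        long count = (long)_to - _from + 1;
        long totalCost = ((long)_from + _to) * count / 2;

        var partitions = new List<IEnumerator<int>>(partitionCount);
        int start = _from;
        long accumulated = 0;

        for (int p = 0; p < partitionCount; p++)
        {
            bool isLast = p == partitionCount - 1;
            // Cumulative cost this partition should reach (decimal avoids overflow)
            long target = (long)((decimal)totalCost * (p + 1) / partitionCount);

            int end = start; // exclusive
            while (end <= _to && (isLast || accumulated < target))
            {
                accumulated += end;
                end++;
            }

            partitions.Add(Enumerate(start, end).GetEnumerator());
            start = end;
        }
        return partitions;
    }

    private static IEnumerable<int> Enumerate(int start, int endExclusive)
    {
        for (int i = start; i < endExclusive; i++) yield return i;
    }
}
```

To try it, replace the `RangePartitioner` instance in `Main` with `new WeightedRangePartitioner(1, 10000)`; the rest of the PLINQ query stays the same.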

Best Practices for Writing Efficient and Maintainable PLINQ Code

Parallel LINQ (PLINQ) is a parallel implementation of LINQ to Objects and aims to make it easier to write parallel and high-performance code in .NET. However, writing efficient and maintainable PLINQ code requires following some best practices. Here are key guidelines to consider:

- Understand the Data and Workload: Before parallelizing with PLINQ, understand the nature of your data and the workload. Parallelization is most beneficial when the workload involves CPU-bound operations and operates on large datasets. For I/O-bound operations or small datasets, the overhead of parallelization might not be justified.

- Use AsParallel Wisely: The AsParallel method is used to parallelize queries. However, not all queries benefit from parallel execution. Evaluate whether the performance benefits of parallelization outweigh the overhead. Testing with and without AsParallel can provide insights.

- Preserve Order Sparingly: By default, PLINQ does not preserve the order of the source sequence. You can use AsOrdered to preserve order but at a potential performance cost. Only use AsOrdered when the order of elements is crucial to the outcome of the query.

- Limit Degree of Parallelism: PLINQ automatically determines the number of threads to use for a query, but you can override this with WithDegreeOfParallelism. This can be useful to prevent oversubscription of resources or to limit CPU usage. However, manually setting the degree of parallelism can also lead to suboptimal performance if not done carefully.

- Handle Exceptions Properly: PLINQ queries can throw AggregateException when one or more exceptions occur during query execution. Handle exceptions by catching AggregateException and inspecting its InnerExceptions property to deal with individual exceptions as needed.

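- Use Cancellation Tokens: You can make long-running PLINQ queries cancellable by passing a CancellationToken to WithCancellation. This lets you cancel query execution gracefully in response to user actions or other events. When the token is cancelled, the query throws an OperationCanceledException (not wrapped in an AggregateException), so catch it separately.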

- Avoid Shared State: PLINQ queries should not modify shared state, because doing so invites race conditions and data corruption. Instead, write pure functions without side effects and let PLINQ operators such as Sum, Count, or Aggregate combine the per-thread results. If shared state is unavoidable, use synchronization primitives (for example, lock or Interlocked) to manage access, but be aware that contention can reduce performance.

- Profile and Measure: Use profiling tools to measure the performance of your PLINQ queries. Performance improvements from parallelization can vary based on many factors, including data size, CPU characteristics, and workload nature. Profiling helps identify bottlenecks and areas for optimization.

- Consider Alternative Approaches: In some cases, other parallelization techniques may suit your needs better than PLINQ. For example, the lower-level Task Parallel Library (TPL) APIs, such as Parallel.For, Parallel.ForEach, and Task, give more control over how work is scheduled, and async/await is usually a better fit for I/O-bound work.

- Document and Review: Document your PLINQ queries and the reasons for choosing parallelization. Regularly review your PLINQ usage as part of your code maintenance process to ensure it remains the best choice as your application evolves.
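The shared-state and ordering guidelines can be made concrete with a small sketch. It uses a hypothetical SharedStateDemo class and a simple trial-division IsPrime helper, neither of which comes from the article. It contrasts a query that lets PLINQ combine per-thread results with one that increments a shared counter through Interlocked.Increment. Both return the same count, but the second funnels every match through a single contended variable. The last method uses AsOrdered only because the caller needs the primes in source order.

```csharp
using System;
using System.Linq;
using System.Threading;

// Hypothetical demo class contrasting shared-state and aggregation-based PLINQ queries.
public static class SharedStateDemo
{
    // Simple trial-division primality test (CPU-bound, no side effects).
    static bool IsPrime(int n)
    {
        if (n < 2) return false;
        for (int d = 2; (long)d * d <= n; d++)
        {
            if (n % d == 0) return false;
        }
        return true;
    }

    // Preferred: a pure predicate, with PLINQ merging the per-thread counts.
    public static int CountPrimesWithAggregation(int limit) =>
        Enumerable.Range(0, limit).AsParallel().Count(IsPrime);

    // Correct but less scalable: every match contends on one shared counter.
    public static int CountPrimesWithSharedCounter(int limit)
    {
        int count = 0;
        Enumerable.Range(0, limit).AsParallel().ForAll(n =>
        {
            if (IsPrime(n)) Interlocked.Increment(ref count);
        });
        return count;
    }

    // AsOrdered keeps results in source order; only pay for it when order matters.
    public static int[] FirstPrimesInOrder(int limit, int take) =>
        Enumerable.Range(0, limit).AsParallel().AsOrdered().Where(IsPrime).Take(take).ToArray();

    public static void Main()
    {
        Console.WriteLine(CountPrimesWithAggregation(100_000));
        Console.WriteLine(CountPrimesWithSharedCounter(100_000));
        Console.WriteLine(string.Join(", ", FirstPrimesInOrder(100_000, 10)));
    }
}
```

Dropping AsOrdered from FirstPrimesInOrder would let Take return any ten primes the workers happen to produce first, which is why ordering is requested there and nowhere else.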
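A minimal cancellation sketch follows, built around a hypothetical SumOfSquares helper. WithCancellation attaches the token to the query. When the token is cancelled, PLINQ throws OperationCanceledException itself rather than wrapping it in an AggregateException, so that exception is caught on its own.

```csharp
using System;
using System.Linq;
using System.Threading;

// Hypothetical demo class showing a cancellable PLINQ query.
public static class CancellationDemo
{
    // Returns the sum of squares of 0..count-1, or null if the query was cancelled.
    public static long? SumOfSquares(int count, CancellationToken token)
    {
        try
        {
            return Enumerable.Range(0, count)
                .AsParallel()
                .WithCancellation(token)
                .Select(i => (long)i * i)
                .Sum();
        }
        catch (OperationCanceledException)
        {
            // A cancelled PLINQ query surfaces OperationCanceledException directly.
            return null;
        }
    }

    public static void Main()
    {
        using var cts = new CancellationTokenSource();
        Console.WriteLine(SumOfSquares(1_000, cts.Token));

        // Cancel, then run again: this time the query reports cancellation.
        cts.Cancel();
        Console.WriteLine(SumOfSquares(1_000, cts.Token) is null ? "Cancelled" : "Completed");
    }
}
```

In a real application, the token would typically come from a CancellationTokenSource that a UI action cancels or that is configured with CancelAfter for a timeout.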

Example:

Imagine we have a collection of file objects, each representing a text file with properties for FileName and Content. Our goal is to parallelize the operation of analyzing the content of each file to count the number of words, simulating a CPU-bound task.

using System;
using System.Linq;

namespace PLINQDemo
{
    class Program
    {
        static void Main(string[] args)
        {
            // Simulate a list of file objects
            var files = Enumerable.Range(1, 100).Select(i => new File
            {
                FileName = $"File{i}.txt",
                Content = new String('A', i * 1000) // Simulating file content with repeated characters
            }).ToList();

            try
            {
                // Build the parallel query. PLINQ queries are deferred, so nothing runs
                // until the foreach below enumerates the results.
                var processedFiles = files.AsParallel()
                    .WithDegreeOfParallelism(Environment.ProcessorCount) // Cap workers at the logical processor count (close to PLINQ's default)
                    .Select(file => new
                    {
                        file.FileName,
                        WordCount = CountWords(file.Content)
                    });

                // Output results. Without AsOrdered, files may be printed in any order.
                foreach (var file in processedFiles)
                {
                    Console.WriteLine($"{file.FileName} has {file.WordCount} words.");
                }
            }
            catch (AggregateException ae)
            {
                // Exceptions thrown inside the query are wrapped in an AggregateException
                foreach (var e in ae.InnerExceptions)
                {
                    Console.WriteLine($"An error occurred: {e.Message}");
                }
            }

            Console.ReadKey();
        }

        // Placeholder for a real word counter: assumes every 5 characters form one word
        static int CountWords(string content)
        {
            return content.Length / 5;
        }

        class File
        {
            public string FileName { get; set; }
            public string Content { get; set; }
        }
    }
}

Key Points in This Example

- Custom Object Processing: This example moves beyond simple integers to work with a collection of custom objects, demonstrating how PLINQ can be applied to a wide range of data types and scenarios.

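- Simulated CPU-bound Task: The CountWords method stands in for a CPU-bound operation applied to each item, such as analyzing file content in a real application. As written, it only divides the string length by 5, which takes constant time. In this demo, the parallelization overhead will therefore likely outweigh any gain, whereas a real word counter that scans every character would do enough work per item to benefit.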

- Parallel Processing Benefits: Processing the files in parallel (AsParallel) can shorten total processing time when the per-file work is expensive or the dataset is large. Compare against a sequential version to confirm the gain on your own data.

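- Exception Handling with AggregateException: Shows how to handle exceptions that occur during parallel processing so the application can manage errors gracefully. Because PLINQ queries are deferred, the query actually runs (and throws) during the foreach loop, which is why the loop sits inside the try block.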
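The example's CountWords only divides the string length by 5, which is constant-time work. The sketch below uses a hypothetical WordCountBenchmark class and synthetic input. It replaces that method with a counter that scans every character for whitespace-separated words, then times a sequential run and a PLINQ run with Stopwatch. The timings depend on the machine and are rough; for trustworthy numbers, a harness such as BenchmarkDotNet is the better tool.

```csharp
using System;
using System.Diagnostics;
using System.Linq;

// Hypothetical benchmark class: a scanning word counter timed sequentially and with PLINQ.
public static class WordCountBenchmark
{
    // Counts whitespace-separated words by visiting every character (O(n) work per file).
    public static int CountWords(string content)
    {
        int words = 0;
        bool inWord = false;
        foreach (char c in content)
        {
            if (char.IsWhiteSpace(c))
            {
                inWord = false;
            }
            else if (!inWord)
            {
                inWord = true;
                words++;
            }
        }
        return words;
    }

    public static void Main()
    {
        // Synthetic input: file i contains "lorem ipsum " repeated i * 100 times.
        var contents = Enumerable.Range(1, 100)
            .Select(i => string.Concat(Enumerable.Repeat("lorem ipsum ", i * 100)))
            .ToList();

        // Warm up the JIT and the thread pool so the first timing is not inflated.
        contents.Take(4).Sum(c => CountWords(c));
        contents.Take(4).AsParallel().Sum(c => CountWords(c));

        var sw = Stopwatch.StartNew();
        int sequentialTotal = contents.Sum(c => CountWords(c));
        sw.Stop();
        Console.WriteLine($"Sequential: {sequentialTotal} words in {sw.ElapsedMilliseconds} ms");

        sw.Restart();
        int parallelTotal = contents.AsParallel().Sum(c => CountWords(c));
        sw.Stop();
        Console.WriteLine($"Parallel:   {parallelTotal} words in {sw.ElapsedMilliseconds} ms");
    }
}
```

Both runs should report the same total. Whether the parallel run is faster depends on the core count and the amount of work per file, which is exactly what this kind of measurement is meant to reveal.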

In the next article, I will discuss Debugging and Troubleshooting PLINQ Applications with Examples. In this article, I explained the Performance Considerations and Best Practices of LINQ with Examples. I hope you enjoy this Performance Considerations and Best Practices of LINQ with Examples article.
