Back to: ADO.NET Core Tutorial For Beginners and Professionals

Performance Improvements in ADO.NET Core

In this article, I will discuss Performance Improvements in ADO.NET Core with Examples. Please read our previous article discussing Dynamic SQL using ADO.NET Core with Examples.

ADO.NET Core Performance Improvements

When working with ADO.NET Core in a .NET Core Application, developers often face performance challenges related to inefficient database operations, improper resource management, and redundant network calls. These inefficiencies can result in slower application performance, higher resource consumption, and increased server load. Understanding the common coding problems that cause performance degradation and how to overcome them is critical to optimizing your application’s efficiency. In this post, we aim to cover those problems and provide solutions for improving performance.

Inefficient Data Retrieval

Problem:

One of the most common performance bottlenecks in ADO.NET Core applications is inefficient data retrieval. This can happen when the application retrieves more data than needed, or when queries are not optimized.

Solution:

- Use SELECT Statements Wisely: Only retrieve the columns that are needed for the operation. Avoid SELECT * and instead specify the exact columns in your query.

- Pagination: For large datasets, use pagination (using OFFSET and FETCH) to load only a subset of data at a time.

- Filtering: Always apply filters (WHERE clause) to restrict the data set to only the relevant rows.

Example:

SELECT Id, FirstName, LastName, FROM Employees WHERE Department = 'HR' ORDER BY LastName OFFSET 0 ROWS FETCH NEXT 100 ROWS ONLY;

Inefficient Use of Connections

Problem:

Opening and closing database connections frequently without proper management can degrade performance. Each connection incurs a network round-trip and requires resources.

Solution:

- Connection Pooling: Use ADO.NET’s connection pooling to minimize the overhead of opening and closing connections. By default, ADO.NET manages a pool of reusable connections, which significantly reduces connection-related overhead.

- Using “using” Statements: Always use using statements for connections, commands, and data readers to ensure that connections are properly closed and returned to the pool, even in case of an exception.

Example:

using (var connection = new SqlConnection(connectionString))

{

connection.Open();

// Execute your queries

}

Blocking and Deadlocks

Problem:

Deadlocks or blocking can occur if multiple threads or operations try to access the same resources concurrently, especially in high-traffic applications.

Solution:

- Use Asynchronous Methods: To avoid blocking the main thread, use asynchronous methods like ExecuteReaderAsync, ExecuteNonQueryAsync, and ExecuteScalarAsync. This will allow the application to handle more operations concurrently without waiting for database queries to complete.

- Locking: Avoid excessive use of LOCK or WITH (NOLOCK) queries, as they can lead to blocking or inconsistent results. Implement proper transaction isolation levels to avoid unnecessary locks.

Example:

using (var connection = new SqlConnection(connectionString))

{

await connection.OpenAsync();

var command = new SqlCommand("SELECT * FROM Orders", connection);

using (var reader = await command.ExecuteReaderAsync())

{

while (await reader.ReadAsync())

{

// Process data

}

}

}

Not Using Stored Procedures

Problem:

A common mistake is relying on inline SQL queries instead of using stored procedures. Inline queries can be less optimized, harder to maintain, and vulnerable to SQL injection.

Solution:

- Stored Procedures: Use stored procedures to encapsulate your SQL logic. Stored procedures are precompiled, which means that the database engine does not have to re-parse the SQL each time the procedure is executed.

- Parameterization: Always use parameters in your stored procedures to prevent SQL injection attacks and improve query performance by reusing execution plans.

Example:

using (var command = new SqlCommand("usp_GetEmployeeDetails", connection))

{

command.CommandType = CommandType.StoredProcedure;

command.Parameters.AddWithValue("@EmployeeId", 101);

var reader = command.ExecuteReader();

// Handle results

}

Inefficient Data Handling with DataSets and DataTables

Problem:

Using DataSet and DataTable can introduce unnecessary overhead, especially when dealing with large amounts of data. These objects keep a lot of unnecessary metadata that consumes memory and slows down performance.

Solution:

- Avoid DataSet for Simple Queries: For simpler queries, use SqlDataReader instead of DataSet or DataTable, as it is faster and uses less memory. SqlDataReader works in a forward-only, read-only manner, making it efficient for large result sets.

- Use DataTable Only When Necessary: If you need to work with multiple result sets or need to perform complex manipulations, consider using DataTable, but avoid loading unnecessary rows and columns.

Example:

using (var command = new SqlCommand("SELECT * FROM Orders", connection))

{

using (var reader = command.ExecuteReader())

{

while (reader.Read())

{

// Process row data

}

}

}

Excessive Round Trips to the Database

Problem:

Making multiple small queries to the database can significantly increase the overhead due to the network round-trip and transaction handling.

Solution:

- Batch Queries: Combine multiple queries into a single transaction or use batch execution where possible. This reduces the number of round trips to the database.

- Stored Procedures for Multiple Operations: Consider using stored procedures for complex operations that involve multiple steps, as this will allow you to execute them as a single transaction.

Example:

using (var command = new SqlCommand("usp_UpdateEmployeeAndSalary", connection))

{

command.CommandType = CommandType.StoredProcedure;

command.Parameters.AddWithValue("@EmployeeId", 101);

command.Parameters.AddWithValue("@NewSalary", 50000);

command.ExecuteNonQuery();

}

Unprepared Commands

Problem:

Failing to call SqlCommand.Prepare() when executing the same command repeatedly. Impact: Each execution forces the server to recompile and optimize the command, adding unnecessary overhead on CPU and network.

Solution:

- Call command.Prepare() once before executing the command multiple times to cache the execution plan and reduce server‑side work.

- Reuse the same SqlCommand instance when looping.

Example:

using (var conn = new SqlConnection(connectionString))

{

conn.Open();

using (var cmd = new SqlCommand("INSERT INTO AuditLog(Event, CreatedAt) VALUES (@Event, @CreatedAt)", conn))

{

cmd.Parameters.Add(new SqlParameter("@Event", SqlDbType.VarChar, 200));

cmd.Parameters.Add(new SqlParameter("@CreatedAt", SqlDbType.DateTime));

cmd.Prepare(); // Cache the execution plan once

foreach (var evt in events)

{

cmd.Parameters["@Event"].Value = evt;

cmd.Parameters["@CreatedAt"].Value = DateTime.UtcNow;

cmd.ExecuteNonQuery(); // Fast, reused plan

}

}

}

Inefficient Query Design and Indexing

Problem:

Inefficient SQL query design, such as missing indexes or non-optimal queries, can severely degrade performance, particularly on larger datasets.

Solution:

- Optimize Queries: Use proper indexing and query design to reduce execution times. Ensure that the queries are using indexes efficiently and avoid operations that lead to full table scans.

Example:

CREATE INDEX idx_Employee_Department ON Employees(Department);

Opening and Closing Connections Inside Loops

Problem:

Opening and closing a SqlConnection on every record or iteration adds TCP handshakes and authentication overhead.

foreach (var id in ids)

{

using var conn = new SqlConnection(connString);

conn.Open();

using var cmd = conn.CreateCommand();

cmd.CommandText = "SELECT Name FROM Users WHERE Id = @Id";

cmd.Parameters.AddWithValue("@Id", id);

var name = cmd.ExecuteScalar();

Console.WriteLine(name);

}

Each Open()/Close() can take tens to hundreds of milliseconds under load.

Solution:

Open the connection once, outside the loop, and reuse it.

using var conn = new SqlConnection(connString);

await conn.OpenAsync();

using var cmd = conn.CreateCommand();

cmd.CommandText = "SELECT Name FROM Users WHERE Id = @Id";

var idParam = cmd.Parameters.Add("@Id", SqlDbType.Int);

foreach (var id in ids)

{

idParam.Value = id;

var name = await cmd.ExecuteScalarAsync();

Console.WriteLine(name);

}

Executing Individual Inserts/Updates Instead of Bulk

Problem:

Looping thousands of single‐row INSERT statements multiplies round trips.

foreach (var record in records)

{

using var cmd = new SqlCommand("INSERT … VALUES (@…)", conn);

// add parameters…

await cmd.ExecuteNonQueryAsync();

}

Solutions:

SqlBulkCopy for inserts:

using var bulk = new SqlBulkCopy(conn); bulk.DestinationTableName = "MyTable"; await bulk.WriteToServerAsync(myDataTable);

Table‑Valued Parameters with a single command:

{

TypeName = "dbo.MyItemType",

Value = myDataTable

};

cmd.CommandText = "EXEC usp_InsertItems @Items";

cmd.Parameters.Add(tvp);

await cmd.ExecuteNonQueryAsync();

SQL Server Database Setup

Please run this script in SQL Server Management Studio to create the database, tables, and insert dummy data into the tables for testing purposes.

-- Create OrdersDB database

CREATE DATABASE OrdersDB;

GO

-- Swich to OrdersDB database

USE OrdersDB;

GO

-- Create Employees table

CREATE TABLE Employees (

EmployeeId INT IDENTITY(1,1) PRIMARY KEY,

FirstName NVARCHAR(50),

LastName NVARCHAR(50),

Department NVARCHAR(50)

);

-- Create Orders table

CREATE TABLE Orders (

OrderId INT IDENTITY(1,1) PRIMARY KEY,

EmployeeId INT FOREIGN KEY REFERENCES Employees(EmployeeId),

OrderDate DATETIME,

Amount DECIMAL(10,2)

);

-- Insert dummy data for Employees

-- Prevents SQL Server from returning the "x rows affected" message for each insert.

-- This makes the loop run faster and produces cleaner output.

SET NOCOUNT ON;

-- Declares and initializes a loop counter variable @i with value 1

DECLARE @i INT = 1;

-- Begins a loop that will run 1000 times to insert 1000 employee records.

WHILE @i <= 1000

-- Starts the block of code to execute during each loop iteration.

BEGIN

-- Inserts a new row into Employees.

-- This ensures an even distribution of employees across 3 departments.

INSERT INTO Employees (FirstName, LastName, Department)

VALUES (

CONCAT('First', @i),

CONCAT('Last', @i),

CASE WHEN @i % 3 = 0 THEN 'HR'

WHEN @i % 3 = 1 THEN 'Sales'

ELSE 'IT' END

);

-- Increments the loop counter by 1.

SET @i += 1;

-- Ends the loop block for inserting into Employees.

END

-- Insert dummy data for Orders

-- Resets the loop counter @i to 1 before the next loop starts.

SET @i = 1;

-- Starts a loop that will run 5000 times to insert 5000 order records.

WHILE @i <= 5000

-- Begins the block of code for the loop body.

BEGIN

INSERT INTO Orders (EmployeeId, OrderDate, Amount)

VALUES (

-- NEWID() generates a unique value each time.

-- CHECKSUM(NEWID()) gives a seed for RAND().

-- RAND(seed) produces a pseudo-random float between 0 and 1.

-- Multiply by 1000, floor it, then add 1 → gives a random employee ID between 1 and 1000 (assuming those are valid EmployeeIds).

FLOOR(RAND(CHECKSUM(NEWID())) * 1000) + 1,

-- Generates a random number of days between 0–364.

-- Subtracts that many days from today.

-- Result: Random order date within the last year.

DATEADD(DAY, - (FLOOR(RAND(CHECKSUM(NEWID())) * 365)), GETDATE()),

-- Generates a random decimal number between 0 and 1000.

RAND(CHECKSUM(NEWID())) * 1000

);

SET @i += 1;

END

GO

Bad Code Example with Performance Issues:

The following example demonstrates how the bad code might look that violates best practices and demonstrates performance degradation. The example code is self-explained, so please read the comment lines for a better understanding.

using Microsoft.Data.SqlClient;

using System.Diagnostics;

namespace PerfermanceBenchmark

{

public class Program

{

static void Main()

{

// Connection string to OrdersDB

string connectionString = "Server=LAPTOP-6P5NK25R\\SQLSERVER2022DEV;Database=OrdersDB;Trusted_Connection=True;TrustServerCertificate=True;";

var stopwatch = new Stopwatch();

// Individual INSERTs inside a loop, opening/closing each time:

stopwatch.Start();

for (int i = 1; i <= 10000; i++)

{

// Opening and closing connection on each iteration — very costly

var conn = new SqlConnection(connectionString);

conn.Open();

// Inline SQL, no parameters — potential SQL injection and no prep

var cmd = new SqlCommand(

$"INSERT INTO Employees (FirstName, LastName, Department) VALUES ('BFirst{i}','BLast{i}','IT')",

conn);

cmd.ExecuteNonQuery(); // synchronous call blocks thread

conn.Close(); // returns to pool but still overhead

}

stopwatch.Stop();

Console.WriteLine($"Bad Insert Loop: {stopwatch.ElapsedMilliseconds} ms");

stopwatch.Reset();

// Repeated SELECT inside loop with Open/Close and no filtering:

stopwatch.Start();

foreach (var empId in new int[] { 10, 20, 30, 40, 50 })

{

using (var conn = new SqlConnection(connectionString))

{

conn.Open(); // open per query

var cmd = new SqlCommand("SELECT * FROM Employees", conn);

using (var reader = cmd.ExecuteReader()) // no WHERE clause, returns all rows each time

{

while (reader.Read())

{

// Just iterating—wasted work

}

}

}

}

stopwatch.Stop();

Console.WriteLine($"Bad Select Loop: {stopwatch.ElapsedMilliseconds} ms");

}

}

}

Common Problems:

- Opening and closing the SqlConnection on every iteration leads to multiple network round-trips.

- Inline SQL string concatenation (no parameters, no Prepare()).

- Synchronous ExecuteNonQuery/ExecuteReader blocking calls.

- Running a SELECT query in every loop iteration (for each ID) is inefficient, as it increases network traffic and server load.

- Using SELECT * fetches all columns, including unnecessary data, which is not optimal for performance.

- Selecting all rows repeatedly with no filter.

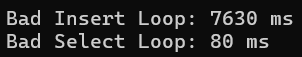

Output:

Good Code Example with Performance Improvements

Now, let’s optimize the previous example by implementing best practices. The example code is self-explained, so please read the comment lines for a better understanding.

using Microsoft.Data.SqlClient;

using System.Data;

using System.Diagnostics;

namespace PerfermanceBenchmark

{

public class Program

{

static async Task Main()

{

// Connection string to OrdersDB

string connectionString = "Server=LAPTOP-6P5NK25R\\SQLSERVER2022DEV;Database=OrdersDB;Trusted_Connection=True;TrustServerCertificate=True;";

var stopwatch = new Stopwatch();

// Bulk insert via SqlBulkCopy

var dataTable = new DataTable();

dataTable.Columns.Add("FirstName", typeof(string));

dataTable.Columns.Add("LastName", typeof(string));

dataTable.Columns.Add("Department", typeof(string));

for (int i = 1; i <= 10000; i++)

{

dataTable.Rows.Add($"First{i}", $"Last{i}", "IT");

}

stopwatch.Start();

using (var conn = new SqlConnection(connectionString))

{

await conn.OpenAsync(); // one open

using (var bulk = new SqlBulkCopy(conn))

{

bulk.DestinationTableName = "Employees";

await bulk.WriteToServerAsync(dataTable);

}

}

stopwatch.Stop();

Console.WriteLine($"Good Bulk Insert: {stopwatch.ElapsedMilliseconds} ms");

stopwatch.Reset();

// Prepared, parameterized, async SELECT with reuse

stopwatch.Start();

using (var conn = new SqlConnection(connectionString))

{

await conn.OpenAsync(); // one open

var cmd = new SqlCommand(

"SELECT FirstName, LastName FROM Employees WHERE EmployeeId = @Id", conn);

cmd.Parameters.Add("@Id", SqlDbType.Int);

cmd.Prepare(); // compile once

foreach (var id in new int[] { 10, 20, 30, 40, 50 })

{

cmd.Parameters["@Id"].Value = id;

using (var reader = await cmd.ExecuteReaderAsync()) // async call

{

while (await reader.ReadAsync())

{

// process single row

}

}

}

}

stopwatch.Stop();

Console.WriteLine($"Good Select Loop: {stopwatch.ElapsedMilliseconds} ms");

}

}

}

Optimizations Applied

- The connection is opened once outside the loop and reused, avoiding multiple opening/closing operations.

- Bulk insert via SqlBulkCopy instead of 10000 individual INSERTs.

- The use of OpenAsync, WriteToServerAsync, and ExecuteReaderAsync ensures that the main thread isn’t blocked, and the application can handle more tasks concurrently.

- Parameterized commands with Prepare(), avoiding re‑compilation.

- Only the necessary columns are retrieved (SELECT Id, Name), improving performance by reducing the data load.

- The command is parameterized properly, preventing SQL injection and improving query plan reuse.

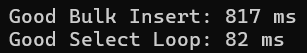

Output:

Expected Performance Improvement

The optimized version minimizes:

- Round Trips: By opening the connection once and reusing it for all queries, the application reduces the overhead associated with network round-trip.

- Inefficient Queries: By only selecting required columns and rows, the system loads less data, which reduces memory usage and speeds up processing.

- Blocking: Asynchronous execution improves scalability by freeing up the main thread, enabling parallel processing of tasks.

By Comparing Both Versions:

Optimizing performance in ADO.NET Core applications involves a careful balance between efficient query design, resource management, and proper usage of the tools at your disposal. By addressing common issues such as inefficient data retrieval, unnecessary database connections, and excessive round trips, you can significantly improve your application’s speed and scalability.

In the next article, I will discuss ADO.NET Core Using Dependency Injection with Examples. In this article, I explain Performance Improvements in ADO.NET Core with examples. I hope you enjoy this article on Performance Improvements in ADO.NET Core with Examples.